How to train a neural network with ReLU and softmax layers?

The neural network for MNIST classification consists of 3 layers:

1) input layer [784]

2) hidden layer [500], ReLU activation

3) output layer [10], softmax activation
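
A minimal pure-Python sketch of the forward pass through the 784 → 500 → 10 network above, for illustration only. The function names, the He-style initialization (std = sqrt(2 / n_in)) and the max-subtraction inside softmax are my assumptions, not the asker's code; a real implementation would normally use NumPy or a framework.

```python
import math
import random


def relu(v):
    """Element-wise ReLU: max(0, x)."""
    return [max(0.0, x) for x in v]


def softmax(z):
    """Numerically stable softmax: subtract the largest logit before exponentiating."""
    m = max(z)
    exps = [math.exp(x - m) for x in z]
    s = sum(exps)
    return [e / s for e in exps]


def dense(x, W, b):
    """Affine layer: out[k] = sum_j x[j] * W[j][k] + b[k]; W has len(x) rows and len(b) columns."""
    return [sum(x[j] * W[j][k] for j in range(len(x))) + b[k] for k in range(len(b))]


def init_layer(n_in, n_out, rng):
    """Hypothetical He initialization (std = sqrt(2 / n_in)), a common choice before ReLU."""
    std = math.sqrt(2.0 / n_in)
    W = [[rng.gauss(0.0, std) for _ in range(n_out)] for _ in range(n_in)]
    b = [0.0] * n_out
    return W, b


def forward(x, W1, b1, W2, b2):
    """Input -> hidden (ReLU) -> output (softmax); returns hidden activations and class probabilities."""
    h = relu(dense(x, W1, b1))
    p = softmax(dense(h, W2, b2))
    return h, p
```

Usage: build the layers with `init_layer(784, 500, rng)` and `init_layer(500, 10, rng)`, then call `forward` on one flattened, scaled 28×28 image; the returned probabilities sum to 1.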

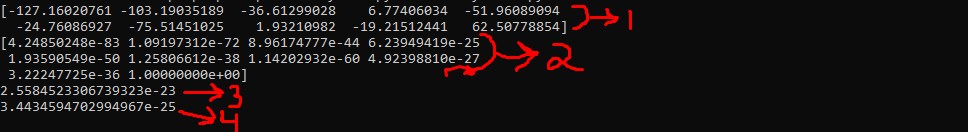

In the screenshot:

1) the input to the output layer;

2) the output of the output layer (after applying softmax);

3) the maximum value of the derivative with respect to the weights connecting layers 2 and 3 (hidden and output);

4) the average value of the same derivative.

Obviously, with such small values the network will not learn. What should I do?

Well, at first I got stuck on "Obviously, with such small values the network will not learn" :)) Why do you think so?
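
One reason small numbers are not alarming, assuming the loss is cross-entropy on top of softmax (the question does not say which loss is used): the gradient with respect to the weight connecting hidden unit j to output unit k is h[j]·(p[k] − y[k]), where y is the one-hot target. The entries of p − y sum to zero, so every row of this gradient sums to zero and the plain (signed) average over all hidden-to-output weights is always about 0; rows for hidden units that ReLU switched off (h[j] = 0) are exactly zero. A near-zero mean by itself therefore does not show that the network cannot learn. The sketch below computes this gradient and checks it against a finite difference; all names, including the `grad_stats` helper, are hypothetical.

```python
import math


def softmax(z):
    """Numerically stable softmax."""
    m = max(z)
    exps = [math.exp(v - m) for v in z]
    s = sum(exps)
    return [e / s for e in exps]


def output_probs(h, W2, b2):
    """Output layer: z[k] = sum_j h[j] * W2[j][k] + b2[k], followed by softmax."""
    z = [sum(h[j] * W2[j][k] for j in range(len(h))) + b2[k] for k in range(len(b2))]
    return softmax(z)


def cross_entropy(p, label):
    """Assumed loss: negative log of the probability assigned to the true class."""
    return -math.log(p[label])


def grad_W2(h, p, label):
    """dL/dW2[j][k] = h[j] * (p[k] - y[k]) for softmax + cross-entropy with one-hot y."""
    delta = [p[k] - (1.0 if k == label else 0.0) for k in range(len(p))]
    return [[h[j] * delta[k] for k in range(len(p))] for j in range(len(h))]


def grad_stats(g):
    """Hypothetical helper: (largest absolute entry, plain signed mean) of the gradient matrix."""
    flat = [v for row in g for v in row]
    return max(abs(v) for v in flat), sum(flat) / len(flat)
```

With a mini-batch the per-sample gradients are averaged, and an average of matrices whose rows sum to zero still has rows that sum to zero, so the same observation holds.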

As a rule, data are fed to a neural network in scaled (normalized) form. So first convert the matrices X_train and X_test from integer values in the range [0, 255] (the pixel intensities of MNIST images) to real values in [0, 1].
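
A minimal sketch of that scaling step, assuming the images are stored as plain Python lists of integer pixels (the helper name `scale_pixels` is hypothetical). With NumPy arrays the same step is usually written `X_train.astype("float32") / 255.0`.

```python
def scale_pixels(images):
    """Map integer pixel intensities in [0, 255] to floats in [0, 1].

    `images` is a list of images, each a flat list of ints (784 values for MNIST).
    """
    return [[px / 255.0 for px in img] for img in images]
```

For example, `X_train = scale_pixels(X_train)` and `X_test = scale_pixels(X_test)`; both sets must be scaled the same way.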

Your network architecture is probably not the right one. Use this example as a reference: link, which classifies the MNIST dataset with 99.25% accuracy.
