How to create a series of PDFs in PHP 7.3.5 via dompdf?

Hello, I'll try to explain the essence:

php 7.3.5

dompdf latest version from https://github.com/dompdf/

1. I want to download Wikipedia.

1.1. take an article, for example $url = ' https://ru.wikipedia.org/wiki/Host ';

1.2. we pump out the necessary links to related articles from the necessary div-a in the article p. 1.1.:

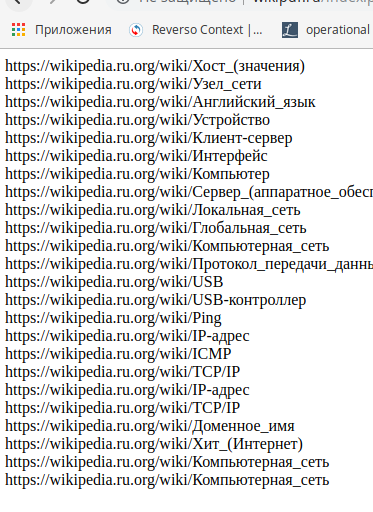

it turns out like this:

1.3. then I want to create a pdf in a cycle for each link from the picture, but it turns out only to make the whole code for the 1st link

:

```php
<?php

// reference the Dompdf namespace
require_once 'dompdf/autoload.inc.php';

use Dompdf\Dompdf;
use Dompdf\Options;

//download image
/*function file_get_contents_curl($url) {
    $ch = curl_init();
    curl_setopt($ch, CURLOPT_HEADER, 0);
    curl_setopt($ch, CURLOPT_RETURNTRANSFER, 1);
    curl_setopt($ch, CURLOPT_URL, $url);
    $data = curl_exec($ch);
    curl_close($ch);
    return $data;
}

//$data = file_get_contents_curl( $url );
//$fp = 'logo-1.png';
//file_put_contents( $fp, $data );
//echo "File downloaded!"
*/

// instantiate and use the dompdf class
//get HTML by URL
$url = 'https://ru.wikipedia.org/wiki/Хост';
$html = file_get_contents($url);
$dom = new DOMDocument();
$dom->loadHTML($html);

//get all a-tags from div.mw-body-content
$links = [];
$xPath = new DOMXPath($dom);
$anchorTags = $xPath->evaluate("//div[@class=\"mw-body-content\"]//a/@href");
foreach ($anchorTags as $anchorTag) {
    $links[] = $anchorTag->nodeValue;
}

foreach ($links as $link) {
    //decode url to cyrillic
    $linkDecoded = urldecode($link);

    //if url contains unneeded words, skip it
    if ( (strpos($linkDecoded, 'Категори') === false) && (strpos($linkDecoded, 'Википедия') === false) &&
         (strpos($linkDecoded, 'index.php') === false) && (strpos($linkDecoded, 'Файл') === false) &&
         (strpos($linkDecoded, '#') === false) ) {

        //if $linkDecoded[0] === '/' -> add 'https://ru.wikipedia.org'
        if ($linkDecoded[0] === '/') {
            $linkDecoded = 'https://wikipedia.ru.org' . $linkDecoded . '<br>';

            // 5 === len(wiki/); https://stackoverflow.com/questions/11290279/get-everything-after-word
            $title = substr($linkDecoded, strpos($linkDecoded, 'wiki/') + 5);
            echo $linkDecoded;

            //DOMpdf -> pdf
            $options = new Options();
            $options->set('defaultFont', 'DejaVu Sans');

            // this line wasn't here before; remove it if need be
            $html = file_get_contents($url);

            $dompdf = new Dompdf($options);
            $dompdf->loadHtml($html);

            //(Optional) Setup the paper size and orientation
            $dompdf->setPaper('A4', 'landscape');

            // Render the HTML as PDF
            $dompdf->render();

            // Output the generated PDF to Browser
            //title is basename === https://wikipedia.ru.org/wiki/ -> Протокол_передачи_данных
            $dompdf->stream($title);
        }
    }
}
```


The problem is that the PDF is sent to the browser inside the loop (by the stream() call that follows render()), so only one iteration of the loop is processed. You need to build up the HTML in the loop using concatenation and only then submit one large HTML document for conversion to PDF.

You should end up with something like this:

```php
$html = '';

foreach ($links as $link) {
    //decode url to cyrillic
    $linkDecoded = urldecode($link);

    //if url contains unneeded words, skip it
    if ( (strpos($linkDecoded, 'Категори') === false) && (strpos($linkDecoded, 'Википедия') === false) &&
         (strpos($linkDecoded, 'index.php') === false) && (strpos($linkDecoded, 'Файл') === false) &&
         (strpos($linkDecoded, '#') === false) ) {

        //if $linkDecoded[0] === '/' -> add 'https://ru.wikipedia.org'
        if ($linkDecoded[0] === '/') {
            $linkDecoded = 'https://wikipedia.ru.org' . $linkDecoded . '<br>';

            // 5 === len(wiki/); https://stackoverflow.com/questions/11290279/get-everything-after-word
            $title = substr($linkDecoded, strpos($linkDecoded, 'wiki/') + 5);
            echo $linkDecoded;

            // this line wasn't here before; remove it if need be
            $html .= file_get_contents($url);
        }
    }
}

//DOMpdf -> pdf
$options = new Options();
$options->set('defaultFont', 'DejaVu Sans');

$dompdf = new Dompdf($options);
$dompdf->loadHtml($html);

//(Optional) Setup the paper size and orientation
$dompdf->setPaper('A4', 'landscape');

// Render the HTML as PDF
$dompdf->render();

// Output the generated PDF to Browser
//title is basename === https://wikipedia.ru.org/wiki/ -> Протокол_передачи_данных
$dompdf->stream($title);
```

Thanks, as always I was let down by inattention:

instead of

$linkDecoded = 'https://wikipedia.ru.org' . $linkDecoded . '<br>';

what is needed is

$linkDecoded = 'https://ru.wikipedia.org' . $linkDecoded;
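The corrected URL construction, together with the filtering done in the loop, could be pulled into two small helpers so that a mistake like the swapped domain is easier to spot. This is only a sketch: the function names articleUrlFromHref and titleFromArticleUrl are made up for illustration, and requiring the href to start with /wiki/ is an assumption that is slightly stricter than the thread's check for a leading slash.

```php
<?php
// Hypothetical helper (not from the thread): turns an href taken from the
// article body into an absolute article URL, or null if it should be skipped.
function articleUrlFromHref(string $href, string $base = 'https://ru.wikipedia.org'): ?string
{
    // Decode percent-encoding so the Cyrillic filter words can match.
    $decoded = urldecode($href);

    // The same substrings the loop in the thread filters out.
    $skip = ['Категори', 'Википедия', 'index.php', 'Файл', '#'];
    foreach ($skip as $needle) {
        if (strpos($decoded, $needle) !== false) {
            return null;
        }
    }

    // Assumption: keep only site-relative article links (/wiki/...).
    if (strpos($decoded, '/wiki/') !== 0) {
        return null;
    }

    // Note: no '<br>' is appended here; the URL must stay a plain URL.
    return $base . $decoded;
}

// Hypothetical helper: the part after "wiki/", used as the PDF title.
// Assumes the URL was produced by articleUrlFromHref().
function titleFromArticleUrl(string $url): string
{
    return substr($url, strpos($url, 'wiki/') + 5);
}
```

With these, the loop body reduces to calling articleUrlFromHref($link) and skipping the link when the result is null.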

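Since the question asks for a series of PDFs, one alternative to concatenating everything into a single document is to write each article to its own file on disk rather than streaming it to the browser. The sketch below assumes dompdf has already been loaded via require_once 'dompdf/autoload.inc.php' as in the question. The function names pdfPathForTitle, saveHtmlAsPdf and saveArticlesAsPdfs and the output directory are hypothetical. Only the Dompdf calls already used in the thread, plus output(), which returns the rendered PDF as a string, are taken from the library.

```php
<?php
// Assumes dompdf is already loaded as in the question:
//   require_once 'dompdf/autoload.inc.php';

// Hypothetical helper: builds a file path for an article title,
// replacing characters that are awkward in file names.
function pdfPathForTitle(string $title, string $dir): string
{
    $safe = preg_replace('~[/\\\\:*?"<>|]+~u', '_', $title);
    return rtrim($dir, '/') . '/' . $safe . '.pdf';
}

// Hypothetical helper: renders one HTML page into one PDF file.
// A fresh Dompdf instance is created for every document.
function saveHtmlAsPdf(string $html, string $path): void
{
    $options = new \Dompdf\Options();
    $options->set('defaultFont', 'DejaVu Sans');

    $dompdf = new \Dompdf\Dompdf($options);
    $dompdf->loadHtml($html);
    $dompdf->setPaper('A4', 'landscape');
    $dompdf->render();

    // output() returns the PDF as a string instead of sending it to the browser.
    file_put_contents($path, $dompdf->output());
}

// Hypothetical driver: one PDF per article URL, written into $dir.
// Assumes every URL has the form https://ru.wikipedia.org/wiki/Title.
function saveArticlesAsPdfs(array $articleUrls, string $dir): void
{
    foreach ($articleUrls as $url) {
        $html = file_get_contents($url);
        if ($html === false) {
            continue; // skip pages that could not be fetched
        }
        $title = substr($url, strpos($url, 'wiki/') + 5);
        saveHtmlAsPdf($html, pdfPathForTitle($title, $dir));
    }
}
```

Because nothing is sent to the browser inside the loop, every iteration can finish; the script can then print a list of the generated files or pack them into an archive.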