Why does IO wait time on different disks affect each other?

Hello!

We have a server with an SSD and an HDD. The SSD holds a MySQL database (application data), and the HDD holds a MongoDB database (file storage). A second server with the same configuration and the same data serves as a mirror and backup. After the two fell out of sync, we had to copy all MongoDB data from the main server to the backup server. The data set is 2 TB, so a plain full backup is not an option: it would stop production for almost a day, which is unacceptable. We decided to copy the data from one database to the other gradually using a script. But while the script reads from the main MongoDB database (on the HDD), the IO wait time on the SSD rises sharply (by a factor of 2-5), for both reads and writes.

Because of the higher IO wait time on the SSD, the service starts to lag, which is unacceptable. Why does reading from the HDD (MongoDB) affect the SSD so much, and how can this be avoided?
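For context, the gradual copy described above could be structured roughly like the sketch below. It is not the script used in the question: `throttled_copy`, `batched`, and the `write_batch` callback are hypothetical names, and the source and destination are plain Python iterables and callables rather than real MongoDB clients. The idea is only to read in small batches and pause between them so the HDD is never read at full speed.

```python
import time
from itertools import islice
from typing import Callable, Iterable, Iterator, List, TypeVar

T = TypeVar("T")


def batched(items: Iterable[T], size: int) -> Iterator[List[T]]:
    """Yield consecutive lists of at most `size` items."""
    it = iter(items)
    while True:
        batch = list(islice(it, size))
        if not batch:
            return
        yield batch


def throttled_copy(
    source: Iterable[T],
    write_batch: Callable[[List[T]], None],  # hypothetical destination writer
    batch_size: int = 100,
    pause_s: float = 0.5,
    sleep: Callable[[float], None] = time.sleep,
) -> int:
    """Copy items in batches, pausing after each batch to limit read load."""
    copied = 0
    for batch in batched(source, batch_size):
        write_batch(batch)
        copied += len(batch)
        sleep(pause_s)  # give the disk time to serve other requests
    return copied
```

In a real setup, `source` would be a cursor over the main database and `write_batch` a bulk insert into the backup; `batch_size` and `pause_s` would have to be tuned against the observed IO wait time.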

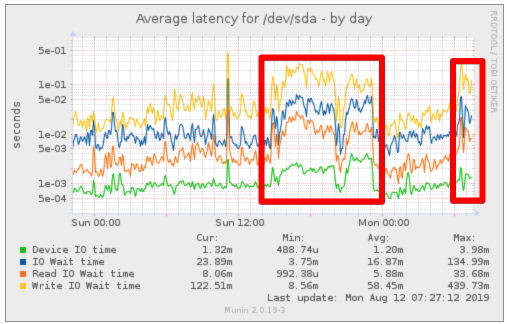

I am attaching a screenshot of the IO wait time graph for the SSD. The areas marked in red are the periods when data was being read from the HDD. The scale is logarithmic.
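A graph like this can also be approximated without a monitoring system. The sketch below assumes a Linux server (the question does not name the OS) and uses the per-device counters in `/proc/diskstats`: the average wait per request over an interval is the change in milliseconds spent on I/O divided by the change in completed I/Os. The function names `parse_diskstats` and `avg_wait_ms` are illustrative, not from any tool.

```python
from typing import Dict, Tuple

# (completed reads + writes, milliseconds spent on reads + writes)
Counters = Tuple[int, int]


def parse_diskstats(text: str) -> Dict[str, Counters]:
    """Parse the contents of /proc/diskstats into per-device counters."""
    stats: Dict[str, Counters] = {}
    for line in text.splitlines():
        fields = line.split()
        if len(fields) < 11:
            continue  # skip blank or unexpected lines
        name = fields[2]
        ios = int(fields[3]) + int(fields[7])    # reads + writes completed
        ms = int(fields[6]) + int(fields[10])    # ms reading + ms writing
        stats[name] = (ios, ms)
    return stats


def avg_wait_ms(before: Dict[str, Counters],
                after: Dict[str, Counters],
                device: str) -> float:
    """Average time per completed request between two samples, in ms."""
    d_ios = after[device][0] - before[device][0]
    d_ms = after[device][1] - before[device][1]
    return d_ms / d_ios if d_ios else 0.0
```

To use it, read `/proc/diskstats` twice a few seconds apart, parse both samples, and compare the result for the SSD device (for example `sda`, an assumed name) with and without the HDD copy running.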

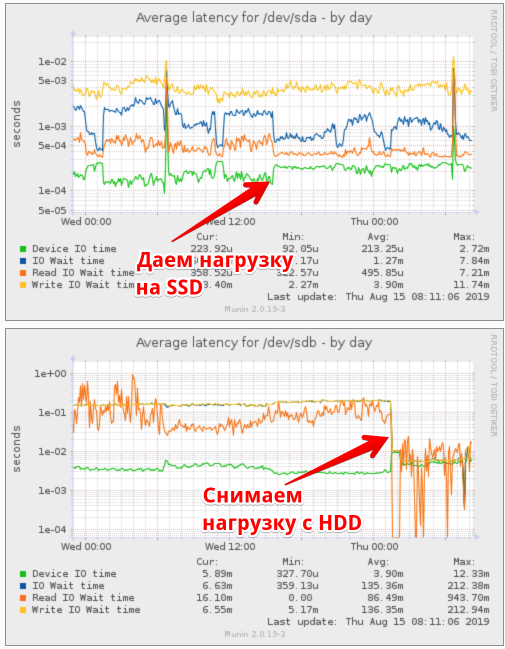

After some experiments, we seem to have found the cause. The disks affect each other's IO wait time when the controller runs in IDE mode. On the backup server (the servers are identical in configuration), we reproduced the load profile on two disks connected to the same controller, but in AHCI mode, and saw no mutual influence on IO wait time.
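One way to check which mode a controller is running in, assuming Linux with the `lspci` utility installed, is to look at the kernel driver bound to it: `ahci` indicates AHCI mode, while legacy IDE mode usually shows a different driver (for example `ata_piix` on many Intel chipsets). The sketch below parses the text output of `lspci -k`; the function names are illustrative, and the list of device classes is an assumption that may need extending for other hardware.

```python
import subprocess
from typing import Dict

# lspci class names that usually denote disk controllers (assumed list)
STORAGE_CLASSES = ("SATA controller", "IDE interface", "RAID bus controller")


def storage_controller_drivers(lspci_output: str) -> Dict[str, str]:
    """Map each storage controller line from `lspci -k` to its kernel driver."""
    drivers: Dict[str, str] = {}
    current = None
    for line in lspci_output.splitlines():
        if not line.strip():
            current = None
            continue
        if not line[0].isspace():
            # A new device entry starts at column 0.
            is_storage = any(c in line for c in STORAGE_CLASSES)
            current = line.strip() if is_storage else None
            if current:
                drivers[current] = "(none)"
        elif current and "Kernel driver in use:" in line:
            drivers[current] = line.split(":", 1)[1].strip()
    return drivers


def read_lspci() -> str:
    """Run `lspci -k` and return its output (requires pciutils)."""
    result = subprocess.run(["lspci", "-k"], capture_output=True,
                            text=True, check=True)
    return result.stdout
```

Calling `storage_controller_drivers(read_lspci())` on both servers should show whether the main server's controller is bound to a different driver than the backup server's.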

Now we just have to wait for an opportunity to restart the main server and enable AHCI mode there as well.
