Varnish caching: keep the cache fresh with a crawler, or invalidate it?

We have a high-traffic store running on Magento (EE).

The existing infrastructure (on AWS) looks like this:

1.1 Nginx - accepts incoming HTTP and HTTPS connections and directs traffic to Varnish

1.2 Varnish - on the same server, acts as a load balancer and front-end cache

2. Many nodes (Apache, PHP) on which Magento itself runs

3. Sessions in memcache; backend cache in memcache + Redis (Redis is a separate EC2 instance, memcache is ElastiCache)

4. Admin server - a dedicated node for the admin panel; requests go to it directly, bypassing Varnish.

Currently, cache invalidation on this infrastructure works as follows:

For each cacheable URL, the backend sends a special X-Cache-Tags header listing all the tags for that page. For example, for a catalog page with 15 products the header will contain something like: CAT_12, PRO_1, PRO_2, etc.

Then, when we change something in the admin panel, for example product No. 1 with the PRO_1 tag, a purge (ban) request is sent to the Varnish server (asynchronously, of course), and Varnish invalidates the cache for that object, that is, for every URL whose X-Cache-Tags header contained the value PRO_1.
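To make the tag-based invalidation concrete, here is a minimal Python sketch of the matching logic. It is not the actual Magento or Varnish code: the `cache` dict is a toy stand-in for Varnish storage, the function names are made up for illustration, and it assumes the tags in X-Cache-Tags are comma-separated as in the example above. The one detail worth noticing is that the tag is matched as a whole entry, so a ban on PRO_1 does not also hit PRO_10.

```python
import re


def tag_ban_pattern(tag: str) -> str:
    """Regex matching `tag` only as a whole entry of a comma-separated header."""
    return r"(^|,\s*)" + re.escape(tag) + r"(\s*,|$)"


def ban_by_tag(cache: dict, tag: str) -> list:
    """Drop every cached URL whose X-Cache-Tags value lists `tag`.

    `cache` maps URL -> X-Cache-Tags header value (a stand-in for Varnish).
    Returns the URLs that were dropped.
    """
    pattern = re.compile(tag_ban_pattern(tag))
    banned = [url for url, tags in cache.items() if pattern.search(tags)]
    for url in banned:
        del cache[url]
    return banned


cache = {
    "/catalog/12": "CAT_12, PRO_1, PRO_2",
    "/product/1": "PRO_1",
    "/product/10": "PRO_10",  # must survive a ban on PRO_1
}
print(ban_by_tag(cache, "PRO_1"))  # prints ['/catalog/12', '/product/1']
```

In Varnish itself the same pattern would presumably go into a ban expression matched against `obj.http.X-Cache-Tags`; how the existing setup builds that expression is not shown in the question.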

But there is one catch.

Once or twice a day we have to flush the entire cache because of a product import. Then, for the next 20-40 minutes, user requests go through to the backend and refill about 80% of the cache. This causes load spikes on the backend, and users wait 10-30 seconds for a page to load. Most importantly, the remaining 20% are the most resource-intensive pages (execution time measured in minutes) and are requested infrequently, so it can take days for them to get back into the cache. Meanwhile, the next day we flush the entire cache again, and the whole cycle repeats.

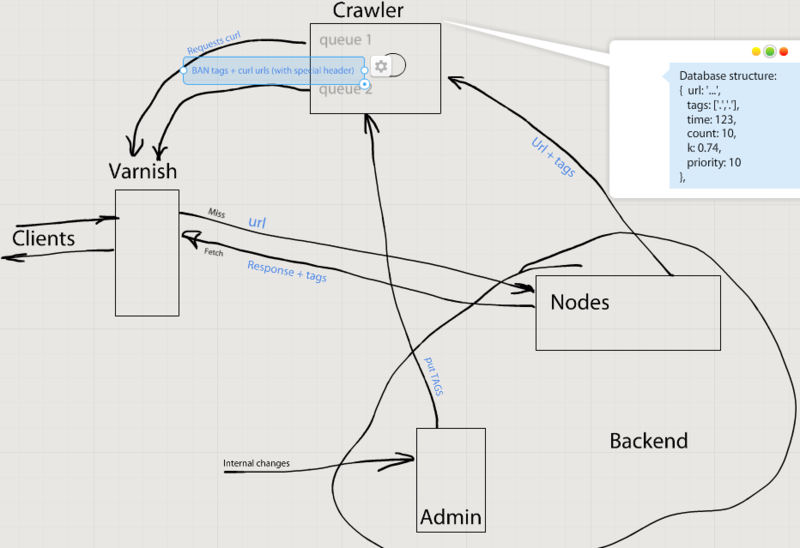

What I actually want to know: is it worth digging in the direction of refreshing the cache with a crawler? That is, make the cache "eternal" and keep it up to date with a crawler.

So far, this picture comes to mind:

How do you solve such issues (and with what technologies)?
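The "eternal cache plus crawler" idea from the question could be sketched roughly as below. This is only an illustration under several assumptions: the `X-Refresh` header is hypothetical and the VCL would have to honor it (for example by setting `req.hash_always_miss = true` for requests from the crawler's address), and the per-URL cost figures are assumed to come from somewhere like access logs. The sketch refreshes the most expensive pages first, since those are the ones that hurt most on a cold cache, and keeps concurrency low so the crawler itself does not cause a load spike.

```python
import urllib.request
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, Dict, Iterable, Optional, Tuple

REFRESH_HEADER = "X-Refresh"  # hypothetical header, must be handled in VCL


def http_refresh(url: str) -> int:
    """Request `url` with the refresh header and return the HTTP status."""
    req = urllib.request.Request(url, headers={REFRESH_HEADER: "1"})
    with urllib.request.urlopen(req, timeout=300) as resp:
        return resp.status


def warm(
    urls_with_cost: Iterable[Tuple[str, float]],
    fetch: Callable[[str], int] = http_refresh,
    workers: int = 2,
) -> Dict[str, Optional[int]]:
    """Refresh URLs, most expensive first, with a small fixed concurrency.

    Returns URL -> HTTP status, or None if the request failed.
    """
    ordered = [
        url
        for url, _cost in sorted(urls_with_cost, key=lambda p: p[1], reverse=True)
    ]

    def task(url: str) -> Tuple[str, Optional[int]]:
        try:
            return url, fetch(url)
        except Exception:
            # One failing page should not stop the whole warm-up run.
            return url, None

    with ThreadPoolExecutor(max_workers=workers) as pool:
        return dict(pool.map(task, ordered))
```

A run after the product import might look like `warm([("https://shop.example/catalog/12", 95.0), ("https://shop.example/product/1", 0.4)])`, where the URLs and costs are placeholders.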

The first thing that comes to mind is block caching. Give each product its own cache entry and assemble the page via ESI.
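As a rough illustration of what block caching with ESI buys you, the Python sketch below imitates the assembly step that Varnish would perform. The block URLs, the `render` callback and the `fragment_cache` dict are all hypothetical; only the `<esi:include src="..."/>` tag is real ESI syntax. The point is that after product 1 changes, only its fragment is rendered again, while the rest of the page is assembled from cached pieces.

```python
import re
from typing import Callable, Dict

ESI_INCLUDE = re.compile(r'<esi:include\s+src="([^"]+)"\s*/>')


def assemble(
    page: str,
    fragment_cache: Dict[str, str],
    render: Callable[[str], str],
) -> str:
    """Replace each <esi:include> with its fragment, rendering only on a miss."""

    def resolve(match: "re.Match[str]") -> str:
        src = match.group(1)
        if src not in fragment_cache:
            fragment_cache[src] = render(src)  # expensive backend call
        return fragment_cache[src]

    return ESI_INCLUDE.sub(resolve, page)


rendered = []  # records which blocks actually hit the backend


def render(src: str) -> str:
    rendered.append(src)
    return f"<li>{src}</li>"


page = (
    '<ul><esi:include src="/block/product/1"/>'
    '<esi:include src="/block/product/2"/></ul>'
)
fragments: Dict[str, str] = {}
assemble(page, fragments, render)   # cold cache: both blocks are rendered
del fragments["/block/product/1"]   # product 1 was edited in the admin panel
assemble(page, fragments, render)   # only product 1 is rendered again
print(rendered)
# prints ['/block/product/1', '/block/product/2', '/block/product/1']
```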

Next, you can add a cache of static pages behind Varnish, using nginx, for example. Then you refresh that cache first and only afterwards purge the entry from Varnish. This gets rid of the problem where a client requests the page at the same time as the crawler.
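The ordering described here (refresh the second-level cache first, purge Varnish second) can be modeled with a small Python sketch. Everything in it is a toy: the two dicts stand in for Varnish and for the static cache behind it, and the class and attribute names are invented for the example. It shows that with this ordering a client request arriving right after the purge is served from the already refreshed second-level cache and never has to wait for the slow backend.

```python
from typing import Callable, Dict


class TwoTierCache:
    """Toy model: front cache (Varnish) over a static cache (e.g. nginx)."""

    def __init__(self, backend: Callable[[str], str]) -> None:
        self.backend = backend            # slow page generator
        self.static: Dict[str, str] = {}  # second-level cache behind Varnish
        self.front: Dict[str, str] = {}   # front cache
        self.slow_client_requests = 0     # client requests that hit the backend

    def client_get(self, url: str) -> str:
        """Serve a client: front cache, then static cache, then backend."""
        if url in self.front:
            return self.front[url]
        if url not in self.static:
            self.slow_client_requests += 1
            self.static[url] = self.backend(url)
        self.front[url] = self.static[url]
        return self.front[url]

    def refresh(self, url: str) -> None:
        """Crawler path: regenerate the static copy, then purge the front."""
        self.static[url] = self.backend(url)  # 1. refresh behind Varnish
        self.front.pop(url, None)             # 2. only now purge Varnish


version = {"n": 1}
cache = TwoTierCache(lambda url: f"{url} v{version['n']}")
cache.client_get("/product/1")        # cold start: one slow client request
version["n"] = 2                      # product import changes the page
cache.refresh("/product/1")           # crawler does the slow work
print(cache.client_get("/product/1"), cache.slow_client_requests)
# prints /product/1 v2 1
```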

A blunt question: why isn't Varnish at the front of your stack? In this scheme it should sit at the front and pass on to nginx everything that it should not serve itself.

There is a Magento module for Varnish, Turpentine, that renders blocks via ESI.

All exceptions are configured in the admin panel for this module; the module then generates a new config, sends it directly to Varnish, and also saves it to a file.
