

Is there anything like ReGet or FlashGet for Linux?

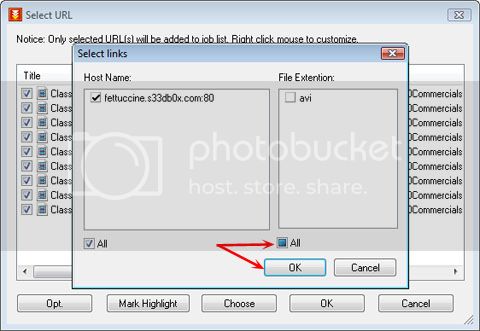

Under Windows there were the ReGet and FlashGet download managers. On any page in the browser you could right-click to build a list of all the links on that page, select the ones matching a given template (for example, www.foo.com/bar/*/text.html), and download them all.

But I haven't found anything that convenient for Linux.

Does anyone know of such a tool?

Thank you!

Task:

Download all .pdf files linked from `http://www.advancedlinuxprogramming.com/alp-folder`.

Solution:

```shell
wget -r -l1 -t1 -nd -N -np -A.pdf -erobots=off www.advancedlinuxprogramming.com/alp-folder
```

Comments:

`-r` Recursive: download the page `http://www.advancedlinuxprogramming.com/alp-folder` and follow its links;

`-l1` Recursion depth of 1: follow only the links that appear directly on the page `http://www.advancedlinuxprogramming.com/alp-folder`;

`-t1` Make only one attempt to download each file;

`-nd` Do not create directories; save everything to the current directory (if two files have the same name, a numeric suffix such as `.1` is appended);

`-N` Timestamping: do not re-download a file unless the remote copy is newer than the local one;

`-np` Do not ascend to the parent directory when following links;

`-A.pdf` Keep only files whose names end in `.pdf`;

`-erobots=off` Run the `robots=off` command, i.e. ignore the site's `robots.txt` exclusions.
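As far as I know, `-A` patterns are matched against the file name only, so they can't express a template with a wildcard in the middle of the path, like www.foo.com/bar/*/text.html from the question. Here is a rough sketch of the "list all links, filter by template, download" workflow as a shell pipeline. It assumes the links are absolute URLs in double-quoted `href="..."` attributes (relative links, single quotes and uppercase `HREF` are not handled). `extract_links` and `filter_links` are hypothetical helper names, not part of wget.

```shell
# extract_links: read HTML on stdin and print each href value on its own line.
# Assumption: links look like href="absolute-URL" (double quotes, lowercase).
extract_links() {
  grep -oE 'href="[^"]*"' | sed -e 's/^href="//' -e 's/"$//'
}

# filter_links PATTERN: print only the URLs from stdin that match a shell glob.
# In a case pattern, * also matches '/', so 'http://www.foo.com/bar/*/text.html'
# accepts any path between /bar/ and /text.html.
filter_links() {
  pattern=$1
  while IFS= read -r url; do
    case $url in
      $pattern) printf '%s\n' "$url" ;;
    esac
  done
}

# Usage (needs network access; "wget -i -" reads the URL list from stdin):
#   wget -q -O - 'http://www.foo.com/bar/' \
#     | extract_links \
#     | filter_links 'http://www.foo.com/bar/*/text.html' \
#     | wget -i -
```

Using a `case` glob keeps the template syntax the same as the one in the question. If you need stricter matching (for example, exactly one path segment for `*`), a `grep -E` regular expression would be the next step.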
