
Need help: how do I properly configure a storage system to work with a blade server?

Good day!

Comrades, we need your help setting up server hardware, namely connecting a storage system to a blade server. I should say up front that I have read countless resources over the past month, so I am asking for help in "real time".

Unfortunately, I have never dealt with anything like this before, but I have been given the task of putting the equipment into operation, and I have a lot of questions.

Input data:

At work, we purchased new hardware: a Fujitsu DX100 S4 storage system. It includes two sets of drives, SSD and SAS, forming a fast array and a slow array respectively. The storage system has 8 Fibre Channel ports: 4 on each of its two controllers (hereinafter CM#X), divided into 2 groups (hereinafter CA#X) of 2 ports each (hereinafter Port#X).

All this was purchased to replace a faulty storage system that was taken out of service long ago because of failed disks.

The new storage system is supposed to work together with an HP C3000 blade server with three blades. ESXi is installed on each blade, and a VMware cluster runs across them.

The blade server has a Brocade 8/12c SAN switch installed, with four licensed Fibre Channel ports.

What we have:

1. SAN switch: connected via two FC ports to the two storage controllers (CM#0 and CM#1).

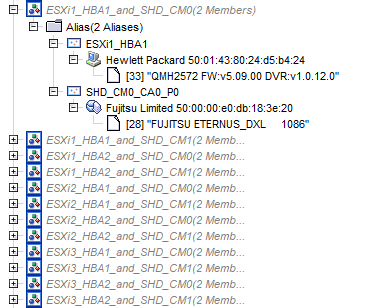

The zoning is done as follows:

The first HBA of the first blade is zoned with the first storage port.

The first HBA of the first blade is zoned with the second storage port.

The second HBA of the first blade is zoned with the first storage port.

...

The second HBA of the third blade is zoned with the second storage port.

12 zones in total (3 blades × 2 HBAs × 2 storage ports). Zone members are specified by WWN. All zones are collected in a single configuration on the SAN switch.
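The zoning scheme above (every blade HBA paired with every storage port, one initiator and one target per zone) can be generated instead of typed by hand. Below is a minimal sketch assuming Brocade FOS CLI syntax (`zonecreate`, `cfgcreate`, `cfgsave`, `cfgenable`); the WWPNs, zone names and the config name `cfg_san` are hypothetical placeholders, not the real values from this setup.

```python
from itertools import product

# Hypothetical WWPNs: replace with the real ones from the blade HBAs and the DX100 ports.
BLADE_HBAS = {
    "blade1": ["10:00:00:00:00:00:01:01", "10:00:00:00:00:00:01:02"],
    "blade2": ["10:00:00:00:00:00:02:01", "10:00:00:00:00:00:02:02"],
    "blade3": ["10:00:00:00:00:00:03:01", "10:00:00:00:00:00:03:02"],
}
STORAGE_PORTS = {
    "cm0": "50:00:00:00:00:00:00:01",
    "cm1": "50:00:00:00:00:00:00:02",
}


def build_zones(hbas, targets):
    """One zone per (initiator, target) pair: single-initiator, single-target WWN zoning."""
    zones = {}
    for blade, wwpns in hbas.items():
        for (i, init), (port, tgt) in product(enumerate(wwpns, start=1), targets.items()):
            zones[f"z_{blade}_hba{i}_{port}"] = [init, tgt]
    return zones


def brocade_commands(zones, cfg_name="cfg_san"):
    """Brocade FOS commands that create the zones, group them in one config and activate it."""
    cmds = [f'zonecreate "{name}", "{"; ".join(members)}"' for name, members in zones.items()]
    cmds.append(f'cfgcreate "{cfg_name}", "{"; ".join(zones)}"')
    cmds.append("cfgsave")
    cmds.append(f'cfgenable "{cfg_name}"')
    return cmds


if __name__ == "__main__":
    for cmd in brocade_commands(build_zones(BLADE_HBAS, STORAGE_PORTS)):
        print(cmd)
```

With 3 blades, 2 HBAs each and 2 storage ports this yields the same 12 zones as described; because members are WWPNs, moving a cable to another switch port does not change the zoning.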

This raises the first question: is it really worth zoning by WWN, or should it be hard zoning by port?

2. Storage:

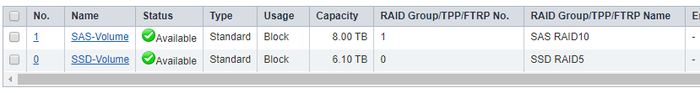

a) It has two partitions (SSD-volume and SAS-volume):

The SSDs are configured as RAID 5, with a capacity of 6.10 TB.

The SAS drives are configured as RAID 10, with a capacity of 8.00 TB.
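For reference, the usable capacity of each RAID level follows simple arithmetic: RAID 5 loses one drive's worth of space to parity, RAID 10 loses half of the drives to mirroring. A minimal sketch; the drive counts and sizes in the example are hypothetical, since the post does not list them, and the capacity the array reports can differ from this raw arithmetic (decimal vs binary units, metadata overhead).

```python
def usable_tb(level: str, drives: int, drive_tb: float) -> float:
    """Usable capacity of a single RAID group, ignoring formatting and metadata overhead."""
    if level == "raid5":
        if drives < 3:
            raise ValueError("RAID 5 needs at least 3 drives")
        return (drives - 1) * drive_tb  # one drive's worth of capacity holds parity
    if level == "raid10":
        if drives < 4 or drives % 2:
            raise ValueError("RAID 10 needs an even number of drives, at least 4")
        return drives // 2 * drive_tb  # half of the drives hold mirror copies
    raise ValueError(f"unsupported RAID level: {level}")


if __name__ == "__main__":
    # Hypothetical groups, not the actual DX100 configuration.
    print(usable_tb("raid5", 5, 1.0))
    print(usable_tb("raid10", 6, 1.0))
```

The trade-off is the usual one: RAID 5 gives more usable space per drive, RAID 10 avoids the parity write penalty.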

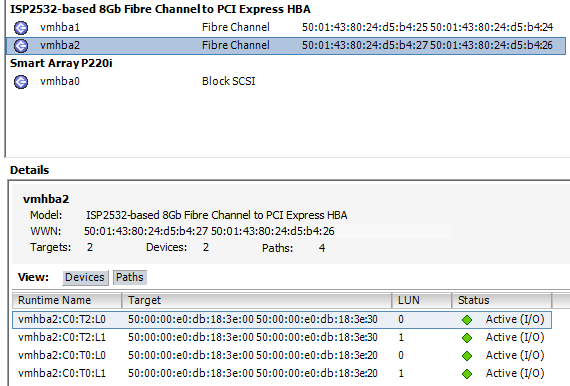

b) The partitions are combined into a LUN group of two LUNs and presented to the server as follows:

Column explanations:

1. Host Group - names of HBA port groups from the Host column corresponding to each of the blades.

2. Host - HBA adapters for each of the blades.

3. CA Port Group - names of storage controller port groups from the CA Port tab.

4. CA Port - storage ports connected to the server, where CM#X is a controller, CA#X is a group of ports on that controller, and Port#X is a port within that group.

5. LUN Group - a group of LUNs that need to be presented to the Blade server.

6. Host Response - host operating mode, set to Active/Active (configured manually).

This brings up the second question: is it correct to present the LUNs to each blade separately, or could everything have been put into a single group?
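Whichever layout is chosen (one host group per blade or one group for all blades), a shared VMware datastore needs every blade to see the same volumes, and it is a common best practice that they appear under the same LUN numbers on every host. A minimal sketch of that check, modelling ETERNUS-style host affinity (host group + CA port group + LUN group) as plain Python data; the class, functions, group names and WWPN strings are hypothetical, not an ETERNUS API.

```python
from dataclasses import dataclass


@dataclass
class HostAffinity:
    host_group: str          # hypothetical host group name
    hosts: list[str]         # HBA WWPNs in this host group
    ca_port_group: str       # storage port group the hosts reach the LUNs through
    lun_group: dict[int, str]  # host LUN number -> storage volume name


def presented_luns(affinities):
    """Map each HBA WWPN to the set of (LUN number, volume) pairs presented to it."""
    seen = {}
    for a in affinities:
        for wwpn in a.hosts:
            seen.setdefault(wwpn, set()).update(a.lun_group.items())
    return seen


def consistent(affinities):
    """True if every HBA sees exactly the same volumes under the same LUN numbers."""
    views = list(presented_luns(affinities).values())
    return all(view == views[0] for view in views)


LUNS = {0: "SSD-volume", 1: "SAS-volume"}

# Option A: one host group per blade, all sharing the same LUN group.
per_blade = [
    HostAffinity(f"blade{n}", [f"hba{n}a", f"hba{n}b"], "ca_ports", LUNS)
    for n in (1, 2, 3)
]
# Option B: all six HBAs in a single host group.
single_group = [
    HostAffinity("all_blades", [f"hba{n}{s}" for n in (1, 2, 3) for s in "ab"], "ca_ports", LUNS)
]
```

Both layouts present identical views to every HBA, so the choice between them is mostly about management: separate groups make it possible to present a LUN to only one blade later.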

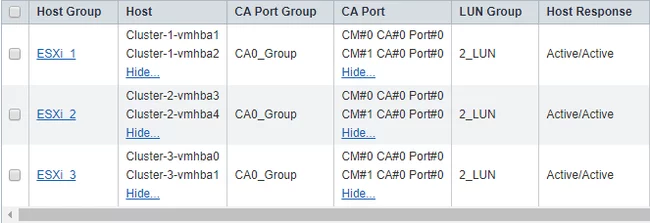

3. VMware.

VMware sees both LUNs.

It looks like this:

Each vmhba sees both LUNs twice (...3e:20 and ...3e:30 are the WWNs of the storage ports). The path selection policy is set to Round Robin for each LUN.
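Seeing each LUN twice per vmhba is what this zoning should produce: each blade has 2 HBAs, each zoned to 2 storage ports, so ESXi should list 2 × 2 = 4 paths per LUN, or 8 paths for the two LUNs. A small sketch that enumerates the expected paths in ESXi's runtime-name format (`vmhbaN:C0:Tt:Ll`); the adapter names `vmhba1`/`vmhba2` and the target and LUN numbers are assumptions, since ESXi assigns them per host.

```python
from itertools import product


def expected_paths(vmhbas, targets_per_hba, luns):
    """Runtime names ESXi would show if every HBA reaches every target port for every LUN."""
    return [
        f"{hba}:C0:T{t}:L{lun}"
        for hba, t, lun in product(vmhbas, range(targets_per_hba), luns)
    ]


paths = expected_paths(["vmhba1", "vmhba2"], 2, [0, 1])
# Group the paths by LUN to see how many the path selection policy can use per device.
per_lun = {lun: [p for p in paths if p.endswith(f":L{lun}")] for lun in (0, 1)}

if __name__ == "__main__":
    for lun, lun_paths in per_lun.items():
        print(lun, lun_paths)
```

If a blade shows fewer than 4 paths for a LUN, a zone, cable or host affinity entry is likely missing for that blade.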

This raises the third question: is VMware configured correctly?

Which brings us to the actual problem.

Problem:

Previously, the path selection policy in VMware was set to Fixed. Unfortunately, no record was kept of which paths were selected. Everything worked correctly for a couple of days with a few lightweight virtual machines (one Ubuntu, two Windows 7). On the evening of the third day, I started migrating a Windows Server 2008 R2 VM to the storage system. No other configuration changes were made.

The next day, complaints began to pour in that everything running on the storage system was slowing down.

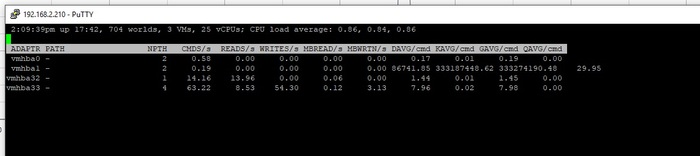

Running esxtop over SSH on one of the blades and switching to the disk adapter view ('d') showed huge DAVG, KAVG, GAVG and QAVG values.
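For reading those esxtop counters: GAVG (latency seen by the guest) is roughly DAVG (time spent in the device, i.e. HBA, fabric and array) plus KAVG (time spent in the VMkernel), and QAVG is the part of KAVG spent waiting in the kernel queue. A minimal sketch of that triage; the 25 ms DAVG and 2 ms KAVG thresholds are commonly quoted rules of thumb used here as assumptions, and the sample values are hypothetical, not the ones measured on this system.

```python
def triage(davg_ms, kavg_ms, qavg_ms, davg_limit=25.0, kavg_limit=2.0):
    """Return (approximate GAVG, list of likely latency sources); thresholds are rules of thumb."""
    gavg_ms = davg_ms + kavg_ms  # guest latency ~= device latency + VMkernel latency
    sources = []
    if davg_ms > davg_limit:
        sources.append("device: HBA/fabric/array is slow to complete I/O")
    if kavg_ms > kavg_limit:
        # QAVG is the queuing part of KAVG; if it is high, I/O is waiting for a free queue slot.
        if qavg_ms > kavg_limit:
            sources.append("queue: I/O waiting in the VMkernel queue (queue depth exhausted)")
        else:
            sources.append("kernel: VMkernel overhead not explained by queuing")
    return gavg_ms, sources or ["within rule-of-thumb limits"]


if __name__ == "__main__":
    print(triage(40, 10, 9))  # hypothetical overloaded sample
    print(triage(5, 1, 0))    # hypothetical healthy sample
```

High DAVG alone points at the array side; high KAVG together with high QAVG means I/Os are piling up in front of the device queue on the host.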

The policy was then changed to Round Robin and some paths were disabled. As a stopgap, the virtual machines were hastily migrated off the storage system.
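When the path selection policy is changed by hand on several blades, it is easy to end up with different settings on different hosts. A sketch that builds the same `esxcli` commands for every LUN so they can be run identically on each blade; `esxcli storage nmp device set --device ... --psp VMW_PSP_RR` and `esxcli storage nmp device list --device ...` are standard ESXi commands, while the `naa.` device IDs below are hypothetical placeholders for the real LUN identifiers.

```python
# Hypothetical device IDs: take the real ones from `esxcli storage nmp device list` on a blade.
DEVICES = [
    "naa.600000000000000000000000000000a1",
    "naa.600000000000000000000000000000a2",
]


def rr_commands(devices):
    """esxcli commands that set Round Robin on each device and then show the resulting config."""
    cmds = []
    for dev in devices:
        cmds.append(f"esxcli storage nmp device set --device {dev} --psp VMW_PSP_RR")
        cmds.append(f"esxcli storage nmp device list --device {dev}")
    return cmds


if __name__ == "__main__":
    for cmd in rr_commands(DEVICES):
        print(cmd)
```

Running the same list on all three blades keeps the policy identical across the cluster, which makes esxtop numbers from different hosts comparable.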

At the moment the storage system more or less works, but it is not clear why.

After a month of trying to set this up and reading manuals, I no longer have a clear idea of how all this is supposed to work.

I would like advice on how to approach setting up a storage system with a blade server when there is only one SAN switch.

Any help in choosing the right approach is appreciated! Thank you!


Well, you've written a whole novel here; I didn't read all of it, or very carefully.

1. If you want the hassle of port zoning and constantly writing down what is plugged in where, you can zone by port (your security officer will be happy, but he's an idiot, since security is what traffic encryption and GDPR are for). Otherwise, keep doing what you did. In practice, I can tell you it is much easier to zone by WWN, set it up and forget about it.

2. It all depends on your goals, how the blades are grouped and what runs on them. If they are identical systems serving the same purpose, put them all in one group. If they are heterogeneous blades used for different purposes, present the LUNs to each one separately.

3. Most likely it was not the storage system that slowed down (to be sure, you would need the performance graphs from the storage system itself); rather, the datastore was overloaded.
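One way to see how a datastore becomes overloaded without the array itself being at fault is Little's law: outstanding I/Os = IOPS × latency. Each LUN has a limited device queue depth on the host; once the outstanding I/Os exceed it, the extra requests wait in the VMkernel, which shows up as rising KAVG/QAVG. A minimal sketch; the queue depth of 32 and the workload numbers are hypothetical, not measured values from this system.

```python
def outstanding_ios(iops, latency_ms):
    """Little's law: average number of I/Os in flight = arrival rate x time in system."""
    return iops * latency_ms / 1000.0


def max_iops(queue_depth, service_time_ms):
    """Upper bound on IOPS one device queue can sustain at a given per-I/O service time."""
    return queue_depth * 1000.0 / service_time_ms


def kernel_backlog(iops, latency_ms, queue_depth):
    """I/Os that do not fit in the device queue and therefore wait in the VMkernel."""
    return max(0.0, outstanding_ios(iops, latency_ms) - queue_depth)


if __name__ == "__main__":
    print(max_iops(32, 2.0))             # hypothetical: queue depth 32, 2 ms per I/O
    print(kernel_backlog(5000, 10, 32))  # hypothetical: 5000 IOPS observed at 10 ms
```

For example, at a 2 ms service time a queue depth of 32 caps one LUN at about 16,000 IOPS; if the combined load on one datastore pushes past that, I/Os queue on the host even though each individual I/O is served quickly by the array.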
