
How do I reclaim free space on an SR in XenServer 6.2?

Apologies for yet another question about reclaiming space after deleting virtual machines and snapshots. I have read the existing threads here and on the Citrix forums, searched Google, and struggled with the problem for two days. I got some results, but not what I expected.

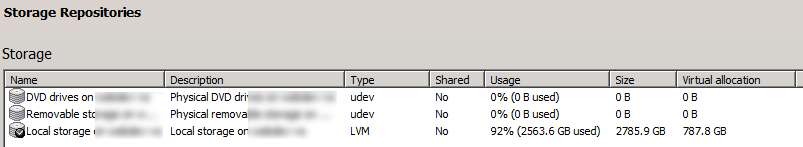

Setup: XenServer 6.2, with an LVM-based SR of 3 TB.

That raises my first question. Usage and Size are clear, but what is Virtual allocation? I read somewhere that it is space reserved for snapshots, but I'm not sure, and I can't find where I read it.
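Here is a minimal Python 3 sketch of how the three figures relate. It rests on an assumption that the question does not confirm: that XenCenter's Usage and Virtual allocation are the SR fields `physical-utilisation` and `virtual-allocation` divided by `physical-size`. Each field can be read with `xe sr-param-get uuid=<sr-uuid> param-name=<field>`. The function name `sr_capacity` is hypothetical.

```python
"""Reproduce XenCenter-style SR capacity figures from raw byte counts.

Assumption (not confirmed by the question): the inputs come from
  xe sr-param-get uuid=<sr-uuid> param-name=physical-size
  xe sr-param-get uuid=<sr-uuid> param-name=physical-utilisation
  xe sr-param-get uuid=<sr-uuid> param-name=virtual-allocation
and XenCenter divides the last two by physical-size.
"""

GIB = 1024 ** 3


def sr_capacity(physical_size, physical_utilisation, virtual_allocation):
    """Return usage figures for an SR, given its capacity fields in bytes."""
    return {
        "size_gib": physical_size / GIB,
        "used_gib": physical_utilisation / GIB,
        "free_gib": (physical_size - physical_utilisation) / GIB,
        "usage_pct": 100.0 * physical_utilisation / physical_size,
        # Declared (virtual) disk sizes relative to the SR size. This can
        # exceed 100% because it sums declared sizes, not space consumed.
        "virtual_allocation_pct": 100.0 * virtual_allocation / physical_size,
    }
```

For example, consider an SR with 4 GiB of physical space, 3 GiB of it used, whose VDIs declare 6 GiB in total. It would report 75% usage and 150% virtual allocation.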

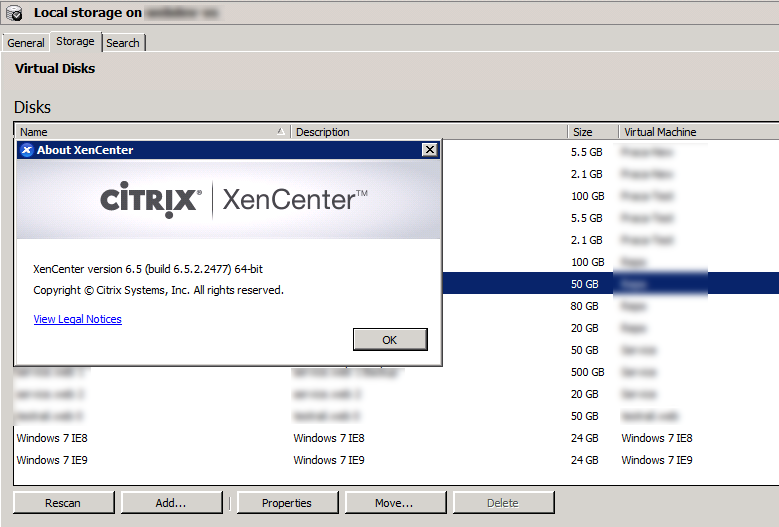

I removed unneeded virtual machines, moved some of them to other disks, and deleted unneeded virtual disks through the client.

No free space was reclaimed, so I started digging.

I then removed unneeded LVM logical volumes that apparently remained after the VMs were deleted. I found them with lvscan, where they were flagged as inactive:

inactive '/dev/VG_XenStorage-04ff2444-48bf-33f5-2afd-fa33abaf5228/VHD-94d0fa64-f1e2-4b7f-b4d0-37aaee985ba7' [8.00 MB] inherit

inactive '/dev/VG_XenStorage-04ff2444-48bf-33f5-2afd-fa33abaf5228/VHD-8df726ff-3193-47cf-9551-0b855726bcb1' [50.11 GB] inherit

The list contains many 8 MB volumes. Where did they come from? I didn't create them deliberately.
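Here is a minimal Python 3 sketch of a more cautious orphan check. It assumes the lvscan line format shown above and that each VHD-<uuid> volume is named after its VDI UUID. As far as we understand LVM-based SRs, inactive alone only means the volume is not currently activated on this host, and detached VDIs are normally inactive. Comparing against the VDI UUIDs that XAPI reports is therefore a more reliable test. Here those UUIDs are assumed to come from `xe vdi-list sr-uuid=<sr-uuid> params=uuid --minimal`, which prints them comma-separated. The helper names are hypothetical.

```python
"""Cross-check VHD-* logical volumes against the VDIs XAPI knows about."""

import re

# Matches lvscan lines such as:
#   inactive '/dev/VG_XenStorage-<sr>/VHD-<vdi>' [8.00 MB] inherit
# Newer LVM versions print "MiB"/"GiB" and may prefix "<"; both are accepted.
LVSCAN_LINE = re.compile(
    r"^\s*(ACTIVE|inactive)\s+'[^']*/VHD-([0-9a-f-]+)'\s+\[<?([\d.]+) ([KMGT])i?B\]"
)
UNIT = {"K": 1024, "M": 1024 ** 2, "G": 1024 ** 3, "T": 1024 ** 4}


def parse_lvscan(text):
    """Yield (vdi_uuid, is_active, size_in_bytes) for each VHD-* volume."""
    for line in text.splitlines():
        m = LVSCAN_LINE.match(line)
        if m:
            state, uuid, number, unit = m.groups()
            yield uuid, state == "ACTIVE", float(number) * UNIT[unit]


def find_orphans(lvscan_text, known_vdi_csv):
    """Return {uuid: size in bytes} for VHD-* volumes XAPI does not list.

    known_vdi_csv is assumed to be the comma-separated output of
    `xe vdi-list sr-uuid=<sr-uuid> params=uuid --minimal`.
    """
    known = {u.strip() for u in known_vdi_csv.split(",") if u.strip()}
    return {
        uuid: size
        for uuid, _active, size in parse_lvscan(lvscan_text)
        if uuid not in known
    }
```

Volumes other than VHD-* (such as the SR's own metadata volume) are ignored. Anything the check reports still deserves a manual look, for example with lvdisplay, before removal.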

As a result, about 200 GB was freed. pvs showed it, and so did the client after a refresh. The screenshots were taken once those 200 GB had been freed; before that the SR was 99% full.

The VM disks add up to about 2 TB in total.

I thought the remaining space might be taken up by snapshots, so I ran:

xe vdi-list is-a-snapshot=true | grep name-label

It turned out there is one snapshot on each of two machines, and their disks are 10-20 GB. They cannot account for the rest of the space.

In total, about 500 GB is missing. Moving the data to another SR is not an option.
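One way to narrow down the missing space is to compare the SR's reported usage with the sum of physical-utilisation over every VDI that XAPI lists on it. Here is a minimal Python 3 sketch of that comparison. It assumes the text comes from `xe vdi-list sr-uuid=<sr-uuid> params=uuid,physical-utilisation` and follows xe's usual layout: `name ( RO): value` lines, with records separated by blank lines. It also assumes `sr_used` is the SR's physical-utilisation in bytes. The function names are hypothetical. Some gap is expected from SR-level metadata, so only a large difference points to space no VDI accounts for.

```python
"""Compare the space XAPI attributes to VDIs with the SR's reported usage."""

import re

# One "name ( RO): value" field line; the mode tag may be RO, RW, MRW, SRO...
FIELD = re.compile(r"^\s*([\w-]+)\s*\(\s*\w+\)\s*:\s*(.*)$")


def parse_records(text):
    """Split xe list output into a list of {field: value} dicts."""
    records, current = [], {}
    for line in text.splitlines():
        m = FIELD.match(line)
        if m:
            current[m.group(1)] = m.group(2).strip()
        elif not line.strip() and current:
            records.append(current)  # a blank line ends the current record
            current = {}
    if current:
        records.append(current)
    return records


def unaccounted_bytes(vdi_list_text, sr_used):
    """Return SR used space not covered by any listed VDI's physical-utilisation."""
    attributed = sum(
        int(r["physical-utilisation"]) for r in parse_records(vdi_list_text)
    )
    return sr_used - attributed
```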

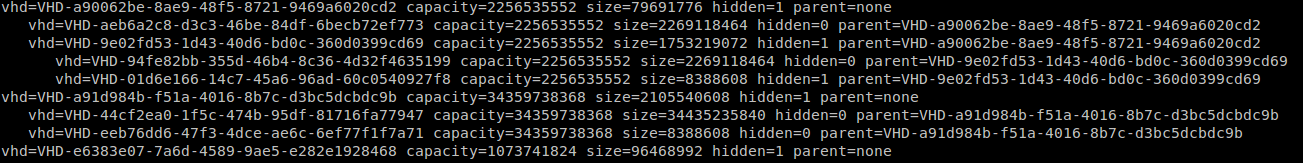

More interesting information can be obtained with this command:

vhd-util scan -f -m "VHD-*" -l "VG_XenStorage-04ff2444-48bf-33f5-2afd-fa33abaf5228" -p

Does anyone know how to interpret its output?
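Here is a minimal Python 3 sketch for reading the vhd-util scan output. It assumes, without confirmation from the question, that each line has the form vhd=VHD-<uuid> capacity=<bytes> size=<bytes> hidden=<0|1> parent=<name|none>, with children indented under their parent. The sketch lists hidden VHDs that no other VHD uses as a parent. As we understand VHD chains, hidden nodes normally exist only as parents of other nodes. A hidden node with no children is therefore a candidate for space the garbage collector has not reclaimed. The function names are hypothetical.

```python
"""Find childless hidden VHDs in `vhd-util scan ... -p` output."""


def parse_scan(text):
    """Return {vhd name: {field: value}} from key=value tokens on each line."""
    nodes = {}
    for line in text.splitlines():
        fields = dict(
            token.split("=", 1) for token in line.split() if "=" in token
        )
        if "vhd" in fields:
            nodes[fields["vhd"]] = fields
    return nodes


def hidden_leaves(text):
    """Return {vhd name: size in bytes} for hidden VHDs that have no children."""
    nodes = parse_scan(text)
    parents = {n.get("parent") for n in nodes.values()}
    return {
        name: int(n["size"])
        for name, n in nodes.items()
        if n.get("hidden") == "1" and name not in parents
    }
```

A hidden node that still has children is a legitimate base copy and is not reported.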

Any thoughts on where to look for the missing space? Thanks for the discussion.

UPD: Following Argenon's recommendation, I rescanned the storage (I had already done this before). Along the way, I fixed the error with the leftover images in the storage, following the article stan.borbat.com/fix-mark-the-vdi-hidden-failed.
