
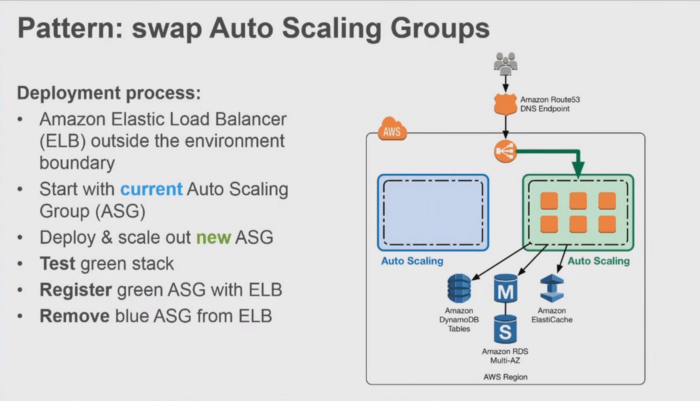

How do you set up Blue/Green deployment the "orthodox" way with Terraform on AWS?

Let me start with what led to this rather broad question. Here is the diagram:

Unlike the scheme above, I currently replace the launch configuration (pointing it at a new AMI) instead of the autoscaling group. After the new infrastructure is deployed, regardless of the value of:

`deregistration_delay = 300`

The resource:

```hcl
resource "aws_lb_target_group" "lb_tg_name" {
  name                 = "${var.env_prefix}-lb-tg"
  vpc_id               = var.vpc_id
  port                 = "80"
  protocol             = "HTTP"
  deregistration_delay = 300

  stickiness {
    type = "lb_cookie"
  }

  health_check {
    path                = "/"
    port                = "80"
    protocol            = "HTTP"
    healthy_threshold   = 3
    unhealthy_threshold = 3
    interval            = 30
    timeout             = 5
    matcher             = "200-308"
  }

  tags = {
    Name      = "app-alb-tg"
    Env       = var.env_prefix
    CreatedBy = var.created_by
  }
}
```
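For context, a target group like this only receives traffic once an ALB listener forwards to it, and that listener is where "traffic switching on the balancer" ultimately happens. A minimal sketch, assuming a hypothetical `aws_lb.app` load balancer defined elsewhere in the configuration:

```hcl
# Hypothetical: aws_lb.app is assumed to be defined elsewhere.
# Forwards all HTTP traffic on port 80 to the target group above.
resource "aws_lb_listener" "http" {
  load_balancer_arn = aws_lb.app.arn
  port              = 80
  protocol          = "HTTP"

  default_action {
    type             = "forward"
    target_group_arn = aws_lb_target_group.lb_tg_name.arn
  }
}
```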


Here is a trick from 2015, from a HashiCorp employee. You create a separate ASG with a `create_before_destroy` lifecycle for each launch configuration (LC), and switch traffic over at the load balancer.

In Terraform, you can do this:

```hcl
resource "aws_launch_configuration" "myapp" {
  name_prefix = "myapp_"
  ...
}

resource "aws_autoscaling_group" "myapp" {
  name             = "myapp - ${aws_launch_configuration.myapp.name}"
  min_elb_capacity = "${var.myapp_asg_min_size}"
  ...
  lifecycle { create_before_destroy = true }
}
```

… `min_elb_capacity`, they will not be attached to the balancer. The load balancer's health check should then mark the new instances as InService and start sending traffic to them; at the same moment, Terraform will start deleting the old ASG.
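A fuller sketch of the same pattern, in current Terraform syntax and wired to the `aws_lb_target_group.lb_tg_name` from the question. The variables `ami_id`, `instance_type` and `subnet_ids` are hypothetical; only `myapp_asg_min_size` comes from the answer. The idea is that a new AMI produces a new launch configuration, which forces a brand-new ASG that must become healthy before the old one is destroyed.

```hcl
# Hypothetical inputs; only myapp_asg_min_size appears in the answer.
variable "ami_id" {
  type = string
}

variable "instance_type" {
  type    = string
  default = "t3.micro"
}

variable "subnet_ids" {
  type = list(string)
}

variable "myapp_asg_min_size" {
  type    = number
  default = 2
}

# Changing the AMI forces a new launch configuration; name_prefix lets
# Terraform generate a unique name so old and new can coexist briefly.
resource "aws_launch_configuration" "myapp" {
  name_prefix   = "myapp-"
  image_id      = var.ami_id
  instance_type = var.instance_type

  lifecycle {
    create_before_destroy = true
  }
}

# The ASG name embeds the LC name, so a new LC means a new ASG
# rather than an in-place update of the existing one.
resource "aws_autoscaling_group" "myapp" {
  name                 = "myapp-${aws_launch_configuration.myapp.name}"
  launch_configuration = aws_launch_configuration.myapp.name
  vpc_zone_identifier  = var.subnet_ids
  min_size             = var.myapp_asg_min_size
  max_size             = var.myapp_asg_min_size * 2

  # Register instances in the target group from the question.
  target_group_arns = [aws_lb_target_group.lb_tg_name.arn]
  health_check_type = "ELB"

  # On creation, Terraform waits for this many healthy instances in the
  # target group; only then does it move on to destroying the old ASG.
  min_elb_capacity = var.myapp_asg_min_size

  lifecycle {
    create_before_destroy = true
  }
}
```

With this layout, the target group's `deregistration_delay` should govern how long the old instances drain while Terraform deletes the old ASG. AWS now recommends launch templates over launch configurations; this sketch keeps the launch configuration used in the answer.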
