Answer the question

In order to leave comments, you need to log in

Expanding a table while parsing?

Good afternoon!

Got a question. It is necessary to parse the table data from the site using BS4 in Python, but the whole problem is that the table is initially displayed not completely, but is expanded in pieces using the page slider and using the multi selector with the selection of certain parameters

How can I pull out the whole table, and not pieces?

Well, or at least how can I teach the program to flip pages and change the multi selector data?

Answer the question

In order to leave comments, you need to log in

In general, I'm talking about parsing.

The code that is visible on the page through the element inspector in the browser is the code that the browser has prepared for the user. You open the site - the browser sends a request to the server. A request consists of a method, a path, headers, and a body. After the initial request, the browser receives an HTML page from the server, which includes (usually) a lot of JS scripts, which, during execution, can create additional requests to the server for additional information. After completing all the steps, the user sees the finished result in the browser, which differs from the original request due to additional javascripts.

If an element (class, id, etc) is visible in the browser's inspector, this does not mean that the element was present at the initial request. To see the code that the browser initially receives (the same code that you get through requests, curl, etc.) - you need to press CTRL + U, or right-click -> view page code

This is the same code that you get, and that's it data needs to be searched only in it, this guarantees that the element you need will be present when making requests through the PL.

If the required element is not on the page, then it is loaded by some kind of JS script. There will be two options here:

1. JS sends an additional request to the server, gets the required data and inserts it into HTML.

2. Data is created inside a JS script without requests (very unlikely)

If the data appears as a result of an additional request, then you just need to repeat this request.

To understand what you need, you need to use any traffic sniffer. The simplest is the request logger built into the browser. F12 -> network.

Usually it is enough to put the filter on XHR.

If you have Fiddler on hand, it will also work. Well, Burp / ZAP as an option (but very bold).

The algorithm will be something like this:

1. Open the Network tab

2. Clear the request history (if any)

3. It is advisable to check the "Preserve log" box so that the history does not disappear.

4. Refresh the page. If the content is loaded when scrolling / at the click of a button - twist / click, and so on.

5. Now you can press CTRL + F all in the same Network tab and enter the text you are looking for (let's say the name of the product).

6. On the left there will be those requests that contain this substring. Now you just need to click on them, find the one you need, see what it consists of and repeat it through requests.

You need to pay attention to the headers and the body of the request. Not uncommon when loading additional. Information in the body of the request is also passed the pointer to the current page, or the index of the element from which the new list begins. Also, additional headers can be added. For example, csrf token, or X-Requested-With. If repeating the request did not bring the desired result, it is worth checking the headers and the body again. If the site loads data when clicking on buttons, scrolling the page, etc., the algorithm is the same.

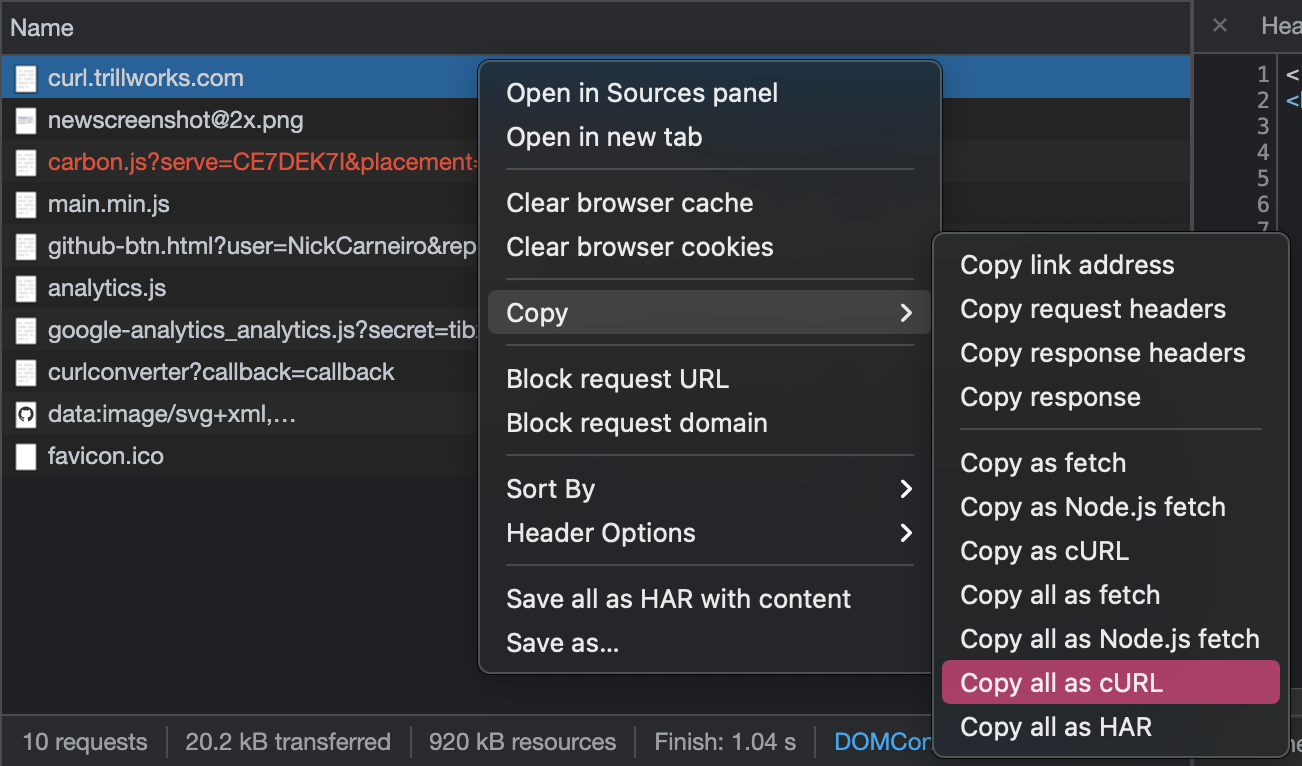

I share a good site that I saw here: https://curl.trillworks.com/

Copy your request as CURL

Then paste it on the site. It will give you the finished Python code. But you need to understand that this is an automatic process and it does not always give the correct result. In particular, the body transformation application/jsonis rather wrong. But for copy-paste of some headlines, it is quite suitable. In general, you can use the site, but you also need to think for yourself

. Of the good programs - Postman. Allows you to easily and quickly make queries, there is an export to Python code. I advise if the requests are quite heavy to make them "for profit".

Short summary:

1. Code via browser inspector != code from requests / curl request.

2. Most likely, the data you need is loaded additionally. requests are searched through any traffic monitoring.

3. Watch the request body and headers. Headlines, even the smallest ones, can affect the final result.

4. Always try to add headers User-Agent

If the data is loaded without additional requests, is in an unknown format, or just too lazy to figure it out, use Selenium. It is the same browser, but only with the ability to control its operation.

Didn't find what you were looking for?

Ask your questionAsk a Question

731 491 924 answers to any question