
Why is there such a discrepancy in occupied space between the ESXi host and the VM?

Good afternoon.

I am using a host running the ESXi hypervisor.

It currently runs 2 virtual machines.

Each VM uses thin disks.

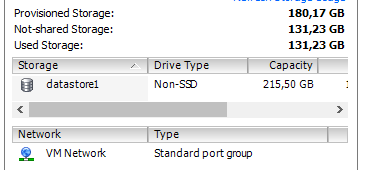

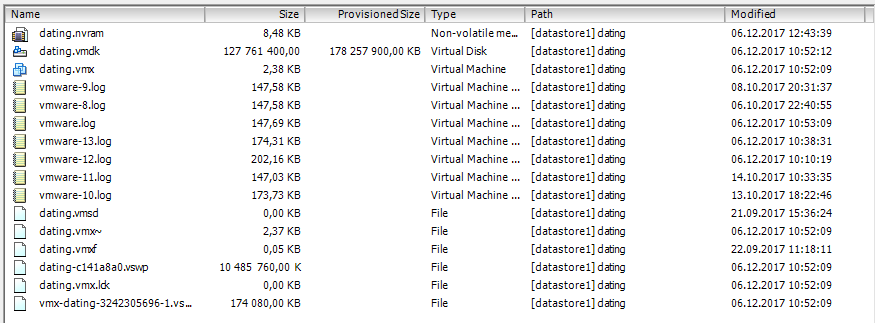

Used space: 131 GB.

The VM folder shows the disk as 127/170.

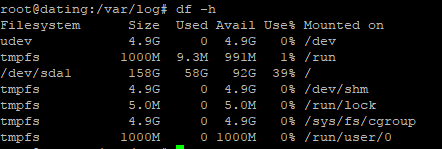

But inside the virtual machine itself, only 60 GB is shown as occupied.

I tried:

`vmkfstools -K name.vmdk`

but this command did not really help.

Where is the problem?


The previous answer is right about the causes but wrong about how to deal with them. Filling the free space with zeros is not a solution by itself, and of course no space will be freed up that way: the disks will simply grow to their maximum size, that's all. Yes, depending on the storage implementation, such blocks may actually be marked as free on the storage array (VMware itself does not do zero-block detection here), but this changes nothing for you: the hypervisor will still consider these blocks USED (based on VMFS metadata), even though the storage considers them free. And you still won't be able to place more data than VMFS considers free.

Therefore, `vmkfstools -K` must be run AFTER you have zeroed out all the free space inside the virtual machine's disks. Only then will it walk through the blocks on the datastore and actually mark the zeroed ones as free. The virtual machine must also be powered off. And this operation (zeroing the free space, then running vmkfstools) will have to be repeated periodically.
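Inside a Linux guest, free space is usually zeroed by writing a file of zeros until the filesystem is full and then deleting it (on Windows, Sysinternals `sdelete -z` does the same job). Below is a minimal Python sketch of that idea, assuming a Linux guest and a directory on the filesystem you want to clean; the function name `zero_free_space`, the filler file name and the `max_bytes` cap are hypothetical, added for illustration and testing. Briefly filling a filesystem can upset running services, and the host-side `vmkfstools -K` step is still needed afterwards.

```python
import errno
import os

CHUNK = 1024 * 1024  # write zeros in 1 MiB chunks


def zero_free_space(directory, max_bytes=None):
    """Fill free space under `directory` with zeros, then delete the filler file.

    Returns the number of zero bytes written. `max_bytes` is a hypothetical
    cap for testing; with None the filesystem is filled until ENOSPC.
    """
    path = os.path.join(directory, "zero.fill")  # hypothetical filler file name
    zeros = memoryview(bytes(CHUNK))
    written = 0
    try:
        # unbuffered, so write() reports exactly how many bytes hit the file
        with open(path, "wb", buffering=0) as f:
            while max_bytes is None or written < max_bytes:
                n = CHUNK if max_bytes is None else min(CHUNK, max_bytes - written)
                try:
                    written += f.write(zeros[:n])
                except OSError as e:
                    if e.errno == errno.ENOSPC:
                        break  # filesystem full: the free blocks now hold zeros
                    raise
            try:
                os.fsync(f.fileno())  # push the zeros down to the virtual disk
            except OSError as e:
                if e.errno != errno.ENOSPC:
                    raise
    finally:
        if os.path.exists(path):
            os.remove(path)  # give the space back to the guest filesystem
    return written
```

Run it with the guest mount point you want to clean, e.g. `zero_free_space("/")`, then power the VM off and run `vmkfstools -K` on the host.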

You can do it without shutting down, but you will need at least two datastores. When migrating a VM from one datastore to another in VMware (Storage vMotion), you can choose to convert the disk format. The process is as follows: first zero out the free space, then migrate the machine to the other datastore while converting its disks to thick, and then migrate it back while converting them to thin. During the reverse conversion VMware walks through all the blocks and marks the zeroed ones as free, shrinking the disk to the space actually used. But this is only available with two datastores and vCenter.
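To make the "skip zeroed blocks" step concrete: conceptually, a thin conversion reads the disk block by block and only allocates blocks that contain something other than zeros. The Python sketch below estimates how much a raw/flat disk image would keep after such a pass. It only illustrates zero-block detection and is not VMware's implementation; the 1 MiB block size and the function name `thin_footprint` are assumptions.

```python
BLOCK = 1024 * 1024  # assumed allocation unit for the estimate


def thin_footprint(image_path, block=BLOCK):
    """Return (total_blocks, nonzero_blocks) for a raw/flat disk image.

    Only non-zero blocks would need to be allocated after a thin conversion.
    """
    zero_block = bytes(block)
    total = nonzero = 0
    with open(image_path, "rb") as f:
        while True:
            chunk = f.read(block)
            if not chunk:
                break
            total += 1
            # a block survives only if it holds at least one non-zero byte
            if chunk != zero_block[:len(chunk)]:
                nonzero += 1
    return total, nonzero
```

For example, `total, used = thin_footprint("name-flat.vmdk")` (hypothetical file name) gives roughly `used` MiB as the size the disk could shrink to once the guest's free space has been zeroed.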

A lot also depends on how exactly you present the storage to the hypervisor. Local disks, NFS and block devices (FC, iSCSI) each have their own nuances. Starting with ESXi 6.0, if the storage system supports thin provisioning, you can enable the discard option when mounting filesystems in the guest systems. Then TRIM/UNMAP commands will reach the storage, so the blocks under deleted files are actually marked as free, and both VMFS and the storage will see them that way. This will not work on local drives and NFS.
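To check whether a Linux guest's filesystems are already mounted with `discard`, you can look at the mount options in `/proc/mounts` (a periodic `fstrim` run is the usual alternative to the mount option). A small Python sketch, assuming a Linux guest; the helper name `discard_mounts` is hypothetical.

```python
def discard_mounts(mounts_text):
    """Map mount point -> True if the 'discard' option is set, else False.

    `mounts_text` is the content of /proc/mounts: each line holds device,
    mount point, fs type, comma-separated options, dump and pass fields.
    """
    result = {}
    for line in mounts_text.splitlines():
        fields = line.split()
        if len(fields) < 4:
            continue  # skip malformed or empty lines
        mount_point, options = fields[1], fields[3].split(",")
        result[mount_point] = "discard" in options
    return result
```

Usage in the guest: `with open("/proc/mounts") as f: print(discard_mounts(f.read()))`.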

First: the output of `df` only shows information about mounted filesystems, so you can't see what lies underneath them.

Check how large the disk looks to the guest kernel and how much of it is allocated to partitions. Compare the sizes in bytes.
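On a Linux guest, `/sys/block/<disk>/size` and `/sys/block/<disk>/<partition>/size` report sizes in 512-byte units regardless of the device's real sector size (`lsblk -b` shows the same numbers in bytes from the shell). A minimal Python sketch of that comparison, assuming a Linux guest; the disk name `sda` and the helper name are assumptions.

```python
import os

SECTOR = 512  # sysfs 'size' files always count 512-byte units


def disk_and_partitions(disk="sda", sysfs="/sys/block"):
    """Return (disk_bytes, {partition: bytes}) as seen by the guest kernel."""
    base = os.path.join(sysfs, disk)
    with open(os.path.join(base, "size")) as f:
        disk_bytes = int(f.read()) * SECTOR
    parts = {}
    for name in sorted(os.listdir(base)):
        # partitions are subdirectories named after the disk, e.g. sda1
        size_file = os.path.join(base, name, "size")
        if name.startswith(disk) and os.path.isfile(size_file):
            with open(size_file) as f:
                parts[name] = int(f.read()) * SECTOR
    return disk_bytes, parts
```

`disk_bytes - sum(parts.values())` is the space the kernel sees on the disk but no partition covers, which `df` will never report.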

Second: thin disks are a way to "sell" more than you actually have :)

In real operation the benefit is doubtful. And it is a direct path to trouble, when one or more virtual machines are stopped because they asked the hypervisor to allocate space and there was nothing left to allocate.
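The risk is easy to quantify: compare the total provisioned size of the thin disks on a datastore with its capacity, and what they already consume with what is left. A minimal Python sketch; the function name and all numbers are hypothetical, not taken from the question.

```python
def overcommit(provisioned_gb, used_gb, capacity_gb):
    """Return (overcommit ratio, GB free now, GB missing if every disk fills up)."""
    total_provisioned = sum(provisioned_gb)
    ratio = total_provisioned / capacity_gb
    free_now = capacity_gb - sum(used_gb)
    # if all thin disks grow to their full size, this much space is missing
    shortfall = max(0, total_provisioned - capacity_gb)
    return ratio, free_now, shortfall


# hypothetical 500 GB datastore with three 200 GB thin disks
ratio, free_now, shortfall = overcommit([200, 200, 200], [120, 90, 60], 500)
print(f"overcommit {ratio:.2f}x, {free_now} GB free, {shortfall} GB short at full growth")
```

Any ratio above 1.0 means the datastore can run out of space before the guests think their disks are full.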

Both Linux and all modern versions of Windows can extend a partition inside a virtual machine without stopping the system, so I allocate a disk slightly larger than I currently need, with a margin of a couple of months, and grow it as necessary.
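The "couple of months of margin" rule is simple arithmetic. A sketch, assuming roughly linear monthly growth; the function name, the 10 GB rounding step and the example numbers are hypothetical.

```python
import math


def disk_size_gb(used_gb, growth_gb_per_month, months=2, step_gb=10):
    """Size to allocate: current usage plus `months` of growth, rounded up to `step_gb`."""
    needed = used_gb + growth_gb_per_month * months
    return math.ceil(needed / step_gb) * step_gb


print(disk_size_gb(60, 5))  # 60 GB used, about 5 GB/month growth -> 70
```

When usage approaches that size, extend the virtual disk and then the partition and filesystem inside the guest.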

Third, why not split the virtual disk into the necessary "hard" partitions (ones that take up little space and practically do not change over the lifetime of the software), for example:

- /boot
- /home
- /var/lib/mysql
- /var/log/

and so on.
