
Why does the site freeze with 30-40 concurrent requests?

I have a script that sends requests to a site via curl_multi. When each request completes, the site sends a callback to my server (it sends each one separately, one response at a time).

When 30-35 callbacks arrive at the same time, the callback script starts for each of them, queries the database, and then makes a curl request through a proxy. Naturally, this can hang for a long time, because it goes through a proxy.

I've heard about queue systems, but I don't quite understand how they work or whether one would help in my situation.

If a queue system simply delays executing requests until the previous ones have completed, I'm afraid it won't work for me, because the data the site gives me in the callback is valid for only 2 minutes, and I have to make the request as soon as possible.
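A queue does not have to mean "one request at a time": how many requests run in parallel depends on how many consumers read from it, and each consumer can check the 2-minute lifetime before doing any work. The sketch below is a minimal illustration under assumptions: Python's `asyncio.Queue` stands in for a real queue server, `asyncio.sleep` stands in for the proxied request, and `TTL_SECONDS`, `Task`, `consumer`, and `run_demo` are hypothetical names.

```python
import asyncio
import time
from dataclasses import dataclass, field

# Hypothetical lifetime of the data delivered in a callback (2 minutes, per the question).
TTL_SECONDS = 120.0


@dataclass
class Task:
    task_id: int
    payload: str
    received_at: float = field(default_factory=time.monotonic)

    def expired(self, now: float) -> bool:
        """True if the callback data is older than its lifetime."""
        return now - self.received_at > TTL_SECONDS


async def consumer(queue: asyncio.Queue, results: list) -> None:
    """One of several consumers reading from the same queue in parallel."""
    while True:
        task = await queue.get()
        try:
            if task.expired(time.monotonic()):
                results.append((task.task_id, "expired"))  # too late, skip the request
            else:
                await asyncio.sleep(0.05)  # stand-in for the slow proxied request
                results.append((task.task_id, "done"))
        finally:
            queue.task_done()


async def run_demo(n_tasks: int = 30, n_consumers: int = 10):
    queue: asyncio.Queue = asyncio.Queue()
    results: list = []
    for i in range(n_tasks):
        queue.put_nowait(Task(i, f"payload-{i}"))
    consumers = [asyncio.create_task(consumer(queue, results)) for _ in range(n_consumers)]
    start = time.monotonic()
    await queue.join()  # returns once every task has been handled
    elapsed = time.monotonic() - start
    for c in consumers:
        c.cancel()
    await asyncio.gather(*consumers, return_exceptions=True)
    return results, elapsed


if __name__ == "__main__":
    results, elapsed = asyncio.run(run_demo())
    print(len(results), round(elapsed, 2))
```

With 30 tasks, 10 consumers, and 50 ms per simulated request, the queue drains in roughly 0.15 s instead of the 1.5 s a strictly sequential queue would need. The degree of parallelism is a setting, not a property of queues.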

=====

When many callbacks are running (each making curl requests), phpMyAdmin takes minutes to load a page, while FileZilla and everything else keeps working quickly.

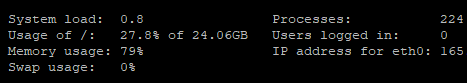

Below I show what the load looks like: The

question in the end is this: Is it possible to do something, or just not load a large number of requests until the old ones are completed? Lack of resources or what? Will the queuing system help in my case?


From what I understand, your callback handler makes a curl request somewhere else (through a proxy), so a worker from the web server's pool is held until that request completes. Your server will quickly run out of web workers, and even if you increase them to 100, it will run out of memory. This is clearly a bad design.
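The effect can be estimated with simple arithmetic. Assuming a blocking model in which each callback holds one worker for the whole DB query plus proxied request, a burst of B simultaneous requests served by W workers needs ceil(B/W) rounds, so the last request starts after (ceil(B/W) - 1) × T seconds, where T is the time one request holds a worker. The function name and the numbers below are hypothetical.

```python
import math


def burst_wait_seconds(burst_size: int, pool_size: int, hold_seconds: float) -> float:
    """Seconds until the last request of a simultaneous burst gets a free worker.

    Assumes a blocking model: each request occupies one worker for hold_seconds
    (the DB query plus the proxied curl call), and no worker is freed earlier.
    """
    rounds = math.ceil(burst_size / pool_size)
    return (rounds - 1) * hold_seconds


if __name__ == "__main__":
    # Hypothetical numbers: 5 PHP workers, 30 s per proxied request.
    print(burst_wait_seconds(35, 5, 30))  # last of 35 callbacks -> 180
    print(burst_wait_seconds(36, 5, 30))  # e.g. a phpMyAdmin page right after the burst -> 210
```

With these made-up numbers the 35th callback waits 180 s, already past the 2-minute lifetime, and any request arriving right after the burst (such as a phpMyAdmin page) waits 210 s. FileZilla stays fast because FTP is not served by the PHP worker pool.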

Yes, essentially you need to implement a queue system.

You need to handle the incoming callback request as quickly as possible and release the worker back to the pool. To do this, limit the handler to saving the necessary data in some kind of storage, or sending it as a message to a queue manager, and delegate the work of sending the outgoing requests to a separate pool of workers. For example, 1-4 workers/daemons on ReactPHP or Node.js that take a batch of tasks and send out the requests asynchronously.
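A minimal sketch of that split, assuming Python's `asyncio` in place of ReactPHP or Node.js and an in-process `asyncio.Queue` in place of a real queue manager (in production the web handler and the sender daemon would be separate processes sharing an external queue such as Redis or RabbitMQ). `handle_callback`, `send_via_proxy`, `sender_worker`, and `demo` are hypothetical names; `send_via_proxy` only sleeps where the real proxied request would go.

```python
import asyncio
import time


async def handle_callback(queue: asyncio.Queue, payload: dict) -> dict:
    """Fast path for the web handler: record the job and return immediately.
    No database query or proxied request happens here."""
    await queue.put({"payload": payload, "received_at": time.monotonic()})
    return {"status": "accepted"}


async def send_via_proxy(job: dict) -> str:
    """Hypothetical placeholder for the real proxied HTTP request."""
    await asyncio.sleep(0.1)  # simulated network latency
    return f"sent {job['payload']['id']}"


async def sender_worker(queue: asyncio.Queue, batch_size: int, sent: list) -> None:
    """Daemon loop: wait for at least one job, grab up to batch_size jobs,
    and send the whole batch concurrently."""
    while True:
        batch = [await queue.get()]
        while len(batch) < batch_size and not queue.empty():
            batch.append(queue.get_nowait())
        results = await asyncio.gather(
            *(send_via_proxy(job) for job in batch), return_exceptions=True
        )
        sent.extend(results)  # failures are kept as exception objects
        for _ in batch:
            queue.task_done()


async def demo(n_callbacks: int = 35, n_workers: int = 2, batch_size: int = 20):
    queue: asyncio.Queue = asyncio.Queue()
    sent: list = []
    workers = [
        asyncio.create_task(sender_worker(queue, batch_size, sent))
        for _ in range(n_workers)
    ]
    start = time.monotonic()
    replies = [await handle_callback(queue, {"id": i}) for i in range(n_callbacks)]
    accept_time = time.monotonic() - start
    await queue.join()  # wait until every job has been sent
    total_time = time.monotonic() - start
    for w in workers:
        w.cancel()
    await asyncio.gather(*workers, return_exceptions=True)
    return replies, sent, accept_time, total_time


if __name__ == "__main__":
    replies, sent, accept_time, total_time = asyncio.run(demo())
    print(len(replies), len(sent), round(total_time, 2))
```

The callback handler returns as soon as the job is enqueued, and the two sender workers drain all 35 jobs in roughly the time of a single simulated request, because each batch is sent concurrently with `asyncio.gather`.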

1) If PHP runs under FPM, increase the number of processes as long as there is enough memory/CPU.

2) If it runs through Apache, throw out Apache and install FPM, then repeat step 1.

3) If you really want queues, push requests to a queue server, give the task ID back to the client, and let the client poll every N seconds to check whether the task has completed.
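A minimal sketch of the task ID and polling flow, with an in-memory `TaskQueue` class standing in for a real queue server. `TaskQueue`, `submit`, `status`, `work_one`, and `poll_until_done` are hypothetical names; the `sleep` parameter is injectable so a test can simulate a worker finishing the task between polls instead of actually waiting.

```python
import time
import uuid
from collections import deque
from typing import Any, Callable, Optional


class TaskQueue:
    """In-memory stand-in for a queue server (hypothetical)."""

    def __init__(self) -> None:
        self._pending: deque = deque()
        self._status: dict = {}

    def submit(self, payload: Any) -> str:
        """Enqueue a task and hand its ID back to the client right away."""
        task_id = uuid.uuid4().hex
        self._status[task_id] = {"state": "pending", "result": None}
        self._pending.append((task_id, payload))
        return task_id

    def status(self, task_id: str) -> dict:
        """What the client sees when it polls with its task ID."""
        return self._status.get(task_id, {"state": "unknown", "result": None})

    def work_one(self, handler: Callable[[Any], Any]) -> bool:
        """Worker side: process the oldest pending task, if any."""
        if not self._pending:
            return False
        task_id, payload = self._pending.popleft()
        self._status[task_id] = {"state": "done", "result": handler(payload)}
        return True


def poll_until_done(q: TaskQueue, task_id: str, interval_s: float = 2.0,
                    max_polls: int = 30,
                    sleep: Callable[[float], Any] = time.sleep) -> Optional[Any]:
    """Client side: ask every interval_s seconds until the task is done."""
    for _ in range(max_polls):
        st = q.status(task_id)
        if st["state"] == "done":
            return st["result"]
        sleep(interval_s)
    return None  # gave up; the task is still pending
```

In a real deployment `submit` and `status` would be two HTTP endpoints, and a separate worker process would do the equivalent of `work_one`.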

For a general understanding of what is happening, see "How long will my server last?"
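For step 1, PHP-FPM's `pm.max_children` setting caps the number of worker processes. A common rule of thumb is to divide the memory available to PHP by the average memory of one PHP-FPM process; the helper below is a hypothetical sketch of that estimate, and the figures in the example are made up.

```python
def estimate_max_children(total_ram_mb: int, reserved_mb: int, avg_process_mb: int) -> int:
    """Rough upper bound for PHP-FPM's pm.max_children.

    reserved_mb: memory kept for the OS, MySQL, the web server, etc.
    avg_process_mb: measured average memory of one PHP-FPM process.
    """
    available = total_ram_mb - reserved_mb
    if available <= 0 or avg_process_mb <= 0:
        return 0
    return available // avg_process_mb


if __name__ == "__main__":
    # Hypothetical server: 2 GB RAM, 512 MB reserved, ~40 MB per PHP process.
    print(estimate_max_children(2048, 512, 40))  # 38
```

Measure the real per-process memory on your server before setting the value. Note that with blocking proxied requests, most of these processes just sit waiting on the network, which is why a queue scales further than adding processes.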
