
When I try to list the /root directory with ls, the process freezes. How do I fix it?

Some background: cron, running wget, generated 15 million files in this folder.

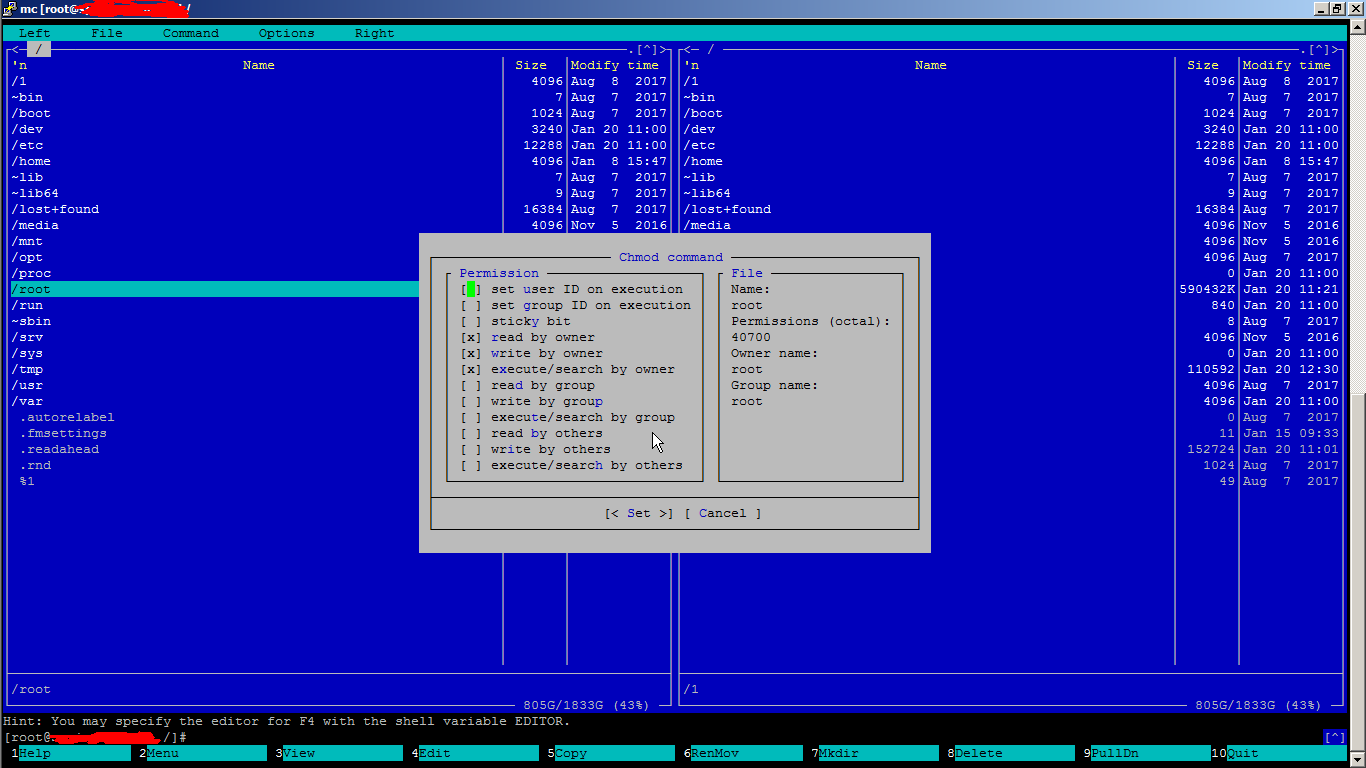

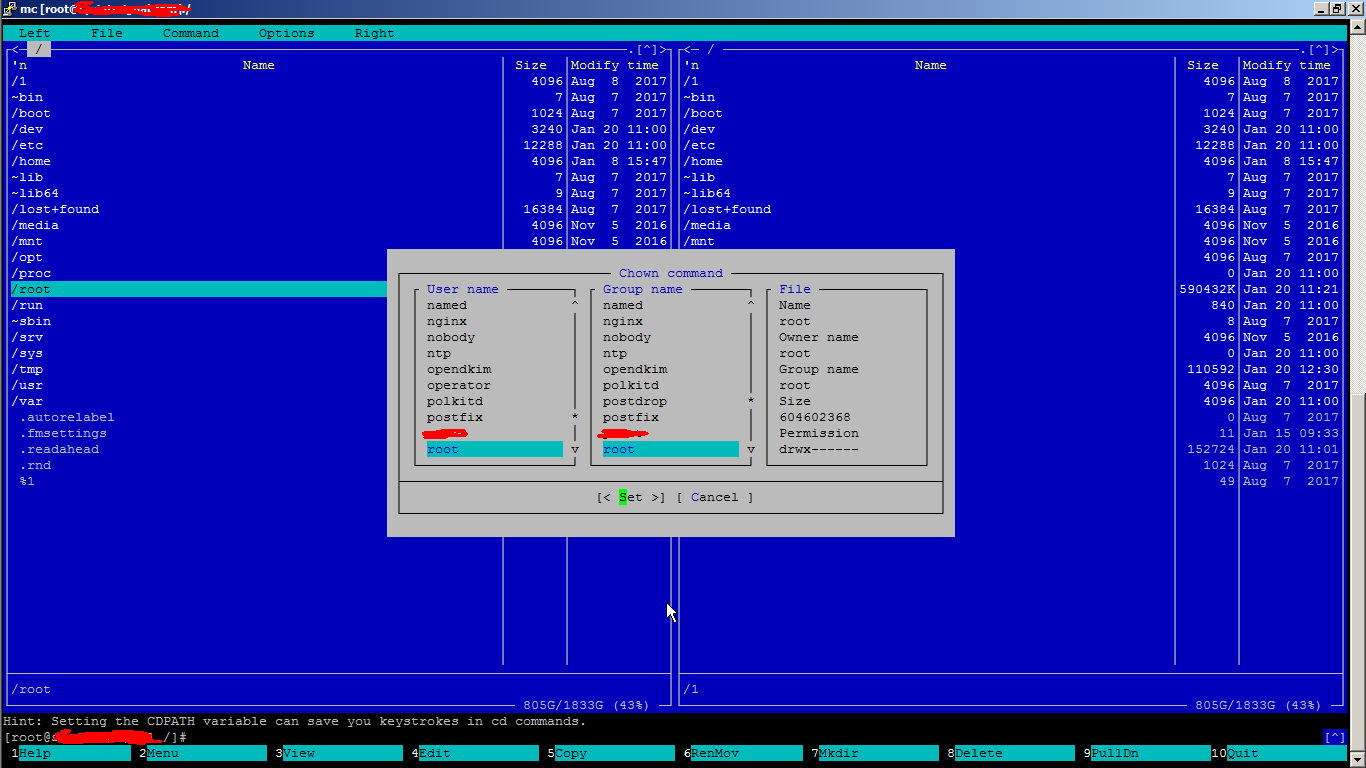

I fixed the problem and cleaned out the folder, but now every attempt to read the directory hangs. SSH still works, and there is nothing suspicious in the logs. The hung process cannot be killed either; only a reboot gets rid of it. As long as that process is alive, the server does not respond to other commands run as root. The folder permissions look normal, and cd into it works. How do I fix this? Maybe the file system is just no good anymore? Has anyone run into this?

And yes, at first everything worked fine.

What puzzles me is the size of the folder: it is still 600 MB.


All in all, the problem is solved.

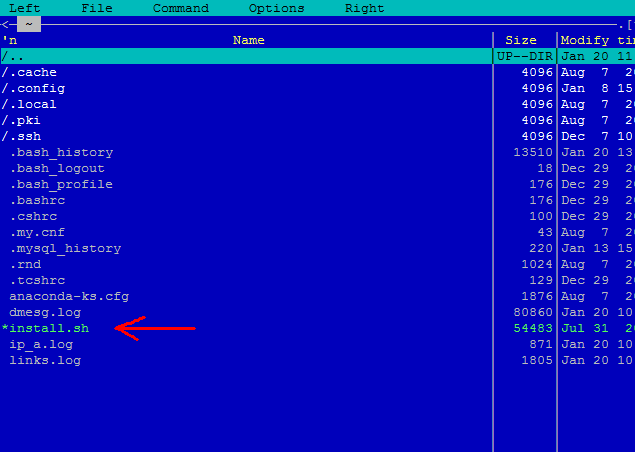

That is what was in the folder.

It turns out that this script (a panel installer) was somehow launched, possibly because of incorrect permissions on the folder. The permissions shown in the screenshots in the question are the correct ones.

Thank you all for trying to help. I was baffled by the problem myself: sometimes it worked, sometimes it didn't. By the way, can anyone explain why this happened?

By default, ls does not just read the directory: it also calls stat on every file to color-code its type and executable bit. With millions of files, this slows it down enormously.

To work around this, call ls without the options that the ls alias adds:

    $ alias ls
    alias ls='ls --color=auto'
    $ ls      # calls the alias
    $ 'ls'    # calls plain ls, without the alias options

    'ls' /root > /tmp/list_of_files
    less /tmp/list_of_files
    find /root -name 'index.html.*' -delete
    ls /root
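The same bypass can be wrapped in two small shell functions. This is a minimal sketch, assuming bash or another POSIX shell and GNU coreutils; list_fast and count_entries are hypothetical names. Quoting is one way to skip an alias; the command builtin (or a leading backslash, \ls) works too. GNU ls -f goes a step further than plain ls: it turns off sorting as well as coloring, so ls neither has to stat every entry nor sort millions of names before printing.

```shell
#!/bin/sh
# Hypothetical helpers, assuming GNU ls: -f disables sorting and coloring
# and implies -a, so "." and ".." are listed as well.

# List a directory in on-disk order. "command" bypasses any "ls" alias
# (and any shell function named ls) and runs the real ls binary.
list_fast() {
    command ls -f -- "$1"
}

# Count the entries of a directory without sorting or coloring them.
# Subtracts 2 for "." and "..". Names containing newlines would be
# counted more than once; fine for a quick estimate.
count_entries() {
    echo $(( $(command ls -f -- "$1" | wc -l) - 2 ))
}

# Example, run as root:
#   list_fast /root | head -n 20
#   count_entries /root
```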

> Some background: cron, running wget, generated 15 million files in this folder.
>
> Has anyone run into this?

The root account exists to administer the system, not to download trillions of files into its home directory.

Ext4 is fine; I was afraid it was btrfs. Otherwise everything is in order. "ls" hangs because there are still millions of files there. Listing them causes heavy disk I/O and uses a lot of memory (or, worse, swap), which is why everything else freezes. If you look at the size of the directory itself with "ls -lhd /root", you'll be horrified. The best fix has already been suggested to you: save everything you need (usually .ssh; everything else can be recreated), delete the entire directory, then create it again and put the saved files back.
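The save, delete, recreate procedure from the answer above could be sketched as a shell function. This assumes GNU coreutils (stat -c), that it runs as root, and that the current directory is not inside the directory being rebuilt; rebuild_dir is a hypothetical name, and the entries to keep are passed as arguments (for /root, usually just .ssh). On failure the function returns early and leaves the temporary backup in place rather than deleting it.

```shell
#!/bin/sh
# Hypothetical helper: rebuild_dir DIR NAME...
# Saves the listed top-level entries of DIR, deletes DIR with everything
# else in it, recreates DIR with its original permission bits, and puts
# the saved entries back. The new DIR is owned by the calling user, so run
# it as the directory's owner (root for /root). Destructive: check the
# arguments before running it.
rebuild_dir() {
    dir=${1%/}; shift
    mode=$(stat -c '%a' -- "$dir") || return 1   # e.g. 700 for /root
    backup=$(mktemp -d) || return 1

    # Save what you need.
    for name in "$@"; do
        if [ -e "$dir/$name" ]; then
            cp -a -- "$dir/$name" "$backup/" || return 1
        fi
    done

    # Demolish the bloated directory and create a fresh, small one.
    rm -rf -- "$dir"
    mkdir -m "$mode" -- "$dir" || return 1

    # Return the saved entries, then drop the now-empty backup directory.
    for name in "$@"; do
        if [ -e "$backup/$name" ]; then
            mv -- "$backup/$name" "$dir/" || return 1
        fi
    done
    rmdir -- "$backup"
}
```

Usage: rebuild_dir /root .ssh. Comparing "ls -ld /root" before and after shows the directory's own size dropping back to a single block. On SELinux-enabled systems, running restorecon -R /root afterwards restores the default security labels.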

I suspect the file system grew the directory to hold a list of 15 million entries, but it cannot shrink a directory afterwards.

That is, when the files were created, blocks were allocated to "/root" and they stay allocated. Now ls has to read through all of them, even though only a few entries are still in use.
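One way to observe this is to fill a scratch directory, empty it again, and compare the directory's own size (st_size, printed by GNU stat -c %s) after each step. A minimal sketch, assuming GNU coreutils and findutils; dir_size_demo is a hypothetical name and the count of 20,000 files is arbitrary. On ext4 the emptied directory keeps the size it grew to, while other file systems (tmpfs, for example) may report different numbers, so run it on the file system you care about.

```shell
#!/bin/sh
# Hypothetical demo: dir_size_demo [PARENT]
# Creates a scratch directory under PARENT (default: current directory),
# fills it with empty files, deletes them again, and prints the directory's
# own size in bytes after each step. Assumes GNU stat, mktemp, find, xargs.
dir_size_demo() {
    d=$(mktemp -d -p "${1:-.}") || return 1
    echo "empty: $(stat -c %s -- "$d")"
    # 20000 empty files named 1..20000; xargs batches the touch calls.
    (cd "$d" && seq 20000 | xargs touch) || return 1
    echo "full: $(stat -c %s -- "$d")"
    find "$d" -mindepth 1 -delete
    echo "emptied: $(stat -c %s -- "$d")"
    rmdir -- "$d"
}

# Example:
#   dir_size_demo /var/tmp
```

If a directory cannot simply be recreated, e2fsck -D can compact directories on ext2/3/4, but it has to run against the unmounted file system.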

I recommend creating a new directory, moving all the visible contents into it, deleting the old one, and then renaming the new directory to root. But it seems this has already been done.
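The new-directory approach described above could look like this. A minimal sketch, assuming GNU coreutils (mv -t, stat -c) and findutils; swap_dir is a hypothetical name, and DIR.new must not exist yet. Because find -mindepth 1 -maxdepth 1 matches dotfiles too, .ssh and similar entries move along with everything else, and the old directory can then be removed with a plain rmdir, which refuses to delete a directory that is not empty.

```shell
#!/bin/sh
# Hypothetical helper: swap_dir DIR
# Moves every remaining entry of DIR (dotfiles included) into a freshly
# created DIR.new, removes the now-empty old DIR, and renames DIR.new to DIR.
# Assumes GNU find/mv/stat and that nothing else is writing into DIR.
swap_dir() {
    dir=${1%/}
    new="$dir.new"
    # Same permission bits as the original (e.g. 700 for /root).
    mkdir -m "$(stat -c '%a' -- "$dir")" -- "$new" || return 1
    # Move the entries in batches; -t names the target directory first.
    find "$dir" -mindepth 1 -maxdepth 1 -exec mv -t "$new" -- {} + || return 1
    # rmdir only succeeds on an empty directory, so nothing is lost here.
    rmdir -- "$dir" || return 1
    mv -- "$new" "$dir"
}
```

Both directories live in the same parent, so the final mv is a cheap rename rather than a copy.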
