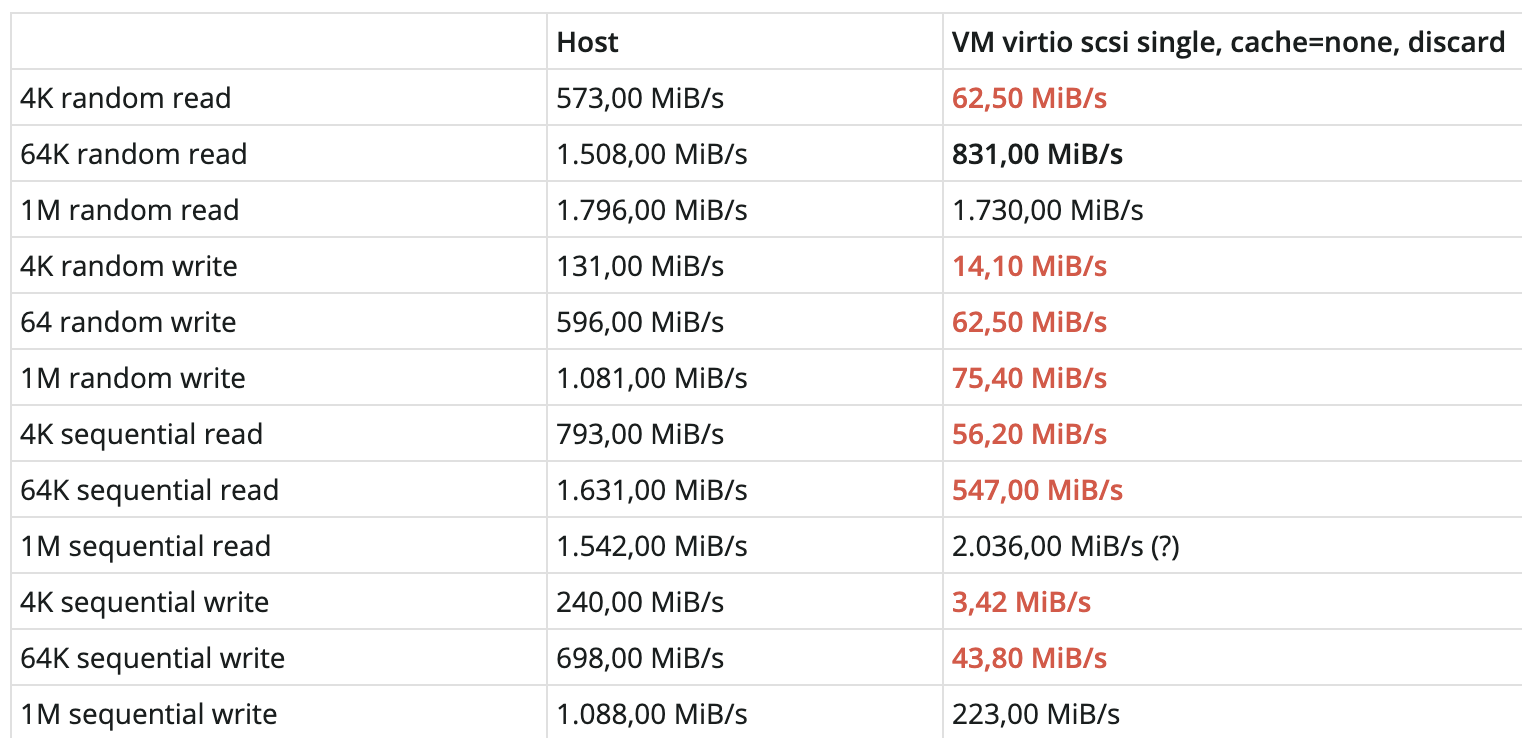

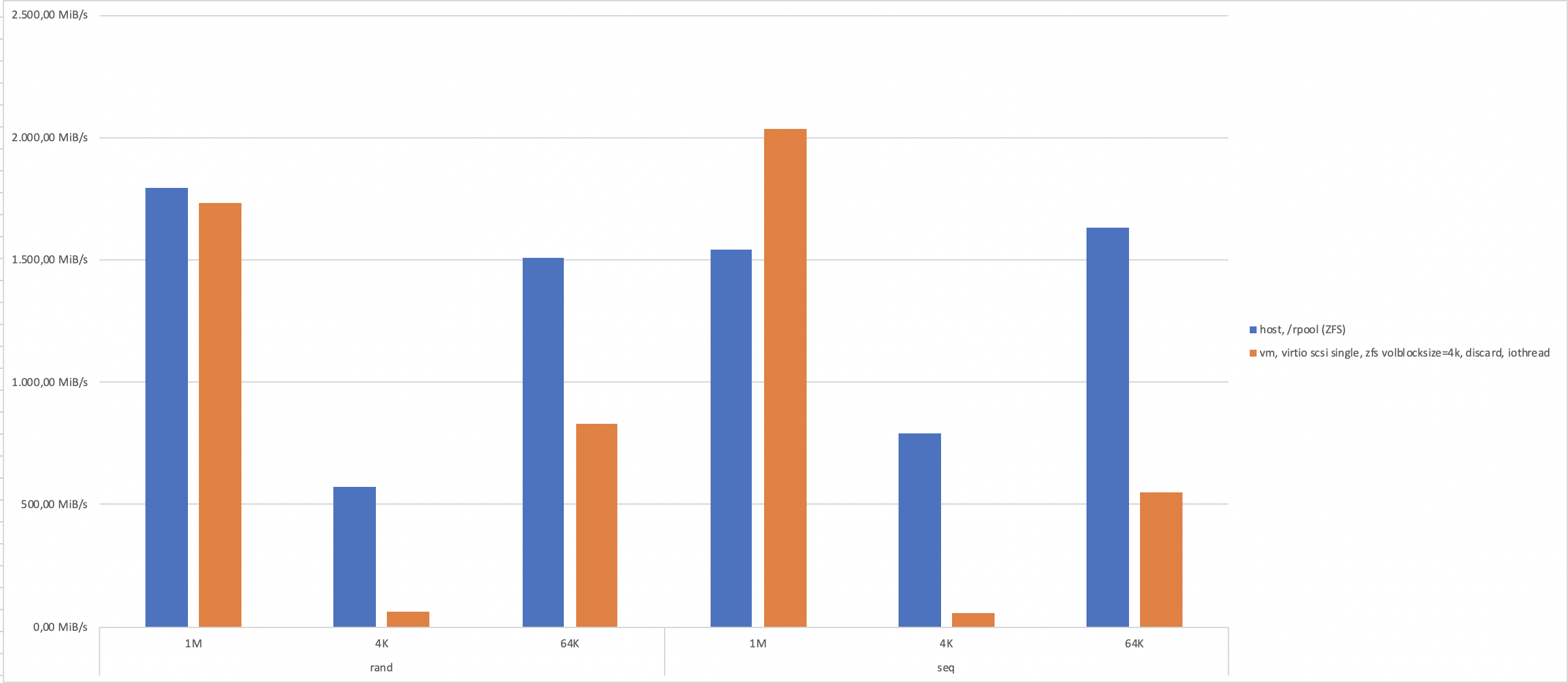

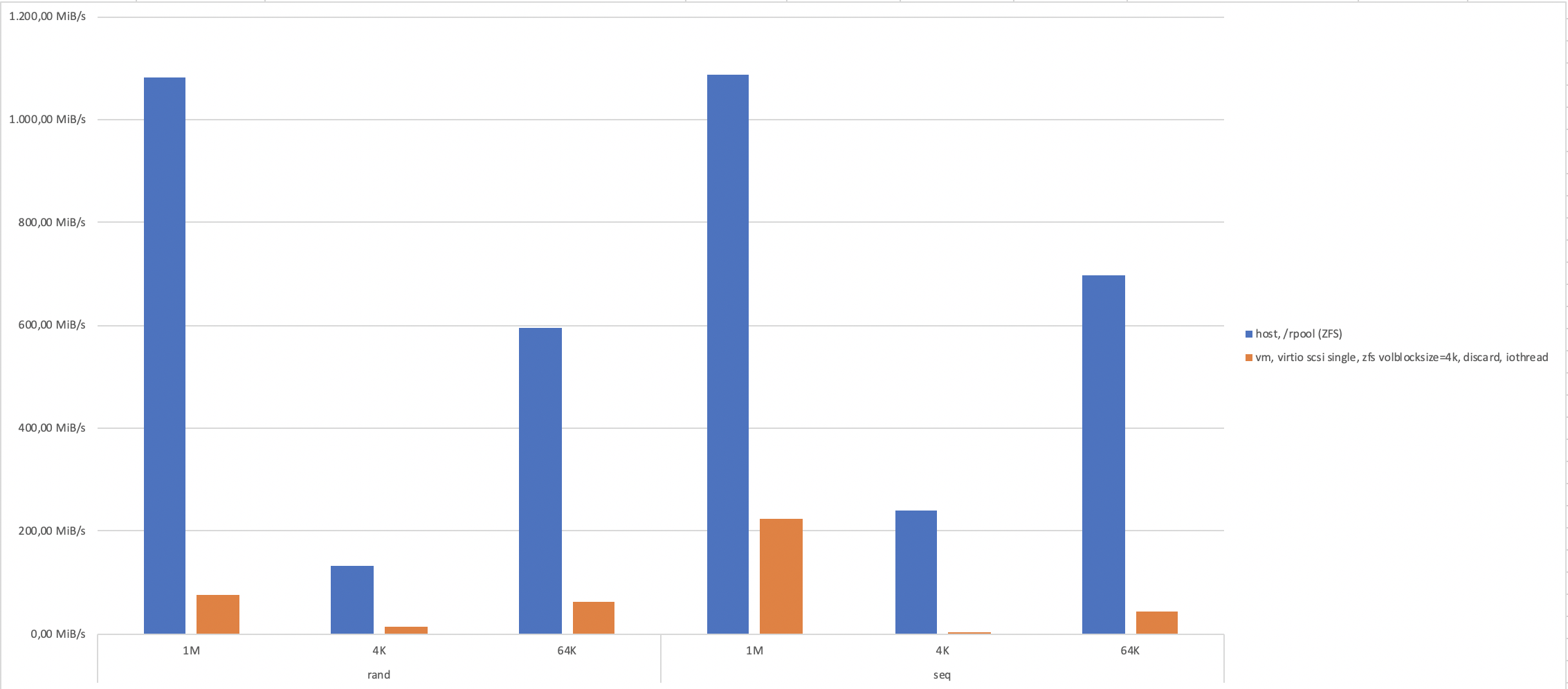

What causes such a severe drop in I/O performance between a Proxmox host on ZFS and a Windows Server 2019 VM?

For a week now I have been trying to find the reason for the terrible drop in I/O performance between a Proxmox host on ZFS and Windows Server 2019 virtual machines.

Given:

fio --filename=test --sync=1 --rw=$TYPE --bs=$BLOCKSIZE --numjobs=1 --iodepth=4 --group_reporting --name=test --filesize=1G --runtime=30
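The command above leaves `$TYPE` and `$BLOCKSIZE` open. As a rough sketch of how such a sweep could be driven, the script below loops over a few access patterns and block sizes. The value lists, the `fio_cmd` helper, and the `RUN` switch are assumptions for illustration; the post does not say which values were actually tested. By default it only prints the commands.

```shell
#!/bin/sh
# Hypothetical value lists: the original post does not say which were used.
TYPES="${TYPES:-read write randread randwrite}"
BLOCKSIZES="${BLOCKSIZES:-4k 64k 1M}"

# Print the fio command line from the post for one TYPE/BLOCKSIZE pair.
fio_cmd() {
    printf 'fio --filename=test --sync=1 --rw=%s --bs=%s --numjobs=1 --iodepth=4 --group_reporting --name=test --filesize=1G --runtime=30\n' "$1" "$2"
}

# Dry run by default: print each command. Set RUN=1 to actually execute fio.
for TYPE in $TYPES; do
    for BLOCKSIZE in $BLOCKSIZES; do
        if [ "${RUN:-0}" = "1" ]; then
            eval "$(fio_cmd "$TYPE" "$BLOCKSIZE")"
        else
            fio_cmd "$TYPE" "$BLOCKSIZE"
        fi
    done
done
```

Running the same sweep on the host and inside the guest would give directly comparable numbers for each pattern and block size.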

In Proxmox 5 I also tried a zvol, with Linux, and the result was the same.

I got in touch with the current ZFS developers. They confirmed the problem and there were patches, but their fixes did not help.

I now run on LVM.

If you need performance, you will have to abandon ZFS. I struggled for a long time but could not get good performance under high disk load on ZFS.
