What is the correct way to exclude dynamic URLs (`.html` pages with a query string)?

Some pages are generated by the parser and have URLs like:

`/zhenskie-platya-_53.html?makeOrder=1`

How do I block pages in robots.txt whose URL contains the `html?` fragment?

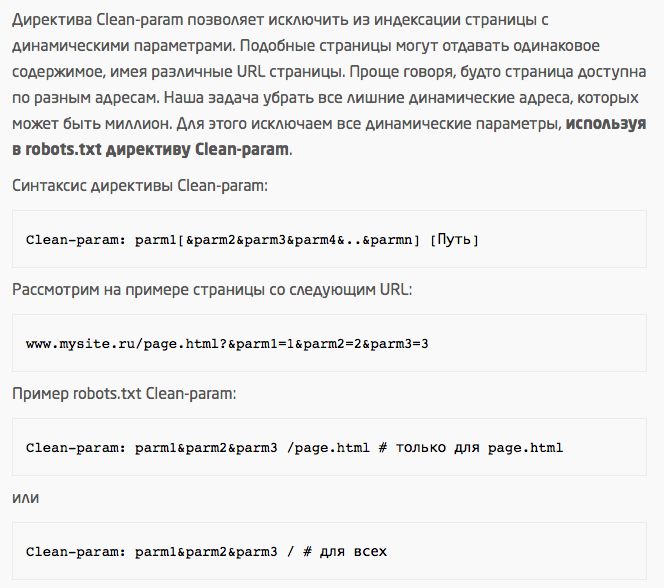

I found a way to close it on the net using the Clean-param directive, below is a screenshot:

But how do I write it for my case, so that pages whose URL contains `html?` are blocked?

Answer:

Clean-param is supported only by Yandex, and it's not clear whether it works reliably.
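Note that Clean-param does not block pages: it tells Yandex that certain query parameters do not change the page content, so URLs that differ only in those parameters are treated as one page. The minimal Python sketch below models that idea only, not Yandex's actual implementation; the function name `strip_ignored_params` is hypothetical, and `makeOrder` is taken from the URL in the question.

```python
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit


def strip_ignored_params(url: str, ignored: set[str]) -> str:
    """Drop query parameters listed in `ignored`, keeping the rest in order.

    Hypothetical helper that models the idea behind Clean-param: URLs that
    differ only in these parameters collapse to the same address.
    """
    parts = urlsplit(url)
    kept = [
        (name, value)
        for name, value in parse_qsl(parts.query, keep_blank_values=True)
        if name not in ignored
    ]
    # urlencode([]) is "", so a query made only of ignored params disappears.
    return urlunsplit(parts._replace(query=urlencode(kept)))


if __name__ == "__main__":
    # Both URLs collapse to the same address once makeOrder is ignored.
    print(strip_ignored_params("/zhenskie-platya-_53.html?makeOrder=1", {"makeOrder"}))
    print(strip_ignored_params("/zhenskie-platya-_53.html", {"makeOrder"}))
```

Because the page with `?makeOrder=1` is still crawlable after such normalization, Clean-param is a deduplication hint rather than a way to close pages.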

For your case, use the special character `*`, which matches any sequence of characters:

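```
User-agent: *
Disallow: /*.html?
```

Here `?` is a literal character, not a wildcard. Rules match from the start of the URL path (query string included), so a trailing `*` is implied, and starting the rule with `/` follows the documented form of a path rule.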
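To check which URLs a wildcard rule like the one above catches, here is a minimal Python sketch. Python's `urllib.robotparser` does plain prefix matching without `*` support, so the sketch compiles the rule to a regex itself, assuming the commonly documented semantics (`*` = any sequence, trailing `$` = end of URL, everything else literal, prefix match otherwise). The names `robots_rule_to_regex` and `is_blocked` are hypothetical, and Allow/Disallow precedence between multiple rules is not modeled.

```python
import re


def robots_rule_to_regex(rule: str) -> re.Pattern:
    """Compile a robots.txt path rule: '*' = any sequence, trailing '$' = end of URL.

    Every other character, including '.' and '?', is matched literally.
    """
    anchored = rule.endswith("$")
    if anchored:
        rule = rule[:-1]
    # Escape literal chunks and join them with '.*' where the '*' wildcards were.
    body = ".*".join(re.escape(chunk) for chunk in rule.split("*"))
    return re.compile(body + (r"\Z" if anchored else ""))


def is_blocked(path_and_query: str, rule: str) -> bool:
    # re.match anchors only at the start, so an unanchored rule is a prefix match.
    return robots_rule_to_regex(rule).match(path_and_query) is not None


if __name__ == "__main__":
    for url in ("/zhenskie-platya-_53.html?makeOrder=1", "/zhenskie-platya-_53.html"):
        # Compare the pattern from the answer with its leading-slash form.
        print(url, is_blocked(url, "*.html?*"), is_blocked(url, "/*.html?"))
```

With these semantics, `*.html?*` and `/*.html?` block the same URLs: the page with `?makeOrder=1` is blocked, while the plain `.html` page stays open.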

More details: https://yandex.ru/support/webmaster/controlling-ro...
