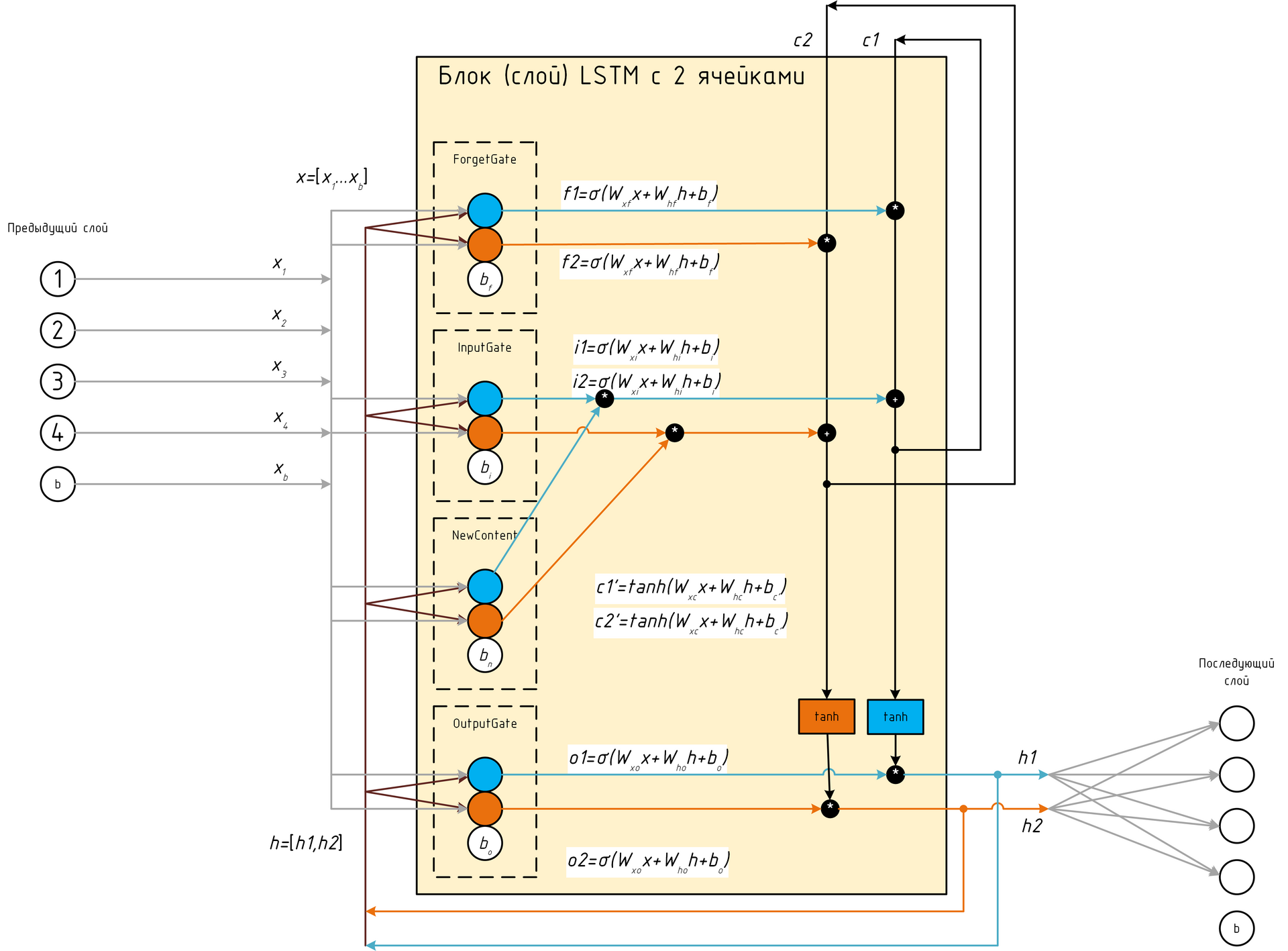

What is the correct configuration for a multi-memory LSTM block?

Hello!

I'm interested in neural networks and am trying to implement them in code. Simple perceptron-based networks are clear to me, but I have trouble understanding LSTM-based networks. Every source I have found explains how an LSTM works using a single memory cell, and I have no problem with that. But when I tried to assemble a block of several cells, the following questions arose:

1. How does an LSTM cell map onto a simple neuron (perceptron)? That is, if we build a layer from LSTM cells, can we treat one LSTM cell as the analogue of one neuron in an ordinary network layer? Or is a block of several LSTM cells the analogue of a single neuron? Or is the LSTM block itself the analogue of a whole perceptron layer?

2. The bias neuron in the LSTM gates: is it shared by a given gate across all cells, or does each cell have its own? To clarify: if we have two cells, will the input gate have two bias neurons (one per cell) or just one?

My current understanding is shown in the figure.

Is it correct?
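
To make the two questions concrete, here is a minimal pure-Python sketch of how a multi-cell LSTM layer is usually parameterized. It assumes the standard LSTM formulation without peephole connections; the class `LSTMLayer` and its fields are illustrative names, not taken from any library or from the figure. Under that assumption, each cell acts like one unit of the layer, and every gate holds one weight row and one bias value per cell.

```python
import math
import random


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


class LSTMLayer:
    """Hypothetical layer of `n_cells` LSTM cells (standard form, no peepholes)."""

    GATES = ("input", "forget", "output", "candidate")

    def __init__(self, n_inputs, n_cells, seed=0):
        rng = random.Random(seed)
        self.n_inputs = n_inputs
        self.n_cells = n_cells
        # For each gate: one weight row per cell, spanning [x_t, h_{t-1}].
        self.W = {
            g: [[rng.uniform(-0.1, 0.1) for _ in range(n_inputs + n_cells)]
                for _ in range(n_cells)]
            for g in self.GATES
        }
        # For each gate: one bias per cell (assumption: not shared across cells).
        self.b = {g: [0.0] * n_cells for g in self.GATES}

    def _pre_activation(self, gate, z):
        # Weighted sum plus the cell's own bias, computed for every cell.
        return [sum(w * v for w, v in zip(row, z)) + bias
                for row, bias in zip(self.W[gate], self.b[gate])]

    def step(self, x, h_prev, c_prev):
        z = list(x) + list(h_prev)  # every cell sees all inputs and all previous outputs
        i = [sigmoid(a) for a in self._pre_activation("input", z)]
        f = [sigmoid(a) for a in self._pre_activation("forget", z)]
        o = [sigmoid(a) for a in self._pre_activation("output", z)]
        g = [math.tanh(a) for a in self._pre_activation("candidate", z)]
        # Cell state and output are updated element-wise, one value per cell.
        c = [fk * ck + ik * gk for fk, ck, ik, gk in zip(f, c_prev, i, g)]
        h = [ok * math.tanh(ck) for ok, ck in zip(o, c)]
        return h, c


layer = LSTMLayer(n_inputs=3, n_cells=2)
h, c = layer.step([1.0, 0.5, -0.5], [0.0, 0.0], [0.0, 0.0])
```

With two cells, `layer.b["input"]` has two entries, so in this layout the input gate has one bias per cell, and the layer output `h` has one value per cell, much like a two-neuron perceptron layer.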
