What is the best way to implement a file storage structure for an average (200-250 people) organization?

Good afternoon.

The question has come up of choosing a file storage structure for an organization. The estimated data volume is 4 TB of virtual machine images for a Hyper-V server (later a cluster), 4 TB of file storage (office documents, lots of small reports), and 6 TB of roaming user profiles. The load is 200-250 users. The data doesn't change very often.

I'd like advice from more experienced colleagues: how best to fit this onto hardware? It needs to be reliable, run like clockwork, and provide acceptable speed for working with files over the network. I would like to be able to recover from a failure within 3-4 hours and to be able to scale later.

What I have in mind: one dedicated physical SuperMicro server running Windows Server 2012 R2 (we have a license for it), with RAID1 on a hardware RAID controller for the system and 4 x 10 TB 10,000 rpm SAS drives in RAID10 for data. Deduplication and Shadow Copies are enabled on the volume, and all data is stored on that one volume. In addition, there is a second VM on another Hyper-V server with DFS enabled. On the Hyper-V node, the backup virtual machine is stored on a 20 TB SATA RAID1 array to keep costs down. Alternatively, storage for the user-data volume is presented inside the virtual machine via an iSCSI target from the backup server. Synchronization between the two servers runs through DFS in active-passive mode at night, when the load on the servers is lowest. Then, if the main physical server fails, the DFS root is remapped and users continue to work. Losing 5-6 hours of work on the file shares is not that critical; important data can be pulled from backup later. We would run like this for 2-3 days while the main server is being repaired.
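As a rough sanity check of the capacity figures in this plan, here is a minimal Python sketch. The helper name `usable_tb` and its simplified formulas are assumptions for illustration; it ignores formatting overhead, deduplication savings, and the space taken by shadow copies.

```python
def usable_tb(level: str, disks: int, disk_tb: float) -> float:
    """Approximate usable capacity in TB (hypothetical helper, overheads ignored)."""
    if level == "raid1":
        if disks < 2:
            raise ValueError("RAID1 needs at least 2 disks")
        # A mirror stores one disk's worth of data regardless of copy count.
        return disk_tb
    if level == "raid10":
        if disks < 4 or disks % 2:
            raise ValueError("RAID10 needs an even number of disks, at least 4")
        # Half of the disks hold mirror copies.
        return disks / 2 * disk_tb
    raise ValueError(f"unsupported level: {level}")


# Figures from the post: 4 TB VM images + 4 TB file shares + 6 TB profiles.
planned_tb = 4 + 4 + 6
capacity_tb = usable_tb("raid10", disks=4, disk_tb=10)
fill = planned_tb / capacity_tb
print(f"{planned_tb} TB planned on {capacity_tb:.0f} TB usable: {fill:.0%} full")
```

On these numbers the single data volume would start out about 70% full, which leaves limited headroom for growth.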

I also considered making the second server physical rather than virtual, building it on Storage Spaces in Windows Server 2012 R2 with mirrored (RAID1) 20 TB SATA drives and 2 TB SATA SSDs. Then, if the main server fails, things will not slow to a crawl. Storage Spaces would provide tiering for frequently used files, and the repair window for the main server could be stretched to a week or two. Does this approach make sense? Or what is the best way to organize file storage for a mid-sized office organization?

With 200 users, the load can be substantial. Are you ready to run 10 Gigabit to the storage? Or do you have 6 TB of SSD?
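To put a number on that question, here is a back-of-the-envelope Python sketch of how much bandwidth each user gets when one link to the storage is shared. The function name and the `active_fraction` parameter are illustrative assumptions, and protocol overhead is ignored.

```python
def per_user_mbytes_per_s(link_gbit: float, users: int,
                          active_fraction: float = 1.0) -> float:
    """Fair share of one storage link per active user, in MB/s (decimal units)."""
    active_users = max(1, round(users * active_fraction))
    link_mbytes_per_s = link_gbit * 1000 / 8  # Gbit/s -> MB/s
    return link_mbytes_per_s / active_users


for link_gbit in (1, 10):
    for fraction in (1.0, 0.1):
        share = per_user_mbytes_per_s(link_gbit, 200, fraction)
        print(f"{link_gbit:>2} Gbit/s, {fraction:.0%} of 200 users active: "
              f"{share:.2f} MB/s each")
```

The interesting case is the worst one: if everyone hits the storage at once over a single 1 Gbit/s link, each user gets well under 1 MB/s.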

My advice: think about splitting the storage into several parts by task. Not all 200 people access the same data; it can surely be divided by department without much trouble. This is not about access rights but about the physical placement of data on hardware (disks and servers with their own network connections).

Even if all this hardware sits in one rack, the main thing is to separate the data physically. For this reason, a larger number of 1 TB disks is sometimes justified instead of 3 TB HDDs (anything smaller is already poor value per gigabyte).

You need to decide at which levels you want fault tolerance:

1. Virtualization - host level

2. CSV\Storage

3. Guest OS

4. Data(Content)

Then you need to decide whether fault tolerance is needed in active-active or active-passive mode, and with automatic or manual failover.

DFS: active-active, but replication is at the file level. That is, while a file is locked, it will not be replicated to other nodes. And there is no failover.

Storage Replica: replicates a volume at the block level, in synchronous or asynchronous mode. It doesn't care what data is stored; it just replicates blocks. But switching to another node is handled.

FS cluster: one disk that moves between cluster nodes. But it does have failover.

You can also combine technologies, for example FC with Storage Replica, or DFS + SR.

You can also make a replica of an entire virtual machine at the virtualization level.

Backups are a matter of taste. You can back up at the virtualization level or at the guest OS level. With Hyper-V, backing up through PowerShell with backup deduplication works very well. Guest data can easily be backed up via PowerShell Direct into the VM, straight from the hypervisor.

As for hardware solutions like Synology: you need two devices for HA. Horizontal scaling is expensive, so scaling is mostly vertical only.

1. Analyze how often which data is accessed and where (which folders, etc.). This gives you a breakdown of load by specific branches of the shares and shows where IOPS, throughput, or energy saving matters most.

2. Based on that, work out how to spread the shares across the hardware.

3. A piece of advice: build file servers on the host system rather than on hypervisors, because if something goes wrong all the data remains intact on the disks rather than inside disk images, and, according to statistics, it works faster.

4. Put the server OS on a separate disk. Back up the system once a day with Acronis and send it to the backup server: if the problem is in the system, just roll back to yesterday's backup; if it is the system disk, replace it and restore yesterday's backup.

5. Send backups to another server via DFS, at least in another room and preferably in another building.

6. Once a week, back up critical data with Acronis to the cloud or somewhere else off-site.

7. The rest: SATA/SSD.
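The first step of this list, finding out which branches of the shares carry the data and the activity, can be approximated with a short Python sketch. This is only one possible approach, not the poster's method: the function name `summarize_share` is hypothetical, and it uses modification time as a stand-in for activity, which captures writes but not reads (last-access timestamps are often not maintained on file servers).

```python
import os
import time
from collections import defaultdict


def summarize_share(root: str, recent_days: int = 30) -> dict:
    """Per top-level folder: file count, total bytes, files modified recently."""
    cutoff = time.time() - recent_days * 86400
    stats = defaultdict(lambda: {"files": 0, "bytes": 0, "recent": 0})
    for dirpath, _dirnames, filenames in os.walk(root):
        # Attribute every file to the first path component below the root;
        # files directly in the root are grouped under ".".
        top = os.path.relpath(dirpath, root).split(os.sep)[0]
        for name in filenames:
            try:
                info = os.stat(os.path.join(dirpath, name))
            except OSError:
                continue  # file vanished or access denied: skip it
            entry = stats[top]
            entry["files"] += 1
            entry["bytes"] += info.st_size
            if info.st_mtime >= cutoff:
                entry["recent"] += 1
    return dict(stats)


def print_report(stats: dict) -> None:
    """Print folders sorted by total size, largest first."""
    for folder, s in sorted(stats.items(), key=lambda kv: -kv[1]["bytes"]):
        print(f"{folder:<30} {s['files']:>8} files "
              f"{s['bytes'] / 1e9:>10.2f} GB {s['recent']:>8} recent")
```

Calling `print_report(summarize_share(path))` on the root of a share gives a first cut of which departments or folders are large but cold and which are small but busy, and that is the input for deciding what goes on which disks.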

I am answering not so much the author, who has already chosen a strategy and is obviously biased in favor of Microsoft, as those who will read this later.

Having owned several Synology units over the years, I've seen how flexible and fast a NAS can be. 200 users is not 2000+ (I won't give advice for that scale), but for a mid-sized or slightly larger company, going with Microsoft means not only overhead but also worse performance and flexibility. The advantage of software RAID (on Synology) over hardware RAID on Windows is obvious: there will be no difference in speed, but with hardware RAID you will be hunting for exactly matching drives in 5-6 years and overpaying ...

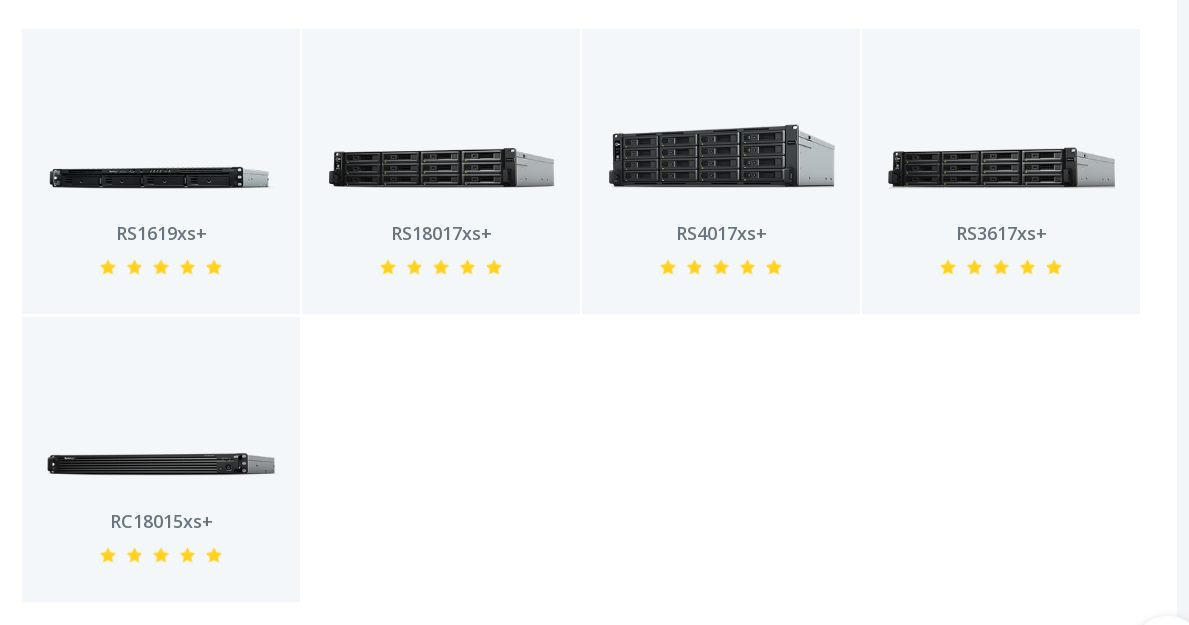

Synology has professional-grade solutions; prices can be found here (I opened the first Russian site that came up). On Synology's own site, several models were suggested for this task (250 users):

but it seems that not all of them are available in Russia (judging by the first link).

The software includes many first-party and third-party applications, from popular web servers, CMS engines, and wikis to the ability to run virtual machines. (Synology is based on Linux.)

But I'll mention one inconspicuous feature that I find very useful. All files deleted or overwritten by users end up in a recycle bin that is accessible only to the administrator, who of course configures how long files are kept in it. I don't know whether this is available on Windows.

You are digging a huge hole for yourself. RAID 10 should not be used at all: everything looks great on paper, but in practice it dies and takes all the data with it irretrievably. Of the 4 disks, it is better to assemble the 3rd one with a hot swap. Supermicro does not fit the requirement "I need it to be reliable and work like clockwork" at all. You need to start from the available budget, the tasks, and the allowable downtime. 200 users may occasionally drop off finished text files, or they may all be rendering 3D together; the hardware requirements will be completely different.

It is not clear from the description: will the VMs be backed up to the storage, or will they run from it?

If there is no money at all but you still want something, besides Synology there is QNAP, which in addition to CIFS offers block access and NFS, can synchronize between devices with built-in tools, and has network "recycle bins" (where everything deleted ends up) that are hidden from users (or visible, as you configure), action logging, and a monitoring console. And since this year it also offers service.

The task is actually not as simple as it seems at first. If you cobble a solution together from junk and sticks, the result will match. For example, be prepared to reboot devices for software updates, which means working nights or weekends. How much do you need that? From experience, a heavily loaded storage system on SATA disks needs all its disks replaced within two years, so factor that into the cost of ownership, along with force majeure.

I would recommend contacting integrators, at least one or two. You will get an idea of what to calculate and how when selecting a solution, and of the existing solutions and their functionality. Be sure to state the allowable downtime and the need for scalability. Consider not only the cost of the solution but also the cost of support, and what the lack of support will cost you in money and in time spent solving software and hardware problems on your own, plus the implementation time.

To calculate performance in IOPS, take into account the performance of the network equipment at the core and access levels, and the equipment's interfaces to the user network and to each other. For block devices you also need to work out whether a SAN is needed, what it costs, and whether you can configure it yourself. Take into account the power requirements and the required runtime without mains power, count the power ports, and plan the PDU and cables with suitable connectors accordingly. Do not forget to calculate how much rack space the equipment will take, and its weight and dimensions (so that you can bring it into the room through the existing doors, and so that after installation it does not fall into the basement along with the racks and the floor slabs).

Consider how you will restore backups: if you store them on virtual machines whose disks die, everything will be lost, and if they are just on disks in a RAID, recovery will take about a week when the RAID dies (and that is if your city has a data recovery shop and there is money for it). Start with a terms of reference (ToR) containing all your requirements: volumes, speed, data types and their sizes, existing links in the core and to users, time to restore from backup, and time to restore the equipment with the backup.

Knowing the speed in the network core and the speed to users, you can roughly calculate the maximum available throughput and the amount of data you can pull from the storage, that is, which disks you need and how many. Note that vendors recommend filling storage to no more than 60%; at higher occupancy, performance may drop significantly. Practice shows that it is very useful to show the integrator's result to the vendor and ask them to check the specification for correctness and for the presence of all necessary licenses. In general, talking to the vendor's technical department is very useful for understanding what will work and how, and whether what you have been offered is really what you need or whether there are other solutions. By the way, after such communication, the illusion "
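Two of the figures mentioned here, the 60% occupancy guideline and the time to restore from backup, can be estimated with a short Python sketch. The function names and the 0.7 link efficiency factor are assumptions for illustration; the 14 TB figure is the sum of the volumes in the original question.

```python
def min_capacity_tb(data_tb: float, max_fill: float = 0.60) -> float:
    """Usable capacity needed so that data_tb stays at or below max_fill."""
    return data_tb / max_fill


def transfer_hours(data_tb: float, link_gbit: float,
                   efficiency: float = 0.7) -> float:
    """Hours to move data_tb over a link; efficiency is an assumed fudge factor."""
    bits = data_tb * 1e12 * 8
    seconds = bits / (link_gbit * 1e9 * efficiency)
    return seconds / 3600


data_tb = 4 + 4 + 6  # VM images + file shares + profiles, from the question
print(f"Capacity for {data_tb} TB at 60% fill: {min_capacity_tb(data_tb):.1f} TB")
for link_gbit in (1, 10):
    hours = transfer_hours(4, link_gbit)
    print(f"Restoring 4 TB over {link_gbit} Gbit/s: about {hours:.1f} h")
```

Even under these optimistic assumptions, restoring a single 4 TB data set over 1 Gbit/s takes longer than a 3-4 hour recovery target, so the network has to be part of the sizing.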

Keep in mind that if you get something wrong or forget something, after implementation you will have to answer questions like "we invested a lot of money in this solution and got nothing but problems, lags, and slowdowns; how will you make up for it?!". If the allocated budget is not enough for a solution that fits the tasks, prepare two options, one that fits the tasks and one that fits the budget, with a list of pros and cons, and say "it can be done cheaper, but then here is what awaits us ...". Then, when things go south, you can at least say that the decision was made by management and that this mess was planned and expected.

It was interesting to read the thread, since the task is described well and many solutions and approaches have been proposed. I'll add my 5 cents too.

1. Much depends on the situation and on the author's experience, on everything from requirements to solutions to budget ... the list goes on. That is probably why it is hard both to give an answer and to make a choice.

For example, if you already have a Windows infrastructure, bringing in Linux-based solutions such as Synology and the like may not be the best idea.

If you can involve an integrator, you will gain experience in working with an integrator, but not the experience of launching such a solution yourself.

Etc.

2. Some of the advice here is very strange; apparently its authors have had experiences that lead them to give it.

For example, about RAID10: it is a perfectly viable array type, with both pluses and minuses.

Or SuperMicro servers. They are probably no worse and no better than Dell or HP servers. They are more affordable, but the service is worse, although a lot depends on the supplier.
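On the RAID10 point, one of the trade-offs is easy to quantify. The Python sketch below enumerates every possible pair of failed disks in a 4-disk array and counts how many pairs lose data. It is a purely combinatorial illustration under the assumption that disks fail independently and that RAID10 is laid out as mirrored pairs (0,1) and (2,3); it says nothing about rebuild stress, controller faults, or read errors during rebuild. All function names are hypothetical.

```python
from itertools import combinations


def raid10_survives(failed: set, disks: int) -> bool:
    """Mirrored pairs (0,1), (2,3), ...: data is lost only if a whole pair fails."""
    return all(not ({a, a + 1} <= failed) for a in range(0, disks, 2))


def raid5_survives(failed: set, disks: int) -> bool:
    """Single parity tolerates one failed disk."""
    return len(failed) <= 1


def raid6_survives(failed: set, disks: int) -> bool:
    """Double parity tolerates two failed disks."""
    return len(failed) <= 2


def fatal_fraction(disks: int, failures: int, survives) -> float:
    """Share of all failure combinations of the given size that lose data."""
    combos = list(combinations(range(disks), failures))
    fatal = sum(1 for combo in combos if not survives(set(combo), disks))
    return fatal / len(combos)


for name, rule in (("RAID10", raid10_survives),
                   ("RAID5", raid5_survives),
                   ("RAID6", raid6_survives)):
    share = fatal_fraction(4, 2, rule)
    print(f"{name}: {share:.0%} of two-disk failures lose data")
```

With 4 disks, one third of double failures are fatal for RAID10, all of them for RAID5, and none for RAID6, at the cost of different write performance and usable capacity. That is the sense in which the array has both pluses and minuses.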

3. This is probably not the best time for SAS disks, because:

- if you need capacity, take SATA

- if you need speed, take SSD

4. In my experience, shadow copies on Windows are a great help for both users and technical support. Many "the file was there this morning and now it's gone" questions can be solved on the spot. Of course, they don't replace backups, but they save a lot of support time.

5. Again from experience: you should not give up virtualization. This layer of abstraction makes many problems easier to solve:

- backups and recovery from them. Having a copy of the data and having a VM with the data are not the same thing; you can usually bring up a VM faster and with less overhead.

- it is easier to do migrations and relocations and to implement HA schemes

6. As has already been said, divide data storage according to the nature of the load. In other words, don't keep all your eggs in one basket.

For example:

- file server: moderate load during working hours

- profiles: high load at the beginning and end of the "shift"

- VMs: "uniform" load throughout the day

This will allow more flexible implementation of access control, backup and recovery, etc.
