Python: how to make many requests and get the responses without errors?

The question is how to run many checks at once and collect the responses, that is, how to build a service for monitoring site pages.

We use the aiohttp Python library together with multithreading. Locally it manages up to 25,000 checks in 2 minutes; I have 8 GB of RAM. Maybe it will be faster on more powerful hardware, I haven't checked that yet, or maybe the code is just bad. In the future the number of checks could grow to 300 thousand ...

I also read this article https://pawelmhm.github.io/asyncio/python/aiohttp/... , but it only covers sending requests, without processing the responses ...

Questions:

1. How can I increase either the number of threads, or just the number of checks running at a time?

2. How do I correctly handle pages that respond slowly? A timeout is the obvious option, but how sound is that solution?

3. How do I process responses correctly if a lot of data arrives at once?

Answer:

It seems to me that the only solution is to use microservices.

Here is how I see it.

A pool of tasks arrives, say 100 thousand. First, I spread them across several servers, for example 5, so each server handles 20 thousand at a time. Each server first writes its results to its own database, and afterwards they can be merged into one common table. The data can also be read directly from the different servers ... When the number of checks grows further, more servers have to be added and the tasks redistributed between them ...
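The distribution step described above can be sketched in a few lines. This is only an illustration under assumptions: the function name `split_into_shards`, the round-robin strategy, and the placeholder `example.com` URLs are not from the original post, and how each shard is actually delivered to a server (queue, HTTP call, database table) is left open.

```python
from typing import List, Sequence


def split_into_shards(tasks: Sequence[str], shard_count: int) -> List[List[str]]:
    """Distribute tasks round-robin across shard_count servers."""
    if shard_count < 1:
        raise ValueError("shard_count must be at least 1")
    shards: List[List[str]] = [[] for _ in range(shard_count)]
    for index, task in enumerate(tasks):
        # Task 0 goes to server 0, task 1 to server 1, and so on in a cycle.
        shards[index % shard_count].append(task)
    return shards


# Hypothetical task pool: 100 thousand page URLs on a placeholder host.
tasks = [f"https://example.com/page/{i}" for i in range(100_000)]
shards = split_into_shards(tasks, shard_count=5)
print([len(shard) for shard in shards])  # [20000, 20000, 20000, 20000, 20000]
```

Round-robin keeps the shards the same size to within one task, so adding a sixth server only means calling the function with `shard_count=6`.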

Why do I need this? Mostly for practice: to solve interesting problems for myself and to learn how to develop microservices.

Maybe someone has already solved this or knows where such tasks are worked through with examples; I would be grateful.

Answer the question

In order to leave comments, you need to log in

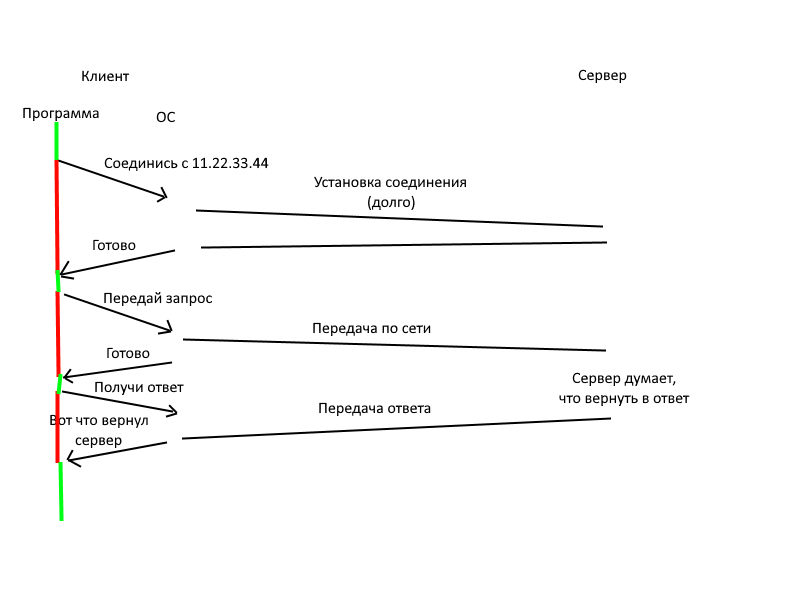

First, NEVER mix multithreading with asynchrony. Asynchrony was invented in order not to make threads.

If I understood correctly, you want to write a program that checks the availability of many sites at the same time.

See:

How can I increase either the number of threads or just the number of checks at a time?

First, I spread them across several servers, for example 5, so each server handles 20 thousand at a time. Each server first writes its results to its own database, and afterwards they can be merged into one common table. The data can also be read directly from the different servers ...
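On a single machine, the number of checks running at a time is usually controlled without threads, for example with an `asyncio.Semaphore`. The sketch below is an assumption-laden illustration, not the code from the question: `check_page` is a stand-in that sleeps instead of calling aiohttp, and `bounded_check`, `run_checks`, and `max_concurrent` are hypothetical names.

```python
import asyncio
from typing import List, Tuple


async def check_page(url: str) -> Tuple[str, int]:
    """Stand-in for a real HTTP check; sleeps instead of doing network I/O."""
    await asyncio.sleep(0.01)
    return url, 200  # placeholder status code, not a real response


async def bounded_check(url: str, limit: asyncio.Semaphore) -> Tuple[str, int]:
    # Only as many coroutines as the semaphore allows are inside this block at once.
    async with limit:
        return await check_page(url)


async def run_checks(urls: List[str], max_concurrent: int) -> List[Tuple[str, int]]:
    limit = asyncio.Semaphore(max_concurrent)
    # gather returns results in the same order as the input URLs.
    return await asyncio.gather(*(bounded_check(url, limit) for url in urls))


if __name__ == "__main__":
    urls = [f"https://example.com/page/{i}" for i in range(100)]
    results = asyncio.run(run_checks(urls, max_concurrent=20))
    print(len(results))
```

Raising `max_concurrent` is then the knob for "more checks at a time"; the practical ceiling depends on the machine, the network, and the sites being checked, so it has to be found by measurement.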

How do I correctly handle pages that respond slowly? A timeout is the obvious option, but how sound is that solution?

    import asyncio

    async def make_request(address):
        ...
        return address, response  # so that later we can tell which request this is the response to

    async def main():
        urls = [...]
        reqs = []
        for url in urls:
            # Note the absence of await: we do not need to wait for completion yet
            reqs.append(make_request(url))
        # gather combines several coroutines into one; here we wait until all requests finish
        results = await asyncio.gather(*reqs)
        # now results is a list[tuple[<address>, <response>]]
        for url, result in results:
            print(...)  # printing can also be done in the handler; it depends on what you want to do with the response

How to process responses correctly if a lot of data comes at a time?

    async with session.get(...) as resp:

I didn't quite understand what exactly you want to achieve, but what prevents you from doing asynchronous multithreading with try/except, specifying timeout and verify=False? Each thread works on its own and does not wait for the previous one, and timeout sets the maximum time to wait for a response from the site.
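The try/except-plus-timeout idea can be sketched with asyncio alone, without threads. Everything named here is an assumption made for illustration: `fetch` simulates a site with `asyncio.sleep` instead of a real `session.get`, and the hosts and delays are made up. With aiohttp itself, the timeout would normally be configured through `aiohttp.ClientTimeout` rather than `asyncio.wait_for`.

```python
import asyncio
from typing import List, Tuple


async def fetch(url: str, delay: float) -> str:
    """Stand-in for a real request; `delay` simulates how slowly a site answers."""
    await asyncio.sleep(delay)
    return f"ok:{url}"


async def check(url: str, delay: float, timeout: float) -> Tuple[str, str]:
    try:
        # wait_for cancels the inner coroutine once `timeout` seconds have passed.
        body = await asyncio.wait_for(fetch(url, delay), timeout=timeout)
        return url, body
    except asyncio.TimeoutError:
        return url, "timeout"
    except Exception as exc:  # any other failure is recorded instead of raised
        return url, f"error: {exc!r}"


async def main() -> List[Tuple[str, str]]:
    # Hypothetical sites: (url, simulated response delay in seconds).
    sites = [("https://fast.example", 0.0), ("https://slow.example", 2.0)]
    return await asyncio.gather(*(check(u, d, timeout=0.2) for u, d in sites))


if __name__ == "__main__":
    for url, outcome in asyncio.run(main()):
        print(url, outcome)
```

Because every failure is turned into an ordinary result, one slow or broken site does not abort the whole `gather` call, and the monitoring service can record "timeout" as a check outcome like any other.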
