Nibble: myth or reality?

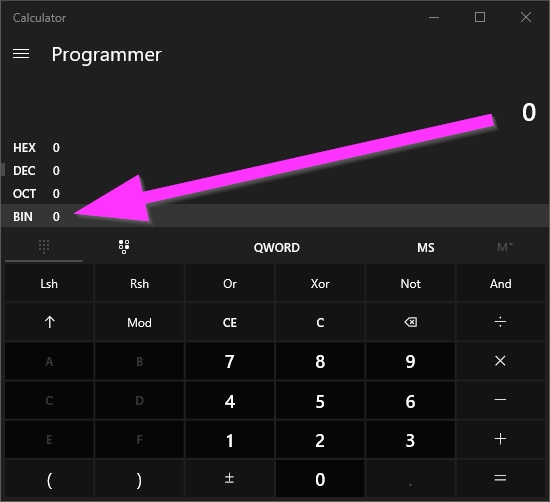

For example, is 0 simply displayed as a single zero with the extra (leading) digits discarded, or is zero really stored as just one 0?
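A quick way to check whether the short "0" is only a display choice is to format the same integer at several fixed widths. This minimal Python sketch (the helper `show_widths` is a hypothetical name for illustration) changes only the text produced by `format`, not the value itself:

```python
def show_widths(value: int) -> dict:
    """Render one integer as binary text at several widths.

    Only the printed text changes; the integer value stays the same.
    """
    return {
        "shortest": format(value, "b"),  # no padding: leading zeros are dropped
        "nibble": format(value, "04b"),  # zero-padded to 4 binary digits
        "byte": format(value, "08b"),    # zero-padded to 8 binary digits
    }

for v in (0, 5, 15):
    print(v, show_widths(v))
```

For 0 this gives `'0'`, `'0000'` and `'00000000'`: three spellings of the same number.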

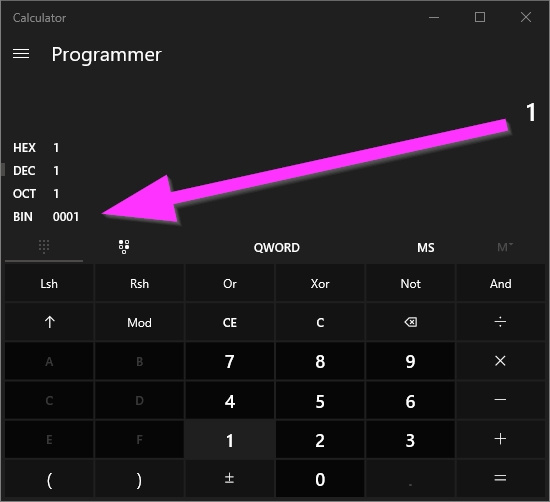

Are 3 extra digits discarded here, or 7?

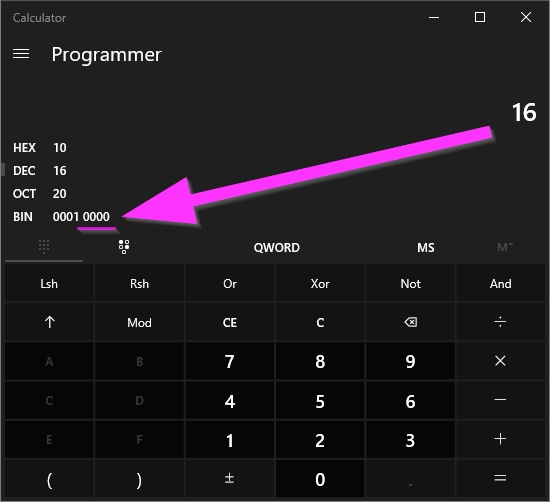

And when the number goes above 15 (a nibble holds 16 values, counting zero), one more nibble gets added.

Is another nibble really added? Or is the nibble on x86, x64 and so on nothing more than an abstraction? Or is small data still processed as a chain of nibbles and zeros?
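In hexadecimal each digit is exactly one nibble, which is why 15 (`0F`) fits in the low nibble and 16 (`10`) needs the high one. A minimal Python sketch (the helper `split_byte` is hypothetical) that splits a byte into its two halves; the byte is 8 bits wide in every case, and for values up to 15 the high nibble is simply zero:

```python
def split_byte(value: int) -> tuple[int, int]:
    """Split a value in 0..255 into (high nibble, low nibble)."""
    if not 0 <= value <= 0xFF:
        raise ValueError("value must fit in one byte (0..255)")
    high = (value >> 4) & 0xF  # upper 4 bits
    low = value & 0xF          # lower 4 bits
    return high, low

for v in (15, 16, 255):
    high, low = split_byte(v)
    # Each nibble corresponds to one hex digit of the byte
    print(f"{v:3d} -> {v:08b} -> high={high:04b} low={low:04b} hex={v:02X}")
```

Going from 15 to 16 does not make the byte longer; the carry just moves into the upper half that was already there.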

Could someone who understands low-level data representation briefly explain how this works? After all, a byte consists of eight bits (binary digits), not of a random scatter of a single zero, a nibble and an octet "depending on the situation."

Is only the octet (the 8-bit byte) actually used, or are nibbles used to save resources, along with the lone zero?
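On x86 and x86-64 the smallest unit a memory address can point to is the byte, so there is no separate nibble storage in hardware; storing two 4-bit values per byte to save space is something a program does itself with shifts and masks. A minimal sketch, assuming the first value goes in the high nibble (`pack_nibbles` and `unpack_nibbles` are hypothetical helpers, not a standard API):

```python
def pack_nibbles(values: list[int]) -> bytes:
    """Pack 4-bit values (0..15) two per byte, first value in the high nibble."""
    if any(not 0 <= v <= 0xF for v in values):
        raise ValueError("every value must fit in 4 bits (0..15)")
    padded = values + [0] * (len(values) % 2)  # pad an odd count with a zero nibble
    return bytes((padded[i] << 4) | padded[i + 1] for i in range(0, len(padded), 2))

def unpack_nibbles(data: bytes, count: int) -> list[int]:
    """Recover the first `count` 4-bit values from packed bytes."""
    out = []
    for byte in data:
        out.append(byte >> 4)   # high nibble first
        out.append(byte & 0xF)  # then low nibble
    return out[:count]

digits = [1, 2, 3, 4, 5]
packed = pack_nibbles(digits)
print(len(digits), "values ->", len(packed), "bytes:", packed.hex())
print(unpack_nibbles(packed, len(digits)))
```

The trade-off: memory use is roughly halved, but every access costs extra shift and mask operations, and the CPU still reads and writes whole bytes.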

P.S. For some reason, I always thought that zero is a whole byte filled with zeros, "0000 0000", and not just a single 0.
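The assumption in the P.S. matches how fixed-width storage works: the space a stored 0 occupies depends on the type of the slot, not on the value. Python's own `int` has no fixed width, so this sketch uses the standard `struct` module to model C-style unsigned slots (the `FORMATS` table and the `stored_zero` helper are hypothetical names):

```python
import struct

# Standard-size formats ("<" = little-endian, no padding): unsigned 8/16/32/64-bit
FORMATS = {"uint8": "<B", "uint16": "<H", "uint32": "<I", "uint64": "<Q"}

def stored_zero(type_name: str) -> bytes:
    """Return the raw bytes used to store 0 in a fixed-width unsigned slot."""
    return struct.pack(FORMATS[type_name], 0)

for name in FORMATS:
    raw = stored_zero(name)
    bits = " ".join(f"{b:08b}" for b in raw)
    print(f"{name:6s} {len(raw)} byte(s): {bits}")
```

A zero in an 8-bit slot is one byte `00000000`; the same zero in a 32-bit slot is four such bytes.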
