Neural network on paper?

Hello, I'm trying to understand how a neural network works. Or rather, how it is arranged internally.

That is, I want, roughly speaking, to write out a simple neural network by hand on a piece of paper.

After all, everything that the program does can be written manually.

What will it look like?

I have read a bunch of articles but still do not understand how to do all this by hand.

Let's take the simplest task:

Let's say there is a square and a circle (of different sizes).

How do I write a neural network on paper that takes an image as input and returns "this is a square" or "this is a circle" as output?

Everywhere I look, the same abstract examples are given, and nowhere is it written how to do it by hand.

I understand that a lot of loops will be needed there, and that in general the whole neural network runs on a bunch of loops.

But I don't understand how to solve such a problem on paper, roughly speaking.

Different levels of abstraction suit different systems. For example, thermodynamics is poorly suited to describing human mental activity, even though a person is a thermodynamic system.

Loops are the wrong way to describe it.

If "abstract examples" do not give you understanding, perhaps the problem is insufficient preparation on your part. After all, they do work for other people. Usually the preface to a textbook or the introductory lecture of a course states the level of knowledge needed to master the material.

Well, in the simplest terms, for example:

Your picture is divided into, say, 9 sectors, and each of them has a state, for example 1 or 0 (you do not influence the state of the sectors, you only observe it).

Let's say the data from these sectors is read by a single neuron. After it has read the state of all the sectors, it must draw a conclusion: is this a circle or a square? It draws that conclusion using a so-called "decision function".

Let's say the decision function is this: if the sum of the values of all sectors is more than 5, it is a square; if less, it is a circle (decision functions can be more complicated, but what matters here is the basic idea of how the answer is reached).

And the essence is this:

You cannot influence the input values themselves, so to influence the result you multiply each input value by a coefficient (a weight). By varying these coefficients you steer the result of the "decision function" towards the required answer. This adjustment is what training is. For a single neuron like this one a simple error-correction rule is enough; backpropagation is only needed to pass the correction back through the layers of a multi-layer network.
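A minimal sketch of the single neuron described above, in Python. The 3x3 grid of 0/1 sectors and the threshold of 5 come from this answer; the function names, the fully filled square, and treating a sum of exactly 5 as a circle are assumptions made only for illustration.

```python
# Illustrative sketch: one neuron looking at a 3x3 picture of 0/1 sectors.
THRESHOLD = 5  # decision boundary taken from the example in this answer

def weighted_sum(sectors, weights):
    """Multiply every sector state by its coefficient and add the results."""
    return sum(s * w for s, w in zip(sectors, weights))

def classify(sectors, weights):
    """Decision function: more than 5 means square, anything else circle."""
    return "square" if weighted_sum(sectors, weights) > THRESHOLD else "circle"

# Assumed inputs: the square fills all 9 sectors, the circle leaves the centre empty.
square = [1, 1, 1,
          1, 1, 1,
          1, 1, 1]
circle = [1, 1, 1,
          1, 0, 1,
          1, 1, 1]

weights = [1.0] * 9  # untrained: every coefficient starts at one

print(classify(square, weights))  # sum is 9, answered as "square"
print(classify(circle, weights))  # sum is 8, so also answered as "square"
```

With all coefficients equal to one the neuron calls both pictures a square, which is exactly why the coefficients have to be adjusted.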

Suppose there are 8 ones at the input (a circle). At the start the coefficients are, for example, all equal to one, so the sum of the input values multiplied by their coefficients is 8. You check whether this matches your scheme, in which a circle gives a value less than 5: it does not, 8 is greater than 5. So you lower the coefficients a little, for example by 0.1 (usually the values are not changed by the full required amount at once but by a small step called the learning rate; there are many other schemes for changing the coefficients). Because you show examples of both a circle and a square, raising by 0.1 the coefficients of the inputs that activated the neuron when the sum is too low and lowering them when it is too high, you eventually arrive at the desired scheme. In it, an input of 8 sectors with ones and 1 central zero gives a weighted sum of, for example, 4.8, which corresponds to a circle, which means the coefficient for every input is 0.6.
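The adjustment just described can be replayed step by step. This is only a sketch under assumptions: one shared coefficient `w` for all inputs (as in the 0.6 result above), a square that fills all 9 sectors, and a simple "lower on a false square, raise on a false circle" rule. The names `examples`, `predict` and `history` are made up for the illustration.

```python
THRESHOLD = 5        # a sum above this means "square"
LEARNING_RATE = 0.1  # size of each small correction

# Assumed training examples: (sector states, expected answer)
examples = [
    ([1, 1, 1, 1, 0, 1, 1, 1, 1], "circle"),  # 8 ones, central zero
    ([1, 1, 1, 1, 1, 1, 1, 1, 1], "square"),  # all 9 sectors filled (assumption)
]

def predict(sectors, w):
    total = w * sum(sectors)  # one shared coefficient for every input
    return "square" if total > THRESHOLD else "circle"

w = 1.0
history = [w]
for _ in range(100):  # upper bound so the loop always terminates
    mistakes = 0
    for sectors, expected in examples:
        answer = predict(sectors, w)
        if answer == expected:
            continue
        mistakes += 1
        # Answered "square" for a circle: the sum is too high, lower the coefficient.
        # Answered "circle" for a square: the sum is too low, raise it.
        step = -LEARNING_RATE if answer == "square" else LEARNING_RATE
        w = round(w + step, 10)  # rounding hides floating-point noise
        history.append(w)
    if mistakes == 0:
        break

print(history)       # the coefficient after each correction
print(w * 8, w * 9)  # weighted sums for the circle and the square
```

On paper this is four corrections: the coefficient goes from 1.0 down to 0.6, at which point the circle sums to about 4.8 and the square to about 5.4, so both are classified correctly.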

P.S. The actual link between a class and a value greater or less than 5 is made by the neuron's threshold (activation) function, but here we set these values ourselves.

On paper, this will not be easy to do. If you really need it, try something simpler, for example a neural network that calculates the sum of two numbers. Then you can recalculate the weights step by step several thousand times and get a working neural network on paper.

The simplest type of neural network is the perceptron (a plain feedforward network), not a convolutional one.

Here is an example of how to do it on paper:

https://habrahabr.ru/post/312450/

https://habrahabr.ru/post/313216/
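The simpler task suggested in this answer, a network that adds two numbers, might look roughly like this. It is a sketch, not the method from the linked articles: a single linear neuron trained with the delta rule, where the training pairs, the learning rate and the number of passes are all assumptions chosen so that each update is easy to redo by hand.

```python
# Assumed training pairs; the target for each pair is simply x1 + x2.
samples = [(1, 2), (2, 1), (3, 5), (4, 2)]
LEARNING_RATE = 0.01
w1, w2 = 0.0, 0.0  # both weights start at zero

for epoch in range(1000):  # 1000 passes over 4 pairs = 4000 small updates
    for x1, x2 in samples:
        target = x1 + x2
        output = w1 * x1 + w2 * x2  # the neuron: a weighted sum, no threshold
        error = target - output
        # Delta rule: move each weight in proportion to the error and its input.
        w1 += LEARNING_RATE * error * x1
        w2 += LEARNING_RATE * error * x2

print(round(w1, 4), round(w2, 4))    # both weights should end up close to 1
print(round(w1 * 10 + w2 * 20, 4))   # prediction for 10 + 20
```

Each inner step is one line of arithmetic, so the first few updates can be checked with a pencil before trusting the rest.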

Example from Matlab.

First, you turn the two-dimensional image into an array of zeros and ones by scanning it (this is roughly the job done by the rods in the retina of the eye).

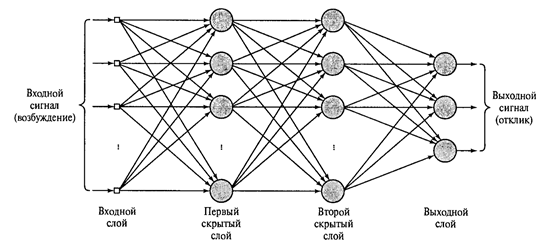
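The scanning step can be sketched in Python as well (the answer itself refers to Matlab, so this is a stand-in, not the original example). The grayscale values, the cutoff of 128 and the function names are hypothetical.

```python
# Hypothetical 3x3 grayscale scan: 0 is black ink, 255 is white paper.
gray = [
    [20,  35, 15],
    [40, 240, 30],
    [25,  10, 45],
]
CUTOFF = 128  # assumed brightness boundary between ink and paper

def binarize(image, cutoff=CUTOFF):
    """Return 1 where the pixel is dark (ink) and 0 where it is light (paper)."""
    return [[1 if pixel < cutoff else 0 for pixel in row] for row in image]

def flatten(grid):
    """Unroll the rows into one flat list, the form a neuron takes as input."""
    return [value for row in grid for value in row]

bits = flatten(binarize(gray))
print(bits)  # [1, 1, 1, 1, 0, 1, 1, 1, 1]
```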

Next, you need to decide on the type of task, for example classification (there are others: clustering, function approximation...). For that task you choose the architecture: the number of layers, the number of neurons, the activation functions.
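To make "choosing the architecture" concrete, here is a small sketch of what those three choices look like once written down. The layer sizes (9 inputs, 3 hidden neurons, 2 outputs), the sigmoid activation and the random starting weights are all assumptions for illustration; the network is untrained, so its output means nothing yet.

```python
import math
import random

random.seed(0)  # fixed seed so the made-up starting weights are repeatable

LAYER_SIZES = [9, 3, 2]  # 9 inputs, 3 hidden neurons, 2 outputs (circle, square)

def sigmoid(x):
    """Activation function: squashes any number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-x))

def make_layer(n_inputs, n_neurons):
    """One weight list per neuron, with one extra weight acting as a bias."""
    return [[random.uniform(-1, 1) for _ in range(n_inputs + 1)]
            for _ in range(n_neurons)]

layers = [make_layer(n_in, n_out)
          for n_in, n_out in zip(LAYER_SIZES, LAYER_SIZES[1:])]

def forward(inputs):
    """Pass the inputs through every layer in turn."""
    values = list(inputs)
    for layer in layers:
        # The constant 1.0 is the input paired with each neuron's bias weight.
        values = [sigmoid(sum(w * v for w, v in zip(neuron, values + [1.0])))
                  for neuron in layer]
    return values

scores = forward([1, 1, 1, 1, 0, 1, 1, 1, 1])
print(scores)  # two numbers between 0 and 1, one per class
```

Changing `LAYER_SIZES` or swapping `sigmoid` for another function is all it takes to try a different architecture; training then adjusts the weights.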

Then comes training.
