Keras LSTM: exploding gradients (loss becomes NaN). How do I get out of this situation?

Greetings to all who decided to drop by!

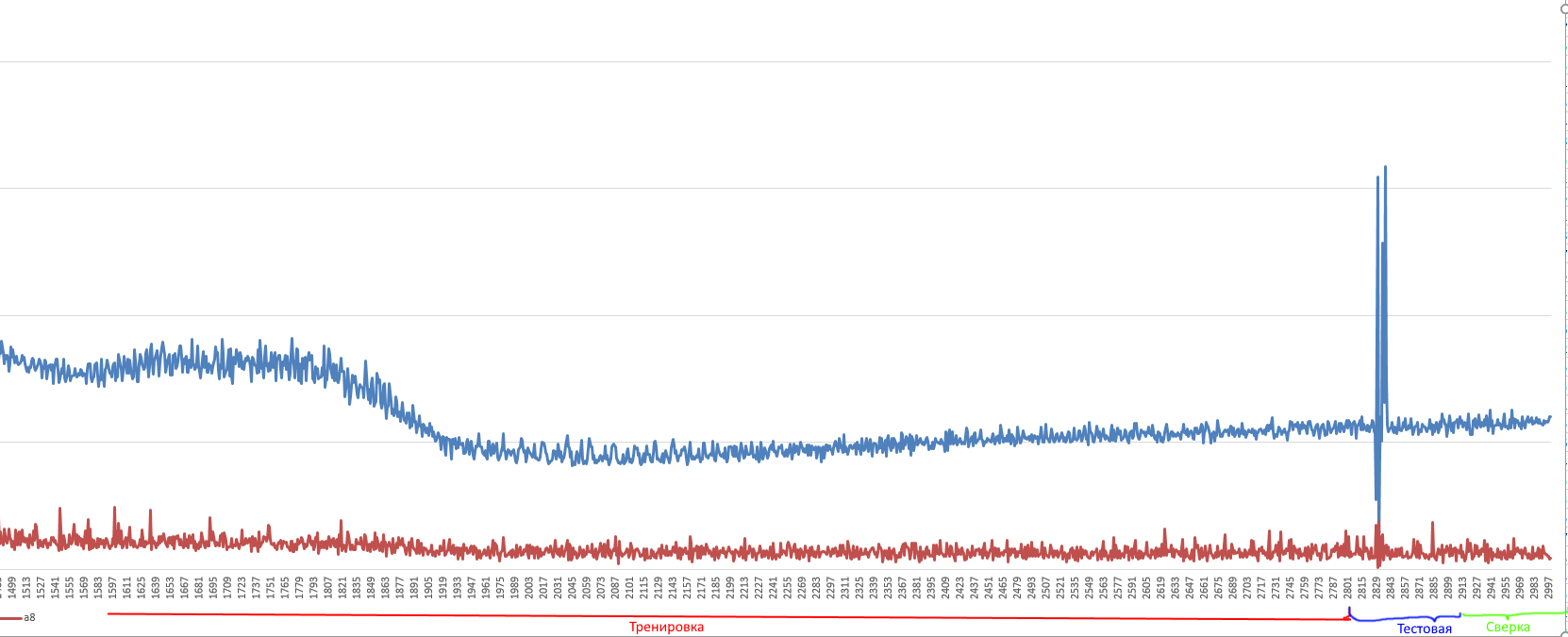

I built a neural network following tutorials from various sites. Everything would be fine, except that every tutorial uses a small dataset, and on it a network with the ReLU activation function works as it should. In my tests, everything works as expected with a dataset of no more than 500 observations. But when I move to a larger dataset for training, the loss goes to NaN. I tried, as recommended, gradient clipping via the `clipvalue` parameter. Yes, it helped with the NaN, but as a result the series was not predicted at all... I played with this parameter, but all to no avail. I went through different optimizers and normalized the data around 0. The dataset has 10 columns of 4000 values each. I checked for nulls; there are none. I also tested on randomly generated values, without any real readings. I don't know what to do anymore.
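Two things from this paragraph can be checked cheaply before touching the model. A null check (e.g. pandas `isnull`) does not catch infinities, and standardizing a column that is constant over the training range divides by zero, which produces inf/NaN. A minimal NumPy sketch, assuming the data is a 2-D array of shape (time, features) and that the scaling statistics come from the training rows only; `standardize` is a hypothetical helper, not part of Keras:

```python
import numpy as np


def standardize(train, data, eps=1e-8):
    """Scale each column of `data` using mean/std computed on `train` only.

    Returns (scaled, mean, std). Columns whose training std is below `eps`
    (i.e. constant) get std = 1, so they are centred instead of divided by ~0.
    """
    train = np.asarray(train, dtype=np.float64)
    data = np.asarray(data, dtype=np.float64)
    # isnull-style checks miss +/-inf; np.isfinite catches both NaN and inf.
    if not (np.isfinite(train).all() and np.isfinite(data).all()):
        raise ValueError("input contains NaN or inf")
    mean = train.mean(axis=0)
    std = train.std(axis=0)
    std = np.where(std < eps, 1.0, std)  # guard against constant columns
    return (data - mean) / std, mean, std
```

Reusing the training mean and std for the test window (`standardize(train, test)`) also keeps test information out of the scaling.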

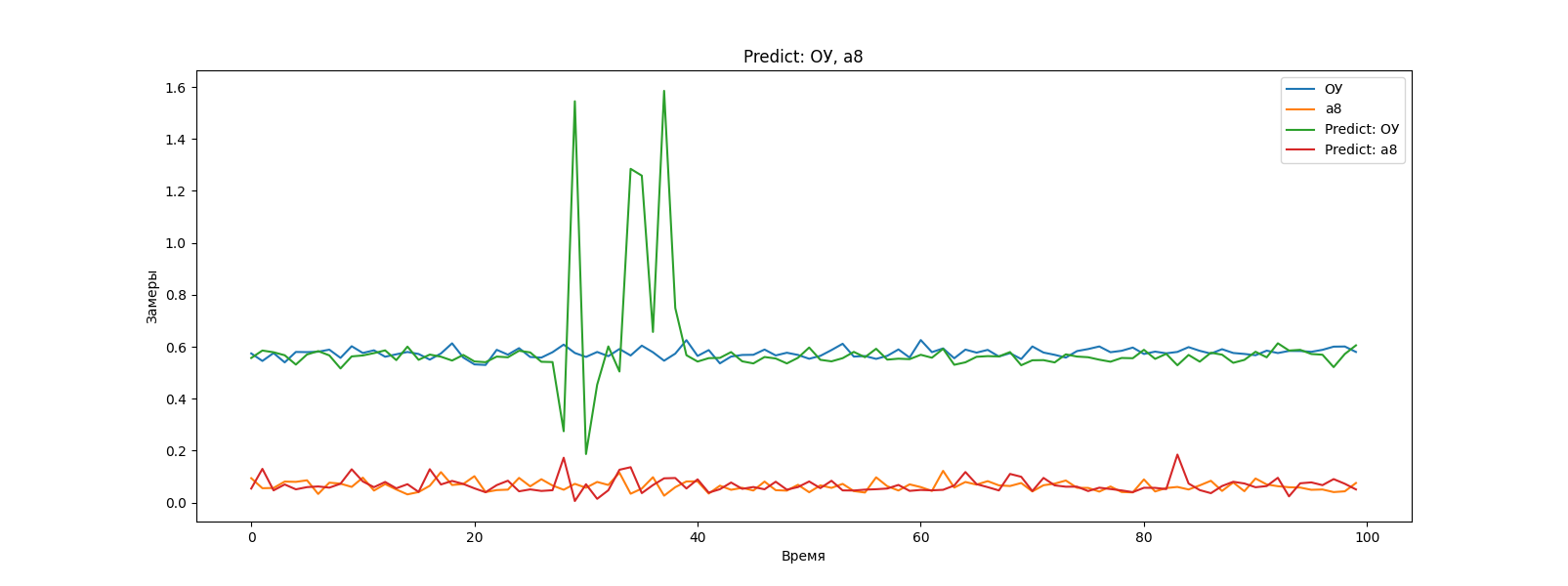

I changed the activation function to tanh, and everything seems to look good: the loss drops by hundredths with each epoch, and the predicted time series looks similar to the original one. Almost exactly the same. Once, after training, I fed in a test sample; right after it the data had a small jump, and the network reproduced this jump almost one-to-one. I have a feeling that it does not predict but simply repeats the input... somehow it does not look believable.
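One way to test the "it just repeats" suspicion is to compare the forecast with a naive persistence baseline that copies the last observed value forward. If the model's error is not clearly lower than the baseline's, it has probably learned little beyond echoing recent inputs. A NumPy sketch; the function names and the skill score (1 minus the ratio of MSEs) are illustrative assumptions, with `history` standing for the input window and `actual`/`predicted` for the true and forecast future:

```python
import numpy as np


def persistence_forecast(history, n_steps_out):
    """Naive baseline: repeat the last observed row for every future step."""
    history = np.asarray(history, dtype=np.float64)
    return np.repeat(history[-1:], n_steps_out, axis=0)


def mse(a, b):
    a = np.asarray(a, dtype=np.float64)
    b = np.asarray(b, dtype=np.float64)
    return float(np.mean((a - b) ** 2))


def skill_vs_persistence(history, actual, predicted):
    """1 - MSE(model) / MSE(baseline): > 0 beats the baseline, <= 0 does not."""
    baseline = persistence_forecast(history, len(actual))
    base_err = mse(actual, baseline)
    if base_err == 0.0:
        raise ValueError("baseline is already perfect; skill is undefined")
    return 1.0 - mse(actual, predicted) / base_err
```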

3000 - whole dataset

2800 - training

100 - input

100 - predicted

[Figure: how the data is split]
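Reading the numbers above as consecutive chronological blocks (an assumption; the post does not say how the rows are ordered), the split can be sketched as plain slicing. Nothing is shuffled, so the test input and target always lie after the training range. `split_series` is a hypothetical helper:

```python
import numpy as np


def split_series(data, n_train=2800, n_in=100, n_out=100):
    """Split rows chronologically into train, test input window, test target."""
    data = np.asarray(data)
    if n_train + n_in + n_out > len(data):
        raise ValueError("not enough rows for the requested split")
    train = data[:n_train]
    test_in = data[n_train:n_train + n_in]
    test_out = data[n_train + n_in:n_train + n_in + n_out]
    return train, test_in, test_out
```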

[Figure: prediction of the last 100 observations]

Such peaks should not have appeared in the predicted series, but again, either the network simply repeated the input test data or I am misunderstanding something. It seems to me that such peaks should not be there.
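To check whether the peaks in the forecast are a delayed copy of the real data, the forecast can be slid along the observed series (input window followed by the true target) to find the delay at which it matches best. A delay of 0 means it tracks the true future; a delay of k > 0 means it looks most like the data starting k steps earlier. A hypothetical NumPy helper, assuming the 100-step input and target windows described above:

```python
import numpy as np


def forecast_delay(test_in, test_out, predicted):
    """Delay k (0..len(test_in)) at which `predicted` best matches the observed data.

    The observed series is test_in followed by test_out. k = 0 compares the
    forecast with the true future; k > 0 compares it with the window that
    starts k steps earlier, i.e. the true data shifted k steps into the past.
    """
    test_in = np.asarray(test_in, dtype=np.float64)
    test_out = np.asarray(test_out, dtype=np.float64)
    predicted = np.asarray(predicted, dtype=np.float64)
    if predicted.shape != test_out.shape:
        raise ValueError("predicted must have the same shape as test_out")
    n_in, n_out = len(test_in), len(test_out)
    series = np.concatenate([test_in, test_out])
    errors = [
        np.mean((predicted - series[n_in - k:n_in - k + n_out]) ** 2)
        for k in range(n_in + 1)
    ]
    return int(np.argmin(errors))
```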

Deadlines are tight, and I don't know what else to try...

Source of the Multiple Parallel Input and Multi-Step Output model: https://machinelearningmastery.com/how-to-develop-...
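The multi-step model expects input samples shaped (n_steps_in, n_features) and targets shaped (n_steps_out, n_features). A sketch of a sliding-window conversion that produces those shapes; `make_windows` is written here for illustration and is not copied from the linked tutorial:

```python
import numpy as np


def make_windows(data, n_steps_in, n_steps_out):
    """Turn a (time, features) array into supervised samples.

    X[i] = data[i : i + n_steps_in]                              (input window)
    y[i] = data[i + n_steps_in : i + n_steps_in + n_steps_out]   (steps to predict)
    """
    data = np.asarray(data, dtype=np.float32)
    n_samples = len(data) - n_steps_in - n_steps_out + 1
    if n_samples <= 0:
        raise ValueError("series too short for these window sizes")
    X = np.stack([data[i:i + n_steps_in] for i in range(n_samples)])
    y = np.stack([data[i + n_steps_in:i + n_steps_in + n_steps_out]
                  for i in range(n_samples)])
    return X, y
```

With 2800 training rows and 100-step windows on both sides, this yields 2800 - 100 - 100 + 1 = 2601 overlapping samples.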

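Part of the model:

```python
# Imports added so the fragment is self-contained (assuming TensorFlow's bundled Keras).
from tensorflow.keras.models import Sequential
from tensorflow.keras.layers import LSTM, RepeatVector, TimeDistributed, Dense

# Encoder: reads n_steps_in time steps of n_features parallel series into one vector.
self.model = Sequential()
self.model.add(LSTM(300, activation='tanh', input_shape=(self.n_steps_in, self.n_features)))
# Repeat the encoded vector once per output step.
self.model.add(RepeatVector(self.n_steps_out))
# Decoder: one hidden state per output step.
self.model.add(LSTM(200, activation='tanh', return_sequences=True))
self.model.add(TimeDistributed(Dense(100, activation='tanh')))
# Linear output layer: one value per feature at each output step.
self.model.add(TimeDistributed(Dense(self.n_features)))
self.model.compile(loss='mse', optimizer='adam')
```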
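The model compiles with a plain `optimizer='adam'`. Keras optimizers also accept `clipvalue`, `clipnorm` (norm of each weight's gradient) and `global_clipnorm` (norm over all gradients together), e.g. `Adam(learning_rate=1e-3, global_clipnorm=1.0)`. The NumPy sketch below contrasts the two ideas: clipping by value caps each element separately and can change the direction of the update, while clipping by global norm only shortens it. Whether this explains why `clipvalue` stopped the model from learning here is a guess, not a diagnosis; the helper names are hypothetical:

```python
import numpy as np


def clip_by_value(grads, clipvalue):
    """Clip every gradient element into [-clipvalue, clipvalue] independently."""
    return [np.clip(g, -clipvalue, clipvalue) for g in grads]


def clip_by_global_norm(grads, clipnorm):
    """Rescale all gradients together if their combined L2 norm exceeds clipnorm.

    The direction of the update is preserved; only its length shrinks.
    """
    total = np.sqrt(sum(float(np.sum(g ** 2)) for g in grads))
    if total <= clipnorm:
        return [g.copy() for g in grads]
    scale = clipnorm / total
    return [g * scale for g in grads]
```

For a gradient `[3, 4]` and a limit of 1, value clipping gives `[1, 1]` (a different direction), while global-norm clipping gives `[0.6, 0.8]` (same direction, length 1).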