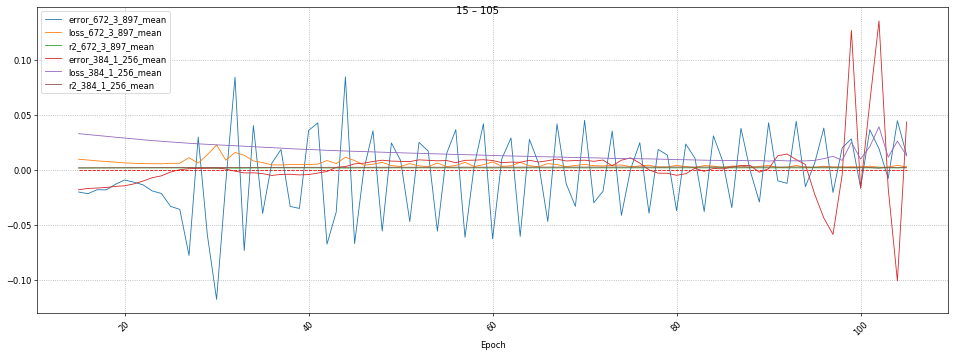

Is a fluctuating error normal when training a neural network, and can the error be negative?

I tested a neural network with various model parameters: the number of fully connected layers, their sizes, and the batch size. The network is validated on the loss, which decreased, but the error began to fluctuate, with the amplitude of the oscillations growing.

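Hence, two questions:

1. Is it normal for the error to fluctuate like this during training?
2. Can the error be negative?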
