
How do I properly organize a PHP parser?

I am writing a parser for a website. The site requires authorization, which makes things much harder for me because I am new to PHP.

<?php

include_once('simple_html_dom.php');

function curl_get($url, $referer = 'http://www.google.com', $fields = [], $headers) {

$ch = curl_init();

curl_setopt($ch, CURLOPT_URL, $url);

curl_setopt($ch, CURLOPT_HEADER, $headers);

curl_setopt($ch, CURLOPT_USERAGENT, "Mozilla/5.0 (Windows NT 10.0; Win64; x64; rv:69.0) Gecko/20100101 Firefox/69.0");

curl_setopt($ch, CURLOPT_REFERER, $referer);

curl_setopt($ch, CURLOPT_RETURNTRANSFER, true);

curl_setopt($ch, CURLOPT_COOKIEJAR, __DIR__ . "/cookie.txt");

$data = curl_exec($ch);

$dom = str_get_html($data);

$token = $dom->find('#big_login input[name="authenticity_token"]');

foreach($token as $tok) {

$fields["authenticity_token"] = $tok->value;

}

curl_setopt($ch, CURLOPT_COOKIEFILE, __DIR__ . "/cookie.txt");

curl_setopt($ch, CURLOPT_REFERER, $url);

curl_setopt($ch, CURLOPT_POST, true);

curl_setopt($ch, CURLOPT_POSTFIELDS, http_build_query($fields));

$data = curl_exec($ch);

curl_close($ch);

return $data;

}

$headers = array(

"Accept: text/html,application/xhtml+xm…plication/xml;q=0.9,*/*;q=0.8",

"Accept-Encoding: gzip, deflate, br",

"Accept-Language: ru-RU,ru;q=0.8,en-US;q=0.5,en;q=0.3",

"Connection: keep-alive",

"Content-Length: 231",

"Content-Type: application/x-www-form-urlencoded",

"Host: *********",

"TE: Trailers",

"Upgrade-Insecure-Requests: 1",

"User-Agent: Mozilla/5.0 (Windows NT 10.0; Win64; x64; rv:69.0) Gecko/20100101 Firefox/69.0"

);

$url_auth = 'https://*******/users/sign_in';

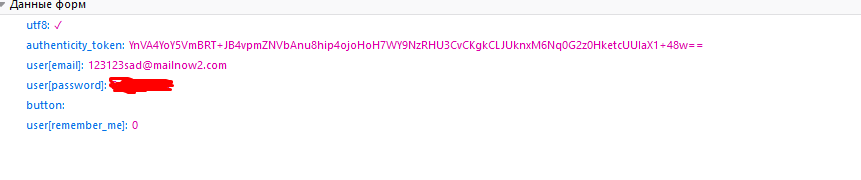

$auth_data = [

"user[email]" => "[email protected]",

"user[password]" => "******",

"user[remember_me]" => "0",

"authenticity_token" => "",

"utf8" => "✓",

"button" => ""

];

$page = curl_get($url_auth, 'http://www.google.com', $auth_data, $headers);

echo $page;

?>

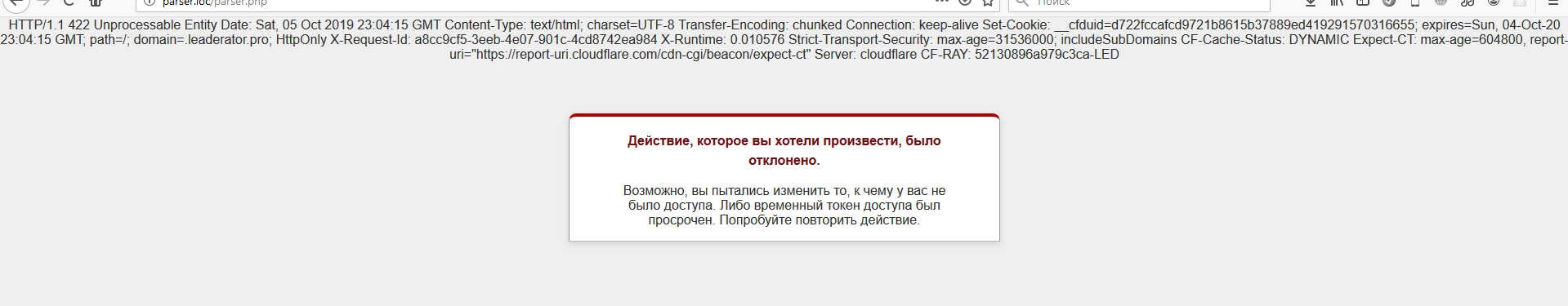

```
HTTP/1.1 422 Unprocessable Entity
Date: Sat, 05 Oct 2019 23:04:15 GMT
Content-Type: text/html; charset=UTF-8
Transfer-Encoding: chunked
Connection: keep-alive
Set-Cookie: __cfduid=d722fccafcd9721b8615b37889ed419291570316655; expires=Sun, 04-Oct-20 23:04:15 GMT; path=/; domain=.leader.pro; HttpOnly
X-Request-Id: a8cc9cf5-3eeb-4e07-901c-4cd8742ea984
X-Runtime: 0.010576
Strict-Transport-Security: max-age=31536000; includeSubDomains
CF-Cache-Status: DYNAMIC
Expect-CT: max-age=604800, report-uri="https://report-uri.cloudflare.com/cdn-cgi/beacon/e..."
Server: cloudflare
CF-RAY: 52130896a979c3ca-LED
```

```
HTTP/1.1 302 Found
Date: Sun, 06 Oct 2019 09:49:22 GMT
Content-Type: text/html; charset=utf-8
Transfer-Encoding: chunked
Connection: keep-alive
X-Frame-Options: SAMEORIGIN
X-XSS-Protection: 1; mode=block
X-Content-Type-Options: nosniff
X-Download-Options: noopen
X-Permitted-Cross-Domain-Policies: none
Referrer-Policy: strict-origin-when-cross-origin
Location: https://********/
Cache-Control: no-cache
Set-Cookie: _finder_session=RkgyQUV1NWdlNis0cmFuL0FQdDBjcWxNMUUzOEljMTZUR0lpVENleitTMTY5OGtmc1gyTzkrWTM3YVE0UkNOV3Y4dDlIdkxqMVpkZ2hiRmJtam4xU1VxU1o5cmg3M0VZV0NLazlwTlg1S0lWNk8zZ21TLy8xZkJoTVBrQVBZNmg5ZTA2ckFDaEhJUkVpajZBWHE3TWhyVS8vVlZTMzg1NldxNHJVQUVxOHFUQXlsT3A3UUVETXNCeFFGeWVIZXJUc0NZV2JQSGhCT2tlYlJFVllXV0U2M0pMbXJiT0JyT0hFUXRLeExxbnlLNkpMZXJLdGRwejFxTXBDMU5oMmpuTWVtVXdySTZ3Vm41NjJmTDUrbkl0Mnc9PS0tUFRZcnVBeW9oZTJZQTRDMW5WVzhQdz09--578e6d6b8b7333177eae8ab28a9713461e441f40; path=/; secure; HttpOnly
X-Request-Id: a01c854a-f4ca-47b3-a93c-f0fb3f30de69
X-Runtime: 0.272714
Strict-Transport-Security: max-age=31536000; includeSubDomains
Strict-Transport-Security: max-age=31536000
Content-Security-Policy: block-all-mixed-content
CF-Cache-Status: DYNAMIC
Expect-CT: max-age=604800, report-uri="https://report-uri.cloudflare.com/cdn-cgi/beacon/e..."
Server: cloudflare
CF-RAY: 5216b9951afd4e64-DME

You are being redirected.
```

"You are being redirected." (The word "redirected" is a link to the site's main page, exactly as happens after a normal login.)
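The two responses above differ mainly in the status line: a 422, and a 302 with a `Location` header, which is what a normal login returns. A minimal sketch for telling them apart in a script, assuming `CURLOPT_HEADER` is enabled (so the raw output starts with the header block) and redirects are not followed. The function names are hypothetical, and treating "302 plus `Location`" as success is an assumption based on this site's behaviour, not a general rule.

```php
<?php
/**
 * Hypothetical helper: split a raw curl response (CURLOPT_HEADER on,
 * CURLOPT_FOLLOWLOCATION off) into status code, Location header and body.
 */
function parse_raw_response(string $raw): array
{
    // The header block ends at the first empty line.
    $parts = preg_split("/\r?\n\r?\n/", $raw, 2);
    $head = $parts[0];
    $body = $parts[1] ?? '';

    $lines = preg_split("/\r?\n/", $head);
    $status = 0;
    // Status line looks like "HTTP/1.1 302 Found" or "HTTP/2 302".
    if (preg_match('#^HTTP/\S+\s+(\d{3})#', $lines[0], $m)) {
        $status = (int) $m[1];
    }

    $location = null;
    foreach (array_slice($lines, 1) as $line) {
        // Header names are case-insensitive.
        if (stripos($line, 'Location:') === 0) {
            $location = trim(substr($line, strlen('Location:')));
        }
    }
    return ['status' => $status, 'location' => $location, 'body' => $body];
}

/** Assumption for this site: a successful login answers with a 302 redirect. */
function looks_logged_in(array $resp): bool
{
    return $resp['status'] === 302 && $resp['location'] !== null;
}
```

If the curl handle is still open, `curl_getinfo($ch, CURLINFO_HTTP_CODE)` returns the status code directly, without parsing the header text.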


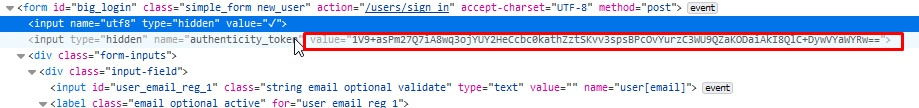

The first curl_exec() runs, and you get the login page.

You parse it and pull out the auth token. And that's it.

Where is the second curl_exec(), with the login form fields already filled in? There is only curl_close(), and that's the end of it.

Also, the Referer of that second request can no longer be google.com; it has to be an address on the site itself.
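A minimal sketch of the two-request login described above, assuming the form field names from the question. The function names are hypothetical, and it swaps `simple_html_dom` for PHP's built-in `DOMDocument`/`DOMXPath` so it has no third-party dependency. Both requests go through the same handle, so the session cookie set by the GET is sent back with the POST.

```php
<?php
// Sketch only: function names are hypothetical; the field names
// (authenticity_token, user[email], ...) come from the question.

/** Return the value of the first input[name="authenticity_token"], or null. */
function extract_auth_token(string $html): ?string
{
    if (trim($html) === '') {
        return null; // DOMDocument::loadHTML() rejects empty input
    }
    $doc = new DOMDocument();
    $prev = libxml_use_internal_errors(true); // real pages are rarely valid HTML
    $doc->loadHTML($html);
    libxml_clear_errors();
    libxml_use_internal_errors($prev);

    $nodes = (new DOMXPath($doc))->query('//input[@name="authenticity_token"]');
    if ($nodes === false || $nodes->length === 0) {
        return null;
    }
    return $nodes->item(0)->getAttribute('value');
}

/** GET the sign-in page, then POST the filled-in form on the same handle. */
function login_with_token(string $signInUrl, array $fields, string $cookieFile): string
{
    $ch = curl_init();
    curl_setopt_array($ch, [
        CURLOPT_RETURNTRANSFER => true,
        CURLOPT_COOKIEFILE     => $cookieFile, // enables the cookie engine, reads saved cookies
        CURLOPT_COOKIEJAR      => $cookieFile, // cookies are written here when the handle is freed
        CURLOPT_USERAGENT      => 'Mozilla/5.0 (Windows NT 10.0; Win64; x64; rv:69.0) Gecko/20100101 Firefox/69.0',
    ]);

    // Request 1: the login page. Its session cookie stays in the handle.
    curl_setopt($ch, CURLOPT_URL, $signInUrl);
    $html = curl_exec($ch);
    if ($html === false) {
        throw new RuntimeException('GET failed: ' . curl_error($ch));
    }
    $token = extract_auth_token($html);
    if ($token === null) {
        throw new RuntimeException('authenticity_token not found');
    }
    $fields['authenticity_token'] = $token;

    // Request 2: submit the form, with the sign-in page itself as Referer.
    curl_setopt_array($ch, [
        CURLOPT_REFERER    => $signInUrl,
        CURLOPT_POST       => true,
        CURLOPT_POSTFIELDS => http_build_query($fields),
    ]);
    $response = curl_exec($ch);
    if ($response === false) {
        throw new RuntimeException('POST failed: ' . curl_error($ch));
    }
    return $response; // $ch is released when it goes out of scope
}
```

With the question's variables this would be called as `login_with_token($url_auth, $auth_data, __DIR__ . '/cookie.txt')`. The XPath query takes the first matching input on the page; if the page has several forms, scoping it to the `#big_login` form (as the question's selector does) is safer.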

Sites usually check request headers (valid values, cookies, etc.) and often rely on JS.

Check that the site you are parsing does not use JS.

If it doesn't, build the request headers properly.
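In the question's code the `$headers` array is passed to `CURLOPT_HEADER`, which is a boolean that only controls whether the *response* headers are included in the output (likely why the header block gets printed along with the page). Request headers belong in `CURLOPT_HTTPHEADER`. A minimal sketch, with hypothetical helper names; which headers to leave to curl is a judgment call, shown here for `Content-Length` (the hard-coded 231 will not match a different body), `Host`, and `Accept-Encoding` (curl only decompresses the body if it negotiated the encoding itself).

```php
<?php
// Hypothetical helpers. CURLOPT_HTTPHEADER and CURLOPT_ENCODING are
// standard PHP curl options.

/** Drop request headers that curl should compute itself. */
function filter_request_headers(array $raw): array
{
    // Content-Length and Host are derived from the actual request;
    // Accept-Encoding is left to CURLOPT_ENCODING so curl can decode the body.
    $computedByCurl = ['content-length', 'host', 'accept-encoding'];
    $kept = [];
    foreach ($raw as $line) {
        // Header name is everything before the first colon.
        $name = strtolower(trim((string) strstr($line, ':', true)));
        if ($name !== '' && !in_array($name, $computedByCurl, true)) {
            $kept[] = $line;
        }
    }
    return $kept;
}

/** Apply browser-like request headers to a curl handle. */
function apply_request_headers($ch, array $raw): void
{
    curl_setopt($ch, CURLOPT_HTTPHEADER, filter_request_headers($raw));
    curl_setopt($ch, CURLOPT_ENCODING, ''); // '' = offer every encoding curl supports, decode automatically
}
```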

If it does, you need to parse on the client side with a JS engine so the page works correctly, and then process the content you get however you like.

For example, with the headless browser PhantomJS or with nightmarejs.

You can analyze the requests with tools like Wireshark; everything will be visible there.
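For requests made by your own PHP script, curl can also log what it sends and receives, which is a lighter option than a packet capture (over HTTPS a capture only shows encrypted payloads unless the TLS keys are supplied). A minimal sketch; the helper names are hypothetical, while `CURLOPT_VERBOSE`, `CURLOPT_STDERR` and `CURLINFO_HEADER_OUT` are standard PHP curl options.

```php
<?php
// Hypothetical helpers around standard curl debugging options.

/** Route curl's verbose log (connection info, request and response headers) to a temp stream. */
function enable_request_log($ch)
{
    $log = fopen('php://temp', 'w+');
    curl_setopt($ch, CURLOPT_VERBOSE, true);
    curl_setopt($ch, CURLOPT_STDERR, $log);
    // Also remember the last request's headers for curl_getinfo($ch, CURLINFO_HEADER_OUT).
    curl_setopt($ch, CURLINFO_HEADER_OUT, true);
    return $log;
}

/** Return everything logged so far. */
function read_request_log($log): string
{
    rewind($log);
    return (string) stream_get_contents($log);
}
```

After `curl_exec($ch)`, printing `read_request_log($log)` and `curl_getinfo($ch, CURLINFO_HEADER_OUT)` shows exactly which headers and cookies the script sent, which can be compared side by side with what the browser sends.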

You can also simulate JavaScript events, as in this description, where I parsed sites with Lazarus. I tested all of this on Linux servers with RabbitMQ and VNC; the setup ran for half a year until I got tired of Chromium and the Lazarus IDE on the server side, with the installa...
