Answer the question

In order to leave comments, you need to log in

How to parse multiple urls from an excel file?

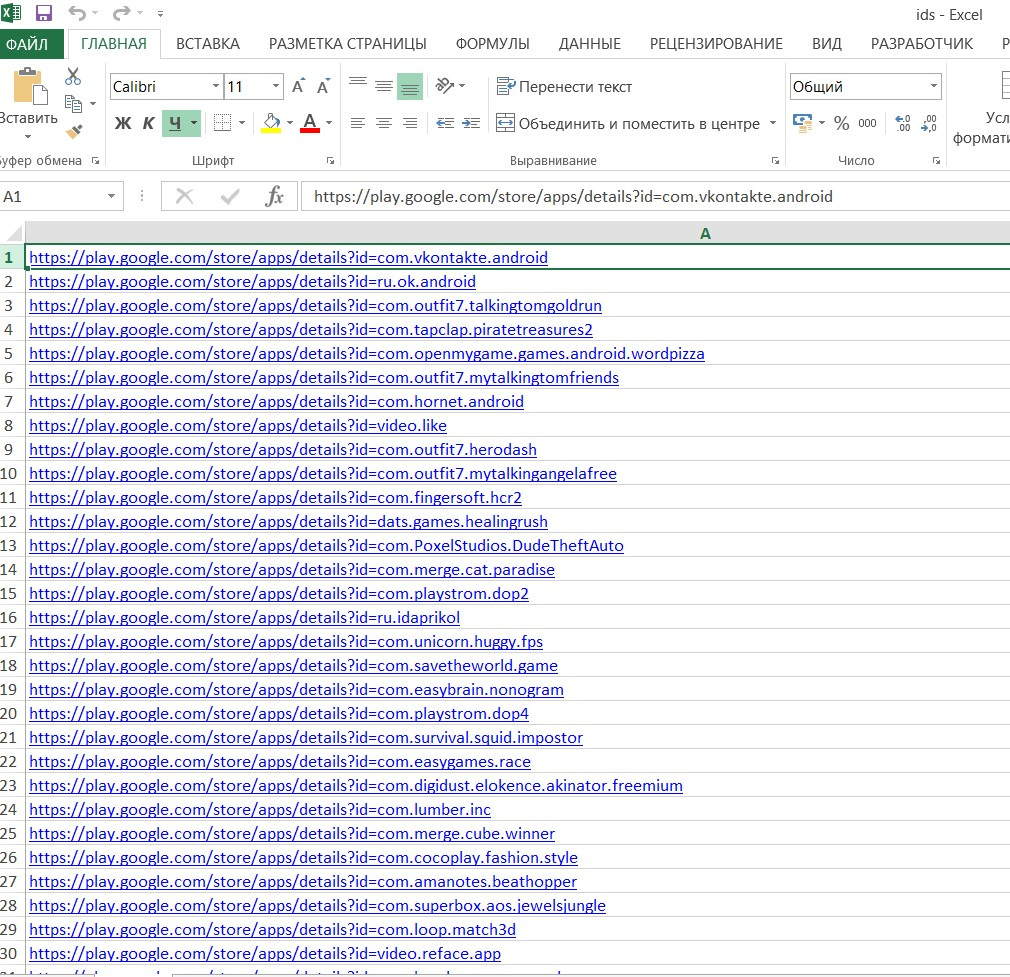

There is an Excel file that contains links in one column.

How to correctly pull out the name of the applications that it gives out by links?

An example of a file in the picture:

I will duplicate here some of the links from the list:

https://play.google.com/store/apps/details?id=com.vkontakte.android

https://play.google.com/store/apps/details?id=ru.ok.android

https://play.google.com/store/apps/details?id=com.outfit7.talkingtomgoldrun

https://play.google.com/store/apps/details?id=com.tapclap.piratetreasures2

https://play.google.com/store/apps/details?id=com.openmygame.games.android.wordpizza

https://play.google.com/store/apps/details?id=com.outfit7.mytalkingtomfriends

https://play.google.com/store/apps/details?id=com.hornet.androidimport requests

from bs4 import BeautifulSoup

import pandas as pd

df = pd.read_excel('ids.xlsx')

url = df

for urlibs in url:

response = requests.get(urlibs)

soup = BeautifulSoup(response.text, 'lxml')

quotes = soup.find_all('h1', class_='AHFaub')

for quote in quotes:

print(quote.text)Answer the question

In order to leave comments, you need to log in

save the document in csv, and process it with any convenient tool

# -*- coding: utf-8 -*-

import pandas as pd

import requests

from bs4 import BeautifulSoup

filename = 'ids.xlsx'

df = pd.read_excel(filename)

url = df.iloc[:, 0].tolist() # Преобразую нулевую колонку к списку

for urlibs in url:

response = requests.get(urlibs)

soup = BeautifulSoup(response.text, 'lxml')

appname = soup.find('h1', class_='AHFaub').text

print(appname)# -*- coding: utf-8 -*-

import pandas as pd

import requests

from bs4 import BeautifulSoup

from concurrent.futures import ThreadPoolExecutor

filename = 'ids.xlsx'

df = pd.read_excel(filename)

urls = df.iloc[:, 0].tolist()

def get_app_name(url):

response = requests.get(url)

soup = BeautifulSoup(response.text, 'lxml')

appname = soup.find('h1', class_='AHFaub').text

print(appname)

# Число воркеров можно изменить на свое усмотрение

with ThreadPoolExecutor(max_workers=16) as executor:

executor.map(get_app_name, urls)Didn't find what you were looking for?

Ask your questionAsk a Question

731 491 924 answers to any question