
How to import huge XML into MongoDB?

There is a heavy XML file (700+ MB, 1.7 million records) with data that needs to be loaded into MongoDB. I have tried many npm packages, console utilities and desktop programs. None of them can actually process a file of this size into the form I need (separate documents, not an XML "tree").

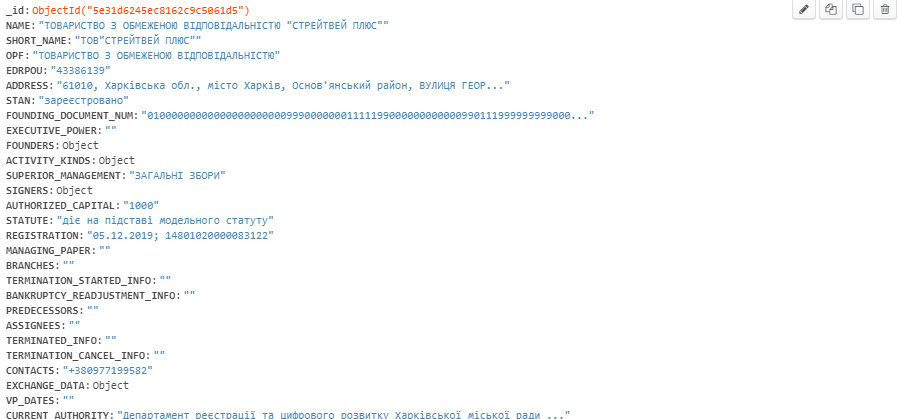

An example of an object that is being written:

I opted for the xml-stream package. It reads the file as a stream and emits JS objects, and everything is fine as long as I only print them to the console. But any asynchronous output of this data causes problems, for example, writing it to Mongo.

When I collect several records in an array and then bulk-insert them into the database, they are assigned identical IDs and an error is thrown. In the current version, nothing happens at all after starting and connecting to the database: no errors, no logs, nothing.
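The question does not include the batching code, so the following is only a guess at one common cause of identical IDs. By default, the MongoDB Node.js driver adds an `_id` to every document object that lacks one, mutating that object. If the same object is pushed into the array repeatedly (for example because it is reused and overwritten for each record), every entry is literally the same object, so all of them carry the same `_id` and the insert fails with a duplicate key error. `fakeInsertMany`, `collectBuggy` and `collectFixed` are hypothetical names for this sketch; `fakeInsertMany` only mimics the driver and does not talk to a database.

```javascript
// Hypothetical stand-in for collection.insertMany(): like the Node.js driver,
// it gives every document object without an _id a new one (mutating the
// object), then rejects the batch if two documents share an _id.
let nextId = 1;
function fakeInsertMany(docs) {
  for (const doc of docs) {
    if (doc._id === undefined) doc._id = nextId++;
  }
  const seen = new Set();
  for (const doc of docs) {
    if (seen.has(doc._id)) {
      throw new Error(`E11000 duplicate key error: _id ${doc._id}`);
    }
    seen.add(doc._id);
  }
  return docs.length;
}

// Buggy: one object is reused for every record, so the batch holds
// N references to the same object and they all end up with the same _id.
function collectBuggy(records) {
  const current = {};
  const batch = [];
  for (const record of records) {
    Object.assign(current, record);
    batch.push(current);
  }
  return batch;
}

// Fixed: each record gets its own object.
function collectFixed(records) {
  return records.map((record) => ({ ...record }));
}

const records = [{ name: "A" }, { name: "B" }, { name: "C" }];
try {
  fakeInsertMany(collectBuggy(records));
} catch (err) {
  console.log(err.message); // E11000 duplicate key error: _id 1
}
console.log(fakeInsertMany(collectFixed(records))); // 3
```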

When I try to write to a file instead, it never gets past creating the file.
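If the file is created but stays empty, one likely culprit (an assumption, since the script is not shown here) is writing without waiting for the stream, or the process ending before the data is flushed. Below is a sketch that lets Node's `pipeline()` from `stream/promises` (Node.js 15 or later) handle backpressure and completion, writing one JSON document per line (NDJSON). `Readable.from()` over an array stands in for the parser output; `toNdjson` and `writeNdjson` are hypothetical helper names.

```javascript
const fs = require("fs");
const os = require("os");
const path = require("path");
const { Readable, Transform } = require("stream");
const { pipeline } = require("stream/promises");

// Converts each incoming object into one line of newline-delimited JSON.
function toNdjson() {
  return new Transform({
    writableObjectMode: true, // accepts JS objects, emits text
    transform(obj, _encoding, callback) {
      callback(null, JSON.stringify(obj) + "\n");
    },
  });
}

// Streams objects into a file. pipeline() respects backpressure and resolves
// only after the write stream has finished, so the caller can safely await it.
async function writeNdjson(objects, filePath) {
  await pipeline(Readable.from(objects), toNdjson(), fs.createWriteStream(filePath));
}

// Demo: in-memory objects stand in for the parser output (hypothetical data).
const outFile = path.join(os.tmpdir(), "records.ndjson");
writeNdjson([{ name: "A" }, { name: "B" }], outFile).then(() => {
  process.stdout.write(fs.readFileSync(outFile, "utf8"));
});
```

A file in this format can then be loaded with `mongoimport`, which by default expects one JSON document per line, for example `mongoimport --db mydb --collection records --file records.ndjson` (the database and collection names are placeholders).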

When writing to the database one record at a time, the first 214065 records are added successfully, and then the connection to Mongo drops (MongoError: Topology is closed, please connect). I suspect either an error in the XML (although this is official data from data.gov.ua) or a problem in the parser.

An example of the write script with several output variants: https://pastebin.com/K3cLFsws

The last object printed to the console before Mongo dropped: https://pastebin.com/fWa7c7mD

Help me figure out how to add this data to the database. I've been scratching my head over this all day.

Update: I solved the problem of getting stuck at 214065 records. It turned out the archiver had extracted the file incorrectly and it was truncated; the full file is 5 GB. xml-stream worked fine and I added the data to the database. But the question of writing to the database in batches to improve performance remains open.
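Since the root cause turned out to be a truncated file, a cheap sanity check before a long import is to look at the end of the file: a complete XML document ends with the root element's closing tag, while a truncated one usually stops mid-record. A sketch using only Node's `fs`; the helper name `endsWithClosingTag` and the `</DATA>` root tag used below are hypothetical, so substitute the actual root element of the data.gov.ua file.

```javascript
const fs = require("fs");

// Hypothetical helper: reads only the last `tailBytes` bytes of a (possibly
// multi-GB) file and checks whether it ends with the root closing tag.
// tailBytes must be larger than the closing tag plus any trailing whitespace.
function endsWithClosingTag(filePath, closingTag, tailBytes = 4096) {
  const { size } = fs.statSync(filePath);
  const length = Math.min(size, tailBytes);
  const buffer = Buffer.alloc(length);
  const fd = fs.openSync(filePath, "r");
  try {
    // Read `length` bytes starting at offset size - length (the file's tail).
    fs.readSync(fd, buffer, 0, length, size - length);
  } finally {
    fs.closeSync(fd);
  }
  return buffer.toString("utf8").trimEnd().endsWith(closingTag);
}
```

For example, `endsWithClosingTag("data.xml", "</DATA>")` returning `false` suggests the extraction was cut short. Comparing the file size with the uncompressed size reported by the archiver is another quick check.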


The size is not that big, but the technique is roughly this:

Use a streaming parser:

https://www.npmjs.com/package/sax

For speed, collect batches of 100 to 1000 documents and insert each batch in a single call:

https://docs.mongodb.com/manual/reference/method/B...
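A minimal sketch of this batching, assuming the parsed records are available as an async iterable (for example an object-mode stream). `insertInBatches` is a hypothetical helper; with the real driver, `insertBatch` would be something like `(docs) => collection.insertMany(docs, { ordered: false })`. Because each insert is awaited inside `for await`, reading pauses while a batch is being written, so memory use stays bounded. Adapting an event-based parser such as xml-stream into an async iterable is not shown.

```javascript
const { Readable } = require("stream");

// Reads objects from an async iterable and passes them to insertBatch in
// groups of batchSize. Awaiting each insert pauses reading from the source.
async function insertInBatches(source, insertBatch, batchSize = 1000) {
  let batch = [];
  let total = 0;
  for await (const doc of source) {
    batch.push(doc);
    if (batch.length >= batchSize) {
      const full = batch;
      batch = []; // start a new array; never reuse one that was already inserted
      await insertBatch(full);
      total += full.length;
    }
  }
  if (batch.length > 0) {
    await insertBatch(batch); // flush the final, partially filled batch
    total += batch.length;
  }
  return total;
}

// Demo: an in-memory stream and a stand-in for collection.insertMany().
const batchSizes = [];
insertInBatches(
  Readable.from([1, 2, 3, 4, 5].map((n) => ({ n }))),
  async (docs) => {
    batchSizes.push(docs.length);
  },
  2
).then((total) => console.log(total, batchSizes)); // 5 [ 2, 2, 1 ]
```

Starting a fresh array after each batch, rather than clearing and reusing the one just handed to `insertMany`, avoids re-sending documents that already received an `_id`. With `ordered: false`, the server keeps processing the remaining documents in a batch after an individual insert fails instead of stopping at the first error.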

Oleg Vdovenko: "...identical IDs are assigned and an error is thrown..." I find that hard to believe. If you insert, say, white noise, do you also get collisions?

If so, try inserting sequentially.

Convert the XML to JSON: plenty of existing code can do it, or you can just write it yourself. (By the way, if I were you, I would do this in Java, .NET, Ruby, or at least Python; it seems to me that any of these alternatives is more reliable.)
