How are multiple outputs implemented in a multilayer perceptron?

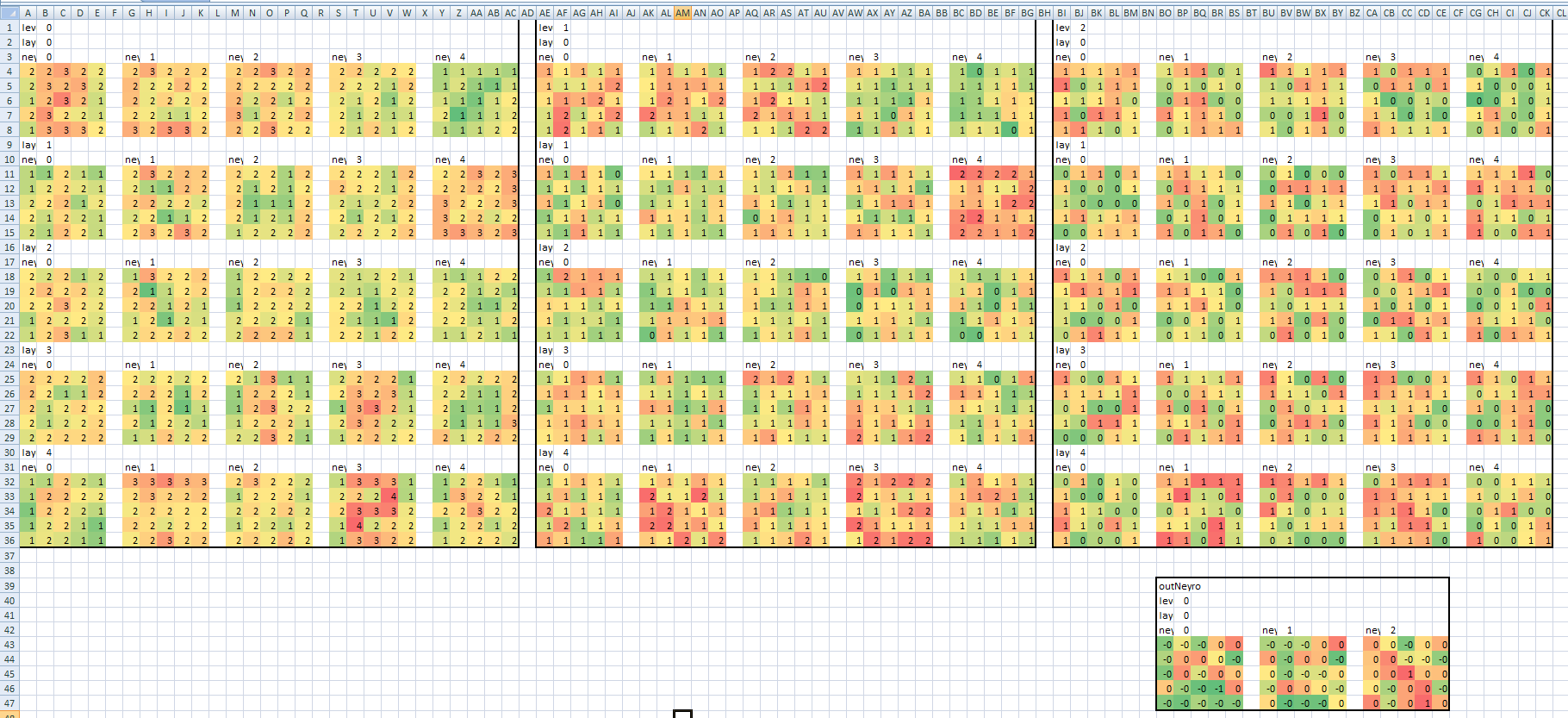

I'm trying to implement a multilayer perceptron with multiple outputs.

I have read a lot of articles about backpropagation. In particular, this page explains the material in a very accessible way, and I based my training implementation on it.

As input I feed three 5x5 matrices depicting "0", "1", and "2". The output layer has three neurons, one per digit.
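For reference, here is a minimal sketch of how such a setup is commonly encoded: each 5x5 matrix is flattened into 25 input values, and each digit gets a one-hot target so that every output neuron has its own target and its own error term. The bitmaps below are hypothetical (the question does not give the actual pixel data), and the names `flatten`, `one_hot`, and `output_deltas` are made up for illustration. The delta formula assumes sigmoid outputs with squared error.

```python
# Hypothetical 5x5 bitmaps for "0", "1", "2"; the real pixel data is not given.
DIGITS = {
    0: ["01110",
        "10001",
        "10001",
        "10001",
        "01110"],
    1: ["00100",
        "01100",
        "00100",
        "00100",
        "01110"],
    2: ["01110",
        "10001",
        "00110",
        "01000",
        "11111"],
}

def flatten(rows):
    """Turn five strings of '0'/'1' into a list of 25 floats."""
    return [float(ch) for row in rows for ch in row]

def one_hot(label, n_classes=3):
    """Target vector: 1.0 for the correct output neuron, 0.0 elsewhere."""
    return [1.0 if k == label else 0.0 for k in range(n_classes)]

inputs = [flatten(DIGITS[d]) for d in sorted(DIGITS)]
targets = [one_hot(d) for d in sorted(DIGITS)]

def output_deltas(outputs, target):
    """Error term of each output neuron (sigmoid activation, squared error)."""
    return [(o - t) * o * (1.0 - o) for o, t in zip(outputs, target)]
```

With several outputs nothing else changes in backpropagation: each output neuron gets its own delta, and a hidden neuron sums the deltas of all output neurons it feeds, weighted by the connecting weights.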

The learning process is as follows:

I built my first fully connected networks following this article, the most understandable one I found. After testing a network with 3 input neurons and 1 output, I moved on to a network with 2 hidden layers and 100 input values (a 10x10 black-and-white image) to recognize digits from 0 to 9, and it all worked. I recommend trying it. Are you using a bias? What are your learning rate and activation function?
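To make those three questions concrete, here is a rough sketch of a small perceptron with several outputs, written in plain Python. It is not the code from the article. It assumes sigmoid activations, squared error, per-sample updates, a bias stored as the last weight of each neuron, and a learning rate of 0.5. All function names and the toy data are made up for illustration.

```python
import math
import random

def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))

def init_layer(n_in, n_out, rng):
    """Each neuron holds n_in weights plus one trailing bias weight."""
    return [[rng.uniform(-0.5, 0.5) for _ in range(n_in + 1)] for _ in range(n_out)]

def forward(net, x):
    """Return the activations of every layer, starting with the input."""
    acts = [x]
    for layer in net:
        prev = acts[-1]
        # zip stops at the shorter list, so the bias (neuron[-1]) is added separately.
        acts.append([sigmoid(sum(w * v for w, v in zip(neuron, prev)) + neuron[-1])
                     for neuron in layer])
    return acts

def train_step(net, x, target, lr):
    """One backpropagation update for one sample; returns the squared error."""
    acts = forward(net, x)
    out = acts[-1]
    # Output layer: one delta per output neuron.
    deltas = [(o - t) * o * (1.0 - o) for o, t in zip(out, target)]
    for li in range(len(net) - 1, -1, -1):
        layer, layer_in = net[li], acts[li]
        # Deltas for the layer below, computed before this layer's weights change.
        below = []
        if li > 0:
            below = [sum(layer[k][j] * deltas[k] for k in range(len(layer))) * a * (1.0 - a)
                     for j, a in enumerate(layer_in)]
        for k, neuron in enumerate(layer):
            for j, a in enumerate(layer_in):
                neuron[j] -= lr * deltas[k] * a
            neuron[-1] -= lr * deltas[k]  # bias update
        deltas = below
    return sum((o - t) ** 2 for o, t in zip(out, target)) / 2.0

def train(samples, sizes, lr=0.5, epochs=5000, seed=0):
    """sizes lists the layer widths, e.g. [3, 4, 3] = 3 inputs, 4 hidden, 3 outputs."""
    rng = random.Random(seed)
    net = [init_layer(a, b, rng) for a, b in zip(sizes, sizes[1:])]
    history = [sum(train_step(net, x, t, lr) for x, t in samples) for _ in range(epochs)]
    return net, history

def predict(net, x):
    """Index of the output neuron with the highest activation."""
    out = forward(net, x)[-1]
    return out.index(max(out))

# Toy data (hypothetical): three distinct inputs, one output neuron per class.
samples = [
    ([1.0, 0.0, 0.0], [1.0, 0.0, 0.0]),
    ([0.0, 1.0, 0.0], [0.0, 1.0, 0.0]),
    ([0.0, 0.0, 1.0], [0.0, 0.0, 1.0]),
]
net, history = train(samples, sizes=[3, 4, 3])
```

The same functions accept more layers through `sizes`. For example, `[100, 30, 30, 10]` would match the 10x10 image with two hidden layers and ten outputs described above, where the hidden widths of 30 are an arbitrary choice. If the network does not learn, a missing bias, a learning rate that is too large, or targets that are not one-hot are worth checking first.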

Article
