Testing Node.js event loop delays?

I'm exploring Node.js event loop delays using `process.hrtime()`, and I'm also calculating percentiles for the measured delays.

Here is my simple test example. I ran this test on a computer with an i7-4770.

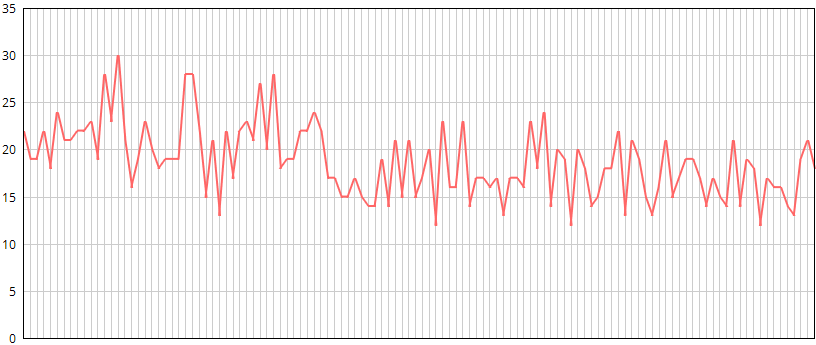

This is what the graph looks like when the script runs on Windows 7 (OS X gives the same kind of graph):

The x-axis (horizontal) is time; the y-axis (vertical) is the 90th-percentile delay in µs (microseconds).

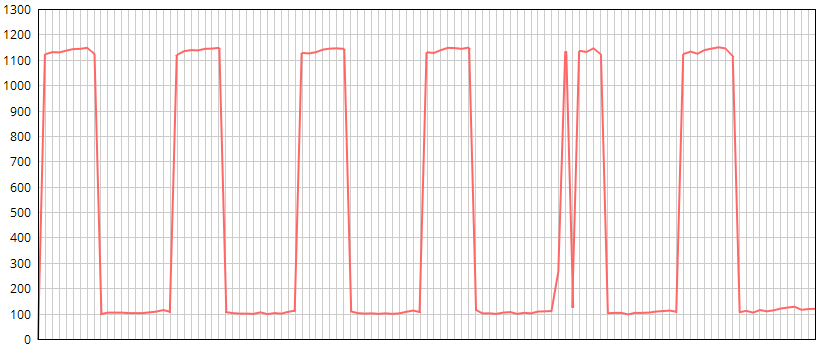

And this is what the graph looks like when the script runs on CentOS 7 and 6:

The x-axis (horizontal) is time; the y-axis (vertical) is the 90th-percentile delay in µs (microseconds). The period of the oscillation is about 2 minutes.

As you can see, delays are generally higher on Linux. But the real problem is the periodic peaks, during which both the average delay and the number of high delays (on the order of 1000 µs) per measurement interval increase. Simply put, on Linux execution periodically slows down. This has nothing to do with the garbage collector.

I'm not sure where exactly the problem lies: in the OS, in V8, or in libuv. Is there a good explanation for this behaviour, for example differences in how timers are implemented on different operating systems? And how can it be fixed?
