Why, when training a neural network, can't you just calculate the error and plug in the weights for which the error equals 0?

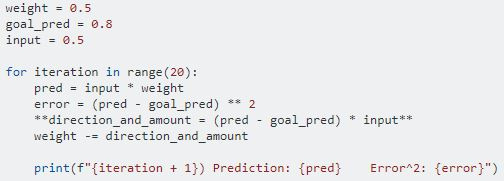

Here is an example:

Why can't this example divide by input instead of multiplying by it when calculating direction_and_amount? Then the required weight would be found by the second iteration.

I'm new to this, so it might sound like a dumb question. Please don't judge too harshly.

By plugging in such weights, you fit the neural network to one specific instance, torn out of the statistics of the rest of the data.

It's like saying that all oranges are exactly the color #fdaf21, so anything colored #fdaf22 or #fdaf20 is no longer an orange but something else.
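A minimal sketch of that point, assuming a one-weight model pred = weight * x and two made-up samples that disagree slightly (the sample values, the 0.05 step size, and the function names are all hypothetical, not taken from the question):

```python
def fit(samples, step, weight=0.0, epochs=50):
    """Apply `step` to every sample in turn and record the weight after each update."""
    history = []
    for _ in range(epochs):
        for x, y in samples:
            error = weight * x - y
            weight = step(weight, error, x)
            history.append(weight)
    return history

def exact_step(weight, error, x):
    # Jump straight to the weight that gives zero error on this one sample.
    return weight - error / x

def small_step(weight, error, x):
    # Move a small amount in the direction that reduces the error.
    return weight - 0.05 * error * x

# Same input, two different targets: no single weight gives zero error on both.
samples = [(2.0, 2.0), (2.0, 4.0)]

exact_history = fit(samples, exact_step)
small_history = fit(samples, small_step)
print(exact_history[-4:])
print(small_history[-4:])
```

Under these assumptions the exact-fit weight keeps jumping between 1.0 and 2.0, whichever sample it saw last, while the small-step weight hovers around 1.5, a compromise between the two samples.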

In this deliberately simplified example, you can.

In more complex networks the desired dependence is unknown, so the weights are adjusted in small steps.
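To make this concrete, here is a minimal sketch, assuming the example in the question is a single-input, single-weight loop where direction_and_amount is (pred - goal_pred) * input. The numbers and the names train, multiply_step and divide_step are made up for illustration:

```python
def train(step, input_value=0.5, goal_pred=0.8, weight=0.0, iterations=1):
    """Run `iterations` updates of a one-weight network: pred = input_value * weight."""
    for _ in range(iterations):
        pred = input_value * weight
        delta = pred - goal_pred
        weight = step(weight, delta, input_value)
    return weight

def multiply_step(weight, delta, input_value):
    # direction_and_amount as in the question: the error scaled by the input.
    return weight - delta * input_value

def divide_step(weight, delta, input_value):
    # The asker's idea: solve input_value * weight = goal_pred for the weight directly.
    # Note that this fails outright when input_value is 0.
    return weight - delta / input_value

print(train(divide_step, iterations=1))
print(train(multiply_step, iterations=1))
print(train(multiply_step, iterations=40))
```

With one weight and one training example, dividing simply solves input * weight = goal_pred, so it lands on the answer in a single update, while multiplying approaches the same weight gradually. With many weights and many examples there is no single division that satisfies every example at once, which is why small steps are used instead.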

It is possible, but sometimes computing the error exactly at each iteration costs about as much as building another parallel neural network or an analytical system.

So if the result is above 90%, people think: "That will do!" :((

By the way, if you build the mathematical model of the neural network correctly from the start, you can eliminate errors altogether.
