Why does printf print the wrong values?

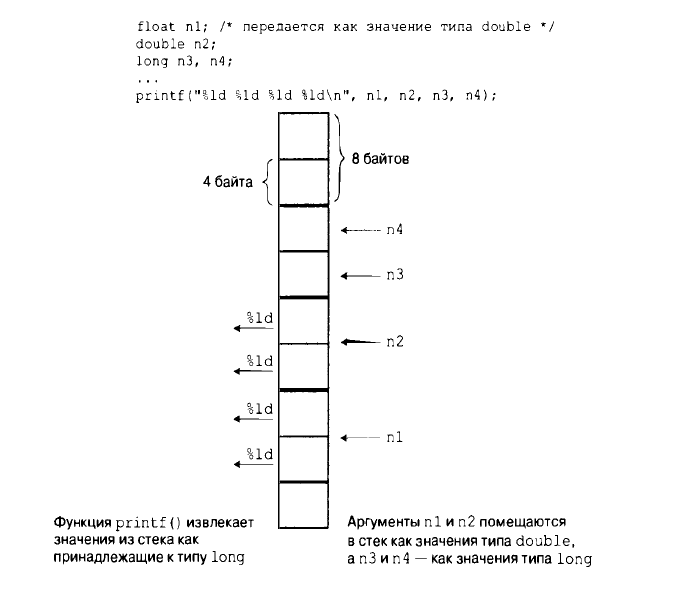

S. Prata's book on the C programming language gives a code example showing that, in certain cases, the output is not what you would expect even for arguments whose specifier is correct*.

The general logic seems clear, but some points in the explanation are hard to follow. Here is the example:

    #define _CRT_SECURE_NO_WARNINGS
    #include <stdio.h>
    #include <conio.h>

    int main(void)
    {
        float a = 3.0;
        double b = 3.0;
        long c = 2000000000;
        long d = 1234567890;

        printf("%ld %ld %ld %ld", a, b, c, d);
        _getch();
        return 0;
    }

I got: 0 1074266112 0 1074266112

Or, in hexadecimal: 0 40080000 0 40080000

This happens for the following reasons.

1. float arguments passed to a variadic function such as printf are promoted to double before they are written to the stack.

2. x86 uses Intel byte order (little-endian: the least significant byte comes first).

3. Floating-point numbers are stored without the leading mantissa bit (which is always 1). Because of Intel byte order, the fields appear in memory as mantissa, then exponent, then sign.

4. For numbers of the form 1.xxx·2⁰, the exponent field is 011…11.

3 = 1.10…0₂ · 2¹, so with the leading bit dropped the mantissa is 10…0.

The exponent field is 011…11 + 1 = 10…0.

With little-endian byte order, double 3.0 looks like this in memory:

• 6 zero bytes: mantissa

• 0000.1000: the low four bits are mantissa, the high four bits are exponent

• 0100.0000: the sign bit and seven more exponent bits

This gives

00.00.00.00.00.00.08.40.

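Each %ld takes 4 bytes (long is 4 bytes here), so printf splits the double into two pieces of memory:

[00.00.00.00] [00.00.08.40]

Integers are little-endian too, so the two pieces read as 0 and 40080000. The two 8-byte doubles therefore fill all four %ld slots, and c and d are never reached.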
