
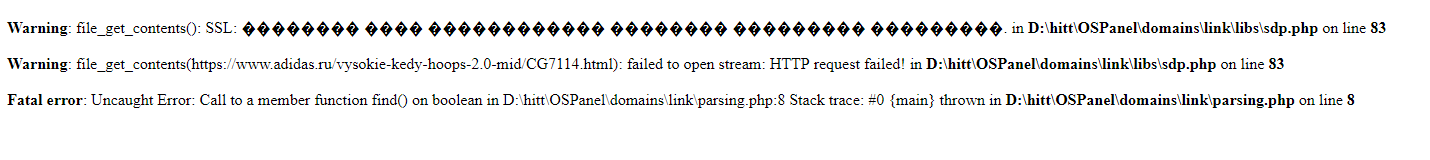

Why doesn't the parser bypass the SSL check?

Hello!

I want to extract a picture and the name from this *website*. I am using the *simple html dom parser* library, but when I try to parse the page, it gives an error. Here is the code:

    $url = 'https://www.adidas.ru/vysokie-kedy-hoops-2.0-mid/CG7114.html';
    $html = file_get_html($url);
    foreach ($html->find('img') as $el) {
        echo $el;
    }


Let me give you an example; it might help. I once needed to parse roughly 11k pages from one site. The site had a limit on the number of requests from one IP, or something like that. What I did was use cURL: you can set request headers in cURL and also use a proxy. There are sites on the Internet with lists of free proxy servers, and I also found a list of headers online. I put all of this into arrays and parsed with cURL: if the response from the site was an error, or no content came back, another header and proxy were taken, and so on until I got the content I needed. I will say right away that you should collect as many proxy servers as possible, because they often worked slowly or did not work at all. If necessary, I can try to find this code, but I warn you in advance that it is a bit of a hack, because it was written for one-time use))
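Roughly, the idea could look like the sketch below. This is not the original code, just a minimal illustration of the approach described above. The function names `fetchOnce` and `fetchWithRotation`, the attempt limit, the timeouts, and the rule "HTTP 200 with a non-empty body counts as success" are all my assumptions, and the proxies and headers are cycled in order simply because that is the easiest thing to show.

```php
<?php
// Hypothetical helper: one cURL request through a given proxy with given headers.
// Returns the body on HTTP 200 with non-empty content, otherwise null.
function fetchOnce(string $url, string $proxy, array $headers): ?string
{
    $ch = curl_init($url);
    curl_setopt_array($ch, [
        CURLOPT_RETURNTRANSFER => true,     // return the body instead of printing it
        CURLOPT_FOLLOWLOCATION => true,     // follow redirects
        CURLOPT_HTTPHEADER     => $headers, // e.g. ['User-Agent: ...', 'Accept-Language: ...']
        CURLOPT_PROXY          => $proxy,   // "host:port" of the proxy
        CURLOPT_CONNECTTIMEOUT => 5,        // free proxies are often dead, so fail fast
        CURLOPT_TIMEOUT        => 15,
    ]);
    $body   = curl_exec($ch);
    $status = curl_getinfo($ch, CURLINFO_HTTP_CODE);

    return ($body !== false && $body !== '' && $status === 200) ? $body : null;
}

// Hypothetical helper: keep trying with the next proxy and the next header set
// until some attempt returns content or the attempt limit is reached.
// $fetch is injectable so the loop can be tried without network access.
function fetchWithRotation(
    string $url,
    array $proxies,
    array $headerSets,
    ?callable $fetch = null,
    int $maxAttempts = 20
): ?string {
    if ($proxies === [] || $headerSets === []) {
        return null; // nothing to rotate through
    }
    $fetch = $fetch ?? 'fetchOnce';

    for ($attempt = 0; $attempt < $maxAttempts; $attempt++) {
        // Cycle through both lists in order, wrapping around at the end.
        $proxy   = $proxies[$attempt % count($proxies)];
        $headers = $headerSets[$attempt % count($headerSets)];

        $body = $fetch($url, $proxy, $headers);
        if ($body !== null) {
            return $body;
        }
    }
    return null; // every attempt failed
}
```

You would call it as `fetchWithRotation($url, $proxies, $headerSets)`, where `$proxies` is an array of `"host:port"` strings and `$headerSets` is an array of header arrays, and then hand the returned HTML to the parser (for example via `str_get_html()` from simple html dom). A `null` result means every attempt failed, so check for that before parsing.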
