
Why does neural network accuracy grow along with the error?

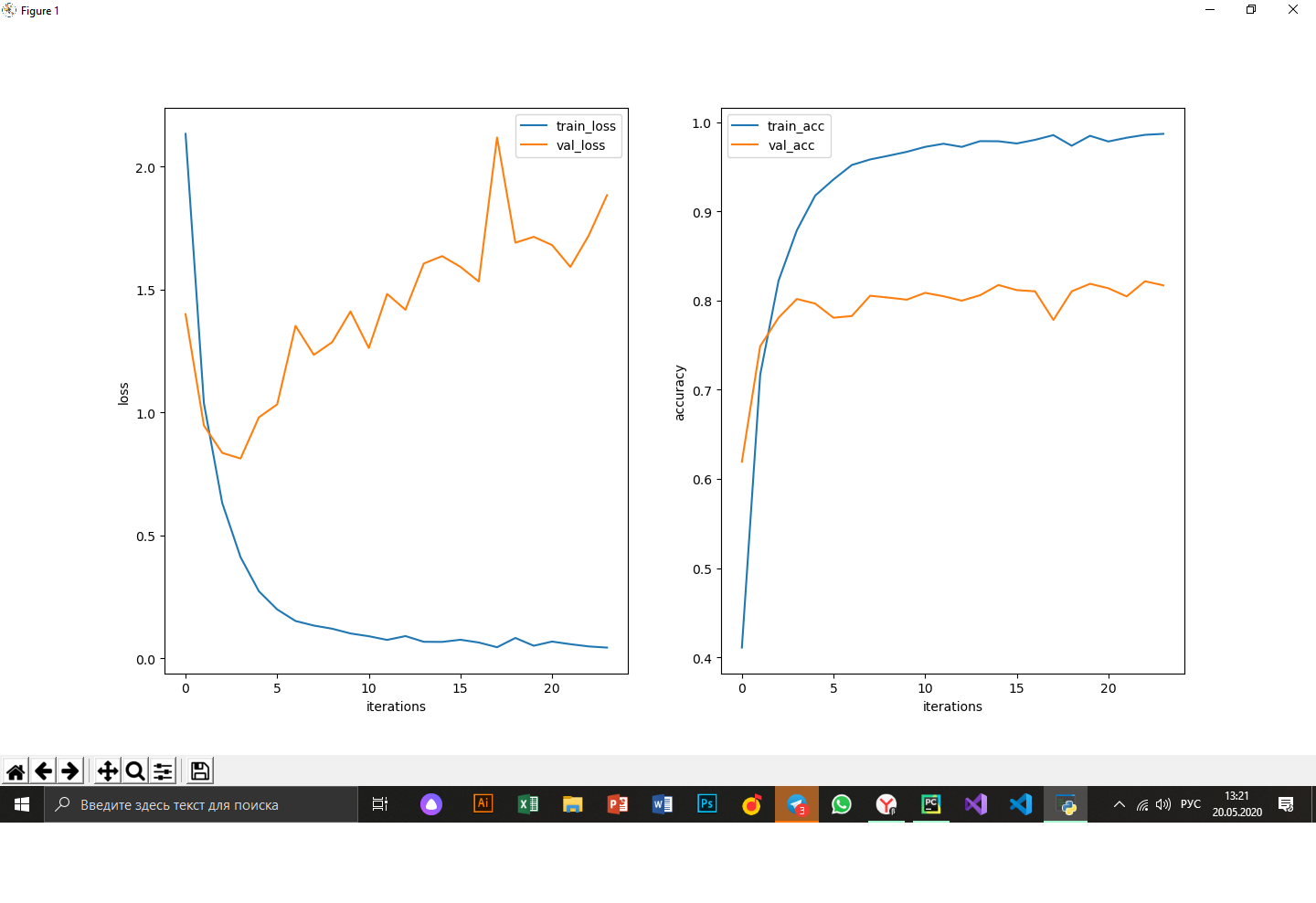

I am solving an image classification problem. To debug the rest of the code, I wrote a simple convolutional neural network with 5 convolutional layers and 1 fully connected (fc) layer. During training I got the plots shown above: training and validation accuracy and error (loss). Why does the error on the validation set grow at the same time as the accuracy on it?
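One way the two metrics can rise together, assuming "error" here means the usual cross-entropy loss: accuracy only checks whether the top-scoring class is right, while cross-entropy also punishes how confident a wrong prediction is. The toy sketch below uses made-up, hypothetical 3-class predictions (not the asker's data) for the same four validation images at two epochs. The later "epoch" gets one more image right, but becomes very confident on the image it still gets wrong, and that single confidently wrong answer dominates the mean loss.

```python
import math


def cross_entropy(probs, label):
    """Negative log-probability assigned to the true class of one sample."""
    return -math.log(probs[label])


def accuracy(batch):
    """Fraction of samples whose highest-probability class equals the label."""
    correct = sum(
        1 for probs, label in batch
        if max(range(len(probs)), key=probs.__getitem__) == label
    )
    return correct / len(batch)


def mean_loss(batch):
    """Average cross-entropy over the batch."""
    return sum(cross_entropy(p, y) for p, y in batch) / len(batch)


# Hypothetical predictions (probabilities, true label) at an earlier epoch:
# a cautious model, 2 of 4 correct, wrong answers only mildly wrong.
epoch_a = [
    ([0.40, 0.30, 0.30], 0),  # correct
    ([0.40, 0.35, 0.25], 0),  # correct
    ([0.40, 0.35, 0.25], 1),  # wrong, true class still gets 0.35
    ([0.40, 0.35, 0.25], 1),  # wrong, true class still gets 0.35
]

# Same images at a later epoch: 3 of 4 correct, but the remaining
# mistake is confidently wrong (true class gets only 0.01).
epoch_b = [
    ([0.90, 0.05, 0.05], 0),  # correct
    ([0.90, 0.05, 0.05], 0),  # correct
    ([0.10, 0.85, 0.05], 1),  # now correct
    ([0.98, 0.01, 0.01], 1),  # confidently wrong
]

for name, batch in (("epoch A", epoch_a), ("epoch B", epoch_b)):
    print(f"{name}: accuracy={accuracy(batch):.2f}  loss={mean_loss(batch):.3f}")
```

Here accuracy goes from 0.50 to 0.75 while the mean loss goes from about 0.98 to about 1.24. An overfitting network tends to show exactly this pattern on validation data: it keeps getting more examples right, but grows overconfident on the ones it gets wrong.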

There are 42 unique classes in total, ~20k images, 20% of which form the validation set.

PS: Correct me if I'm wrong, but the growing validation error indicates that the network is overfitting, right?


Yes, it's definitely overfitting.
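A minimal sketch of how overfitting can be read off logged curves like these: find the epoch with the lowest validation loss, list the epochs where training loss keeps falling while validation loss rises, and compute where patience-based early stopping would halt. The loss values are hypothetical toy numbers chosen for illustration, and the function names are made up for this sketch; they are not from the question or from any particular library.

```python
def best_epoch(val_losses):
    """Index of the epoch with the lowest validation loss."""
    return min(range(len(val_losses)), key=val_losses.__getitem__)


def divergent_epochs(train_losses, val_losses):
    """Epochs where training loss decreased but validation loss increased
    compared with the previous epoch (a typical overfitting signature)."""
    return [
        e for e in range(1, len(val_losses))
        if train_losses[e] < train_losses[e - 1] and val_losses[e] > val_losses[e - 1]
    ]


def early_stop_epoch(val_losses, patience=3, min_delta=0.0):
    """Epoch at which patience-based early stopping would halt, or None.

    Training stops once validation loss has failed to improve by more than
    min_delta for `patience` consecutive epochs.
    """
    best = float("inf")
    waited = 0
    for epoch, loss in enumerate(val_losses):
        if loss < best - min_delta:
            best = loss
            waited = 0
        else:
            waited += 1
            if waited >= patience:
                return epoch
    return None


# Hypothetical per-epoch losses, for illustration only.
train = [1.30, 0.90, 0.70, 0.55, 0.42, 0.31, 0.22, 0.15]
val = [1.20, 0.95, 0.85, 0.82, 0.86, 0.93, 1.01, 1.10]

print("best epoch:", best_epoch(val))
print("diverging epochs:", divergent_epochs(train, val))
print("early stop at:", early_stop_epoch(val, patience=3))
```

With these numbers the best checkpoint is epoch 3, training and validation loss diverge from epoch 4 onward, and early stopping with a patience of 3 would halt at epoch 6. In practice you would keep the weights from the best epoch.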

To answer why the accuracy also increases, I need a bit more information about your project:

Try using dropout; it is quite possible that this is overfitting.
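To show what dropout actually does, here is a minimal pure-Python sketch of "inverted" dropout, the variant most frameworks use. During training, each activation is zeroed with probability `p`, and the surviving activations are scaled by `1/(1-p)` so the expected value stays the same. At inference the input passes through unchanged. The function name and signature are hypothetical and only for illustration.

```python
import random


def dropout(activations, p, training, rng=random):
    """Inverted dropout over a flat list of activations.

    Training: each value is zeroed with probability p, and survivors are
    scaled by 1 / (1 - p) so the expected output equals the input.
    Inference (training=False): the values are returned unchanged.
    """
    if not 0.0 <= p < 1.0:
        raise ValueError("p must be in [0, 1)")
    if not training or p == 0.0:
        return list(activations)
    keep = 1.0 - p
    return [a / keep if rng.random() < keep else 0.0 for a in activations]


rng = random.Random(0)  # fixed seed so the run is reproducible
out = dropout([1.0] * 10, p=0.5, training=True, rng=rng)
print(out)  # each entry is either 0.0 or 2.0
print(dropout([1.0, 2.0, 3.0], p=0.5, training=False))  # unchanged at inference
```

In a real network you would use the framework's layer rather than hand-rolled code, for example `torch.nn.Dropout(p=0.5)` in PyTorch, which is active after `model.train()` and disabled after `model.eval()`. For a small CNN like the one described, a common choice is to place it before the fully connected layer. The best position and rate have to be found experimentally.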
