
Where could mistakes have been made in the project architecture?

What do you think the problem might be?

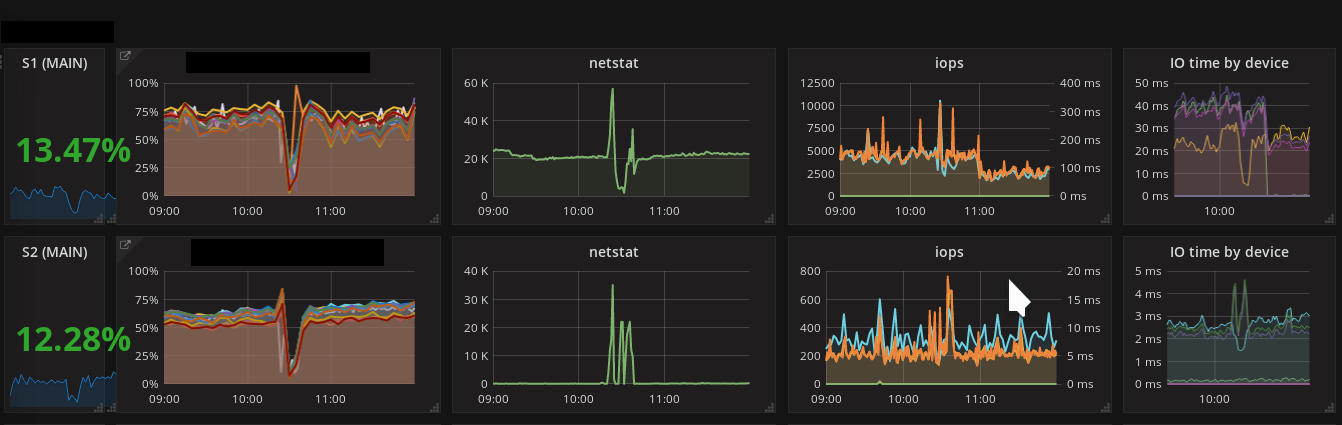

S1: NGINX + PHP-FPM + PostgreSQL

S2: NGINX + PHP-FPM

S1 proxies connections to S2.

At some point the number of established connections rises sharply, and as a result S2 sends additional queries to the database on S1, more than S1 can handle.
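One rough way to reason about where such a spike ends up is to model the PgBouncer pool from the configuration in the question (pool_mode = session, default_pool_size = 30, max_client_conn = 10000). This is a toy model, not PgBouncer's actual scheduling. It assumes all clients share one user/database pair (default_pool_size applies per pair, so the real number of PostgreSQL connections is the pool size times the number of active pairs). The names `PgBouncerPool`, `estimate` and `busy_percent` are hypothetical. In session mode every accepted client holds a server connection until it disconnects, so a surge becomes a queue; in transaction mode only clients currently inside a transaction need one.

```python
from dataclasses import dataclass


@dataclass
class PgBouncerPool:
    max_client_conn: int    # total client connections PgBouncer will accept
    default_pool_size: int  # server connections per user/database pair
    pool_mode: str          # "session" or "transaction"


def estimate(pool: PgBouncerPool, clients: int, busy_percent: int) -> dict:
    """Toy estimate for a single user/database pair (assumption: all
    clients use the same pair).

    busy_percent is a hypothetical share of each client's connected time
    spent inside a transaction. Session mode ignores it, because there a
    client keeps its server connection until it disconnects.
    """
    accepted = min(clients, pool.max_client_conn)
    if pool.pool_mode == "session":
        demand = accepted
    elif pool.pool_mode == "transaction":
        # ceiling of accepted * busy_percent / 100, in integer arithmetic
        demand = -(-accepted * busy_percent // 100)
    else:
        raise ValueError(f"unsupported pool_mode: {pool.pool_mode!r}")
    in_use = min(demand, pool.default_pool_size)
    return {
        "rejected": clients - accepted,   # refused once max_client_conn is hit
        "server_connections": in_use,     # connections PostgreSQL sees for this pair
        "waiting": demand - in_use,       # approximate clients queued in PgBouncer
    }


if __name__ == "__main__":
    for mode in ("session", "transaction"):
        cfg = PgBouncerPool(max_client_conn=10000, default_pool_size=30, pool_mode=mode)
        print(mode, estimate(cfg, clients=500, busy_percent=5))
```

With 500 clients that are each inside a transaction 5% of the time, the session-mode pool is pinned at 30 server connections with 470 clients waiting, while transaction mode needs about 25. The config comment in the question already suggests transaction mode; whether the application can use it depends on whether it relies on session-level state such as SET or advisory locks.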

Kernel parameters (sysctl):

```
net.ipv4.tcp_slow_start_after_idle = 0
net.ipv4.conf.all.forwarding = 1
net.ipv4.ip_forward=1
net.ipv6.conf.all.disable_ipv6 = 1
net.ipv6.conf.default.disable_ipv6 = 1
net.ipv4.ip_local_port_range=1024 65535
net.netfilter.nf_conntrack_max = 562144
net.nf_conntrack_max = 562144
net.ipv4.conf.default.rp_filter = 1
net.ipv4.conf.default.accept_source_route = 0
kernel.sysrq = 0
kernel.core_uses_pid = 1
net.ipv4.tcp_syncookies = 1
kernel.msgmnb = 65536
kernel.msgmax = 65536
kernel.shmmax = 68719476736
kernel.shmall = 4294967296
net.ipv4.ip_forward = 1
net.ipv6.conf.default.forwarding = 1
net.ipv6.conf.all.forwarding = 1
net.ipv4.conf.default.proxy_arp = 0
net.ipv4.conf.all.rp_filter = 1
kernel.sysrq = 0
net.ipv4.conf.default.send_redirects = 1
net.ipv4.conf.all.send_redirects = 0
net.ipv4.tcp_max_tw_buckets = 1440000
net.ipv4.tcp_max_tw_buckets_ub = 26536
net.ipv4.tcp_tw_recycle = 0
net.ipv4.tcp_tw_reuse = 1
fs.file-max = 999999
fs.suid_dumpable = 0
kernel.core_pattern = /dev/null
net.netfilter.nf_conntrack_max = 2048576
net.nf_conntrack_max = 2048576
net.ipv4.tcp_max_syn_backlog = 100000
net.core.somaxconn = 100000
net.core.netdev_max_backlog = 100000
```

Open-file limits (limits.conf format):

```
* soft nofile 100000
* hard nofile 100000
root soft nofile 100000
root hard nofile 100000
```

Process limits:

```
Limit                     Soft Limit           Hard Limit           Units
Max cpu time              unlimited            unlimited            seconds
Max file size             unlimited            unlimited            bytes
Max data size             unlimited            unlimited            bytes
Max stack size            8388608              unlimited            bytes
Max core file size        0                    unlimited            bytes
Max resident set          unlimited            unlimited            bytes
Max processes             514588               514588               processes
Max open files            100000               100000               files
Max locked memory         65536                65536                bytes
Max address space         unlimited            unlimited            bytes
Max file locks            unlimited            unlimited            locks
Max pending signals       514588               514588               signals
Max msgqueue size         819200               819200               bytes
Max nice priority         0                    0
Max realtime priority     0                    0
Max realtime timeout      unlimited            unlimited            us
```

PgBouncer configuration:

```ini
[databases]
* = host=111.111.111.111 port=5432

[pgbouncer]
logfile = /var/log/pgbouncer/pgbouncer.log
pidfile = /var/run/pgbouncer/pgbouncer.pid
listen_addr = *
listen_port = 6432

;Authentication settings:
auth_type = md5
auth_file = /etc/pgbouncer/userlist.txt

;Access
admin_users = postgres
server_reset_query = DISCARD ALL

;In most cases it makes sense to change this to transaction. The connection is then returned to the shared pool when the transaction ends.
;pool_mode = transaction
pool_mode = session

;Pool size settings:
max_client_conn = 10000
default_pool_size = 30

;Do not log connections/disconnections
log_connections = 0
log_disconnections = 0
```
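The sysctl list in the question assigns some keys more than once, and in one case with a different value. A small checker makes such repeats visible. This is a sketch: `repeated_keys` is a hypothetical helper that only parses `key = value` lines as text and does not read or change kernel settings.

```python
def repeated_keys(text: str) -> dict:
    """Map each sysctl key assigned more than once to its values, in file order.

    sysctl.conf treats lines starting with '#' or ';' as comments. When the
    file is loaded with `sysctl -p`, settings are applied top to bottom, so
    the last value in each list is the one left in effect.
    """
    values = {}
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith(("#", ";")):
            continue
        key, sep, value = line.partition("=")
        if not sep:
            continue  # not a key = value line
        values.setdefault(key.strip(), []).append(value.strip())
    return {key: vals for key, vals in values.items() if len(vals) > 1}


if __name__ == "__main__":
    sample = """
net.ipv4.ip_forward=1
net.netfilter.nf_conntrack_max = 562144
kernel.sysrq = 0
net.ipv4.ip_forward = 1
net.netfilter.nf_conntrack_max = 2048576
"""
    for key, vals in repeated_keys(sample).items():
        status = "CONFLICT" if len(set(vals)) > 1 else "duplicate"
        print(f"{status}: {key} -> {vals}")
```

Run over the full list from the question, it reports `net.ipv4.ip_forward` and `kernel.sysrq` (same value repeated) and `net.netfilter.nf_conntrack_max` / `net.nf_conntrack_max` (562144 first, 2048576 later, so 2048576 is what `sysctl -p` would leave in effect).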


Architectural mistakes could be anywhere. Find the bottlenecks and work out what causes them. By the way, are you really asking about architecture errors without showing the architecture itself or giving any information about the system, and hoping for an answer that will solve your problems?
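As one hypothetical starting point for that kind of analysis, the sketch below counts TCP sockets by state in text formatted like Linux `/proc/net/tcp`, optionally filtered by port (5432 is the PostgreSQL port from the PgBouncer config in the question). Comparing counts on S1 before and during a spike would show whether the growth is in ESTABLISHED, TIME_WAIT or another state. `count_tcp_states` is a hypothetical helper name; `/proc/net/tcp` lists IPv4 sockets only (IPv6 sockets are in `/proc/net/tcp6`).

```python
from collections import Counter
from typing import Optional

# Numeric states as defined in the Linux kernel header include/net/tcp_states.h
TCP_STATES = {
    0x01: "ESTABLISHED", 0x02: "SYN_SENT", 0x03: "SYN_RECV",
    0x04: "FIN_WAIT1", 0x05: "FIN_WAIT2", 0x06: "TIME_WAIT",
    0x07: "CLOSE", 0x08: "CLOSE_WAIT", 0x09: "LAST_ACK",
    0x0A: "LISTEN", 0x0B: "CLOSING",
}


def count_tcp_states(text: str, port: Optional[int] = None) -> Counter:
    """Count sockets per TCP state in text formatted like /proc/net/tcp.

    Addresses are hex "IP:PORT" pairs and the state column is hex. If port
    is given, only sockets whose local or remote port matches are counted.
    """
    counts = Counter()
    for line in text.splitlines():
        fields = line.split()
        # Data rows start with "N:"; the header row starts with "sl".
        if len(fields) < 4 or not fields[0].endswith(":"):
            continue
        local_port = int(fields[1].rsplit(":", 1)[1], 16)
        remote_port = int(fields[2].rsplit(":", 1)[1], 16)
        if port is not None and port not in (local_port, remote_port):
            continue
        counts[TCP_STATES.get(int(fields[3], 16), "UNKNOWN")] += 1
    return counts
```

On a live server this could be called as `count_tcp_states(open("/proc/net/tcp").read(), port=5432)`; `ss -tan` from iproute2 shows the same information interactively.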
