What is the best way to start implementing parser logs in Python?

Hello everyone! I have a parser that scrapes news sites.

The task:

Implement logging for the parser and write the logs to a separate table in the database.

Question: what is the best way to add logging to the parser code below?

import requests
import pymysql
import dateparser
import schedule
import time
from bs4 import BeautifulSoup

# Fetch the HTML of a page.
def get_html(url):
    r = requests.get(url)
    return r.text

# Collect article links from a listing page.
def get_resource_links(resource_page, links_rule, resource_domain):
    resource_links = []
    soup = BeautifulSoup(resource_page, 'lxml')
    resource_links_blocks = soup.find_all(links_rule[0], {links_rule[1]: links_rule[2]})
    for resource_link_block in resource_links_blocks:
        a_tag = resource_link_block.find("a")
        if a_tag:
            link = a_tag.get("href")
            # Skip <a> tags without an href, otherwise the concatenation fails.
            if link:
                resource_links.append(resource_domain + link)
    return resource_links

# Extract the title from an article page.
def get_item_title(item_page, title_rule):
    soup = BeautifulSoup(item_page, 'lxml')
    item_title = soup.find(title_rule[0], {title_rule[1]: title_rule[2]})
    # Return an empty string when the title tag is missing.
    return item_title['content'] if item_title is not None else ''

# Extract the publication date from an article page.
def get_item_datetime(item_page, datetime_rule, datetime1_rule):
    soup = BeautifulSoup(item_page, 'lxml')
    item_datetime = soup.find(datetime_rule[0], {datetime_rule[1]: datetime_rule[2]})
    if item_datetime is not None:
        item_datetime = dateparser.parse(item_datetime.text, date_formats=['%d %B %Y %H'])
    elif len(datetime1_rule) == 3:
        # Fall back to the second rule when the first one finds nothing.
        item_datetime = soup.find(datetime1_rule[0], {datetime1_rule[1]: datetime1_rule[2]}).text
        item_datetime = dateparser.parse(item_datetime, date_formats=['%d %B %Y %H'])
    else:
        item_datetime = ''
    return item_datetime

# Extract the article text from an article page.
def get_text_content(item_page, text_rule, text1_rule):
    soup = BeautifulSoup(item_page, 'lxml')
    item_text = soup.find(text_rule[0], {text_rule[1]: text_rule[2]})
    if item_text is not None:
        item_text = item_text.text
    elif len(text1_rule) == 3:
        # Fall back to the second rule when the first one finds nothing.
        item_text = soup.find(text1_rule[0], {text1_rule[1]: text1_rule[2]}).text
    else:
        item_text = ''
    return item_text

# Connect to the database.
connection = pymysql.connect(host='localhost',
                             user='root',
                             password='',
                             db='news_portal',
                             charset='utf8',
                             autocommit=True)
cursor = connection.cursor()

# Load the content extraction rules from the resource table.
cursor.execute('SELECT * FROM `resource`')
resources = cursor.fetchall()

# Loop over the rows of the result tuple.
for resource in resources:
    resource_name = resource[1]
    resource_link = resource[2]
    resource_url = resource[3]
    link_rule = resource[4]
    title_rule = resource[5]
    datetime_rule = resource[6]
    datetime1_rule = resource[7]
    text_rule = resource[8]
    text1_rule = resource[9]
    print(resource_name)
    resource_domain = resource_link
    # Split the comma-separated rules into lists.
    links_rule = link_rule.split(',')
    title_rule = title_rule.split(',')
    datetime_rule = datetime_rule.split(',')
    datetime1_rule = datetime1_rule.split(',')
    text_rule = text_rule.split(',')
    text1_rule = text1_rule.split(',')
    resource_page = get_html(resource_url)
    resource_links = get_resource_links(resource_page, links_rule, resource_domain)
    print('number of links: ' + str(len(resource_links)))
    # Process every article link.
    for resource_link in resource_links:
        item_page = get_html(resource_link)
        item_title = get_item_title(item_page, title_rule)
        item_datetime = get_item_datetime(item_page, datetime_rule, datetime1_rule)
        item_text_content = get_text_content(item_page, text_rule, text1_rule)
        try:
            # Write the news item to the database.
            sql = "insert into items (`item_link`,`item_title`,`item_datetime`,`item_text_content`) values (%s,%s,%s,%s)"
            cursor = connection.cursor()
            cursor.execute(sql, (str(resource_link), str(item_title), str(item_datetime), str(item_text_content)))
            print('Record successfully written to the database!')
        except pymysql.err.IntegrityError:
            print('Duplicate entry error!')
            break
        except pymysql.err.InternalError:
            print('Database error!')
            break
connection.close()
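
One possible way to approach the task, as a minimal sketch rather than a definitive implementation: replace the print calls with the standard logging module and attach a custom handler that inserts each record into a log table. The table name parser_log, its columns, the DatabaseLogHandler class and its placeholder argument are all assumptions made for illustration. sqlite3 stands in for pymysql only so that the sketch runs without a MySQL server.

```python
import logging
import sqlite3  # stand-in for pymysql so the sketch runs without a MySQL server
import time


class DatabaseLogHandler(logging.Handler):
    """Hypothetical handler: writes each log record through a DB-API connection."""

    def __init__(self, connection, table="parser_log", placeholder="%s"):
        super().__init__()
        self.connection = connection
        marks = ", ".join([placeholder] * 4)
        # The table name is set in our own code, never taken from user input.
        self.sql = (
            f"INSERT INTO {table} (created, level, source, message) "
            f"VALUES ({marks})"
        )

    def emit(self, record):
        try:
            created = time.strftime(
                "%Y-%m-%d %H:%M:%S", time.localtime(record.created)
            )
            cursor = self.connection.cursor()
            cursor.execute(
                self.sql,
                (created, record.levelname, record.name, record.getMessage()),
            )
            self.connection.commit()
        except Exception:
            # Let logging report the failure instead of crashing the parser.
            self.handleError(record)


# Assumed table layout; adjust the column types for MySQL.
connection = sqlite3.connect(":memory:")
connection.execute(
    "CREATE TABLE parser_log (created TEXT, level TEXT, source TEXT, message TEXT)"
)

logger = logging.getLogger("news_parser")
logger.setLevel(logging.INFO)
# sqlite3 uses "?" placeholders; with pymysql keep the default "%s".
logger.addHandler(DatabaseLogHandler(connection, placeholder="?"))

logger.info("number of links: %d", 12)
logger.error("duplicate entry for %s", "https://example.com/news/1")
```

With the pymysql connection from the script, the handler would be created as DatabaseLogHandler(connection), and each print call would become logger.info or logger.error.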

What is the actual problem?

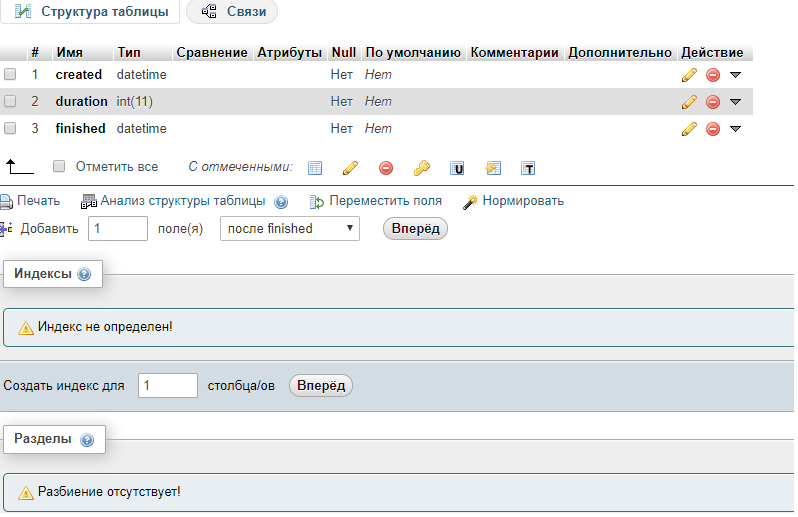

# At the start of the script
created = time.strftime('%Y-%m-%d %H:%M:%S')
start_time = time.time()

# At the end of the script
duration = time.time() - start_time  # run time in seconds; convert as needed
finished = time.strftime('%Y-%m-%d %H:%M:%S')
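
The timing values above could then be written as one row per parser run. The following is a minimal sketch under stated assumptions: the parser_runs table, its columns, the write_run_log helper and the items_saved counter are hypothetical, and sqlite3 stands in for pymysql only so that the example runs on its own.

```python
import sqlite3  # stand-in for pymysql so the sketch runs without a MySQL server
import time


def write_run_log(connection, created, finished, duration, items_saved,
                  placeholder="%s"):
    """Hypothetical helper: insert one summary row for a parser run."""
    marks = ", ".join([placeholder] * 4)
    cursor = connection.cursor()
    cursor.execute(
        "INSERT INTO parser_runs (created, finished, duration, items_saved) "
        f"VALUES ({marks})",
        (created, finished, round(duration, 3), items_saved),
    )
    connection.commit()


# Assumed table layout; adjust the column types for MySQL.
connection = sqlite3.connect(":memory:")
connection.execute(
    "CREATE TABLE parser_runs "
    "(created TEXT, finished TEXT, duration REAL, items_saved INTEGER)"
)

# At the start of the script
created = time.strftime('%Y-%m-%d %H:%M:%S')
start_time = time.time()

items_saved = 0
for _ in range(3):  # stands in for the parsing loop
    items_saved += 1

# At the end of the script
duration = time.time() - start_time
finished = time.strftime('%Y-%m-%d %H:%M:%S')

# sqlite3 uses "?" placeholders; with pymysql keep the default "%s".
write_run_log(connection, created, finished, duration, items_saved,
              placeholder="?")
```

In the real script the counter would be incremented after each successful insert into items, and write_run_log would be called just before connection.close().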
