
SAN: choosing a storage system, switches, etc.?

Hello everyone.

Friends asked me for help because their admin went on vacation and did not come back (he went to Malaysia for a rest and sent an SMS saying he does not plan to return for now).

What we have:

5 self-built boxes used as NAS storage (2-4 TB) for 15 self-built servers. (They are getting rid of all of it.)

A proper 19-inch, 42U rack has been ordered. We are waiting for the first 5 HP ProLiant G7 servers to arrive; I don't have the exact model at hand.

The friends decided to connect all the servers to a common storage system (one to start with; they will buy a second later).

There are several questions on which I would like to get advice from you:

1) SAS or FC?

2) Which storage model to choose? (I have been out of this topic lately; I was working on somewhat different things.)

3) Which switch model to take for this (they will buy 2)?

Budget for switches and other small items: $2-8k

Budget for storage: $28-60k without disks (they have about 80 new 1-2 TB SATA drives in stock, so storage with SATA support is preferable).

I hope you can understand.

P.S. Thanks for any karma pluses or minuses; answers to the question are guaranteed a + (it is the least I can do to thank you).

P.P.S. If you need more input, ask; I hope I will have an answer.


(because they have about 80 pieces of new sata 1-2TB in stock, preferably storage with SATA support)

1) SAS or FC? - it all depends on the tasks.

Do not discount iSCSI and NFS either. If latency is a very important metric (for example, for a VDI infrastructure), choose FC. If these are just servers without super-critical latency requirements, go with iSCSI / NFS (depending on the bandwidth you need).

2) Which storage model to choose (I have been out of this topic lately; I was working on somewhat different things)

Here you have to start from the load and your preferences. Sizing comes first. As for the vendor, there are as many opinions as there are people. I like HP; someone else likes NetApp.

I have 3 HP storage systems, from mid-range to enterprise level. When a disk was replaced for me within 12 hours, I was glad :). Easy setup, high performance.
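To make "sizing comes first" a little more concrete, here is a rough sketch of how much usable space the 80 SATA drives from the question might give under common RAID layouts. The function name `usable_tb` and the RAID-group width of 10 drives are assumptions made for illustration; hot spares, filesystem overhead, and vendor-specific limits are ignored, so treat the numbers as an upper bound rather than a quote.

```python
def usable_tb(drives: int, drive_tb: float, level: str, group_size: int = 10) -> float:
    """Rough usable capacity in TB, ignoring hot spares and filesystem overhead.

    group_size is a hypothetical RAID-group width, not a vendor recommendation.
    """
    if level == "raid10":
        # Mirroring: half of the drives hold copies.
        return (drives // 2) * drive_tb
    parity_drives = {"raid5": 1, "raid6": 2}[level]
    groups = drives // group_size  # leftover drives are simply not counted
    return groups * (group_size - parity_drives) * drive_tb


# The 80 SATA drives of 1-2 TB from the question, at both ends of the size range.
for size_tb in (1, 2):
    for level in ("raid10", "raid5", "raid6"):
        print(f"{size_tb} TB drives, {level}: {usable_tb(80, size_tb, level)} TB usable")
```

Capacity is only half of sizing; the performance side (IOPS and latency per application) still has to come from measuring the real load.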

3) which switch model to take for this case (they will take 2 pieces)

Here again it depends on the speed you need; I doubt that you need 10G. If you build access to the storage system over the LAN, you will manage with 2 switches; if over FC, then for fault tolerance done properly you need 2 FC + 2 SW.

Since the infrastructure is actually being built from scratch, be sure to look in the direction of virtualization.

The answers are still at a macro level; if there are more detailed questions, I will answer more precisely, since I have a lot of experience.

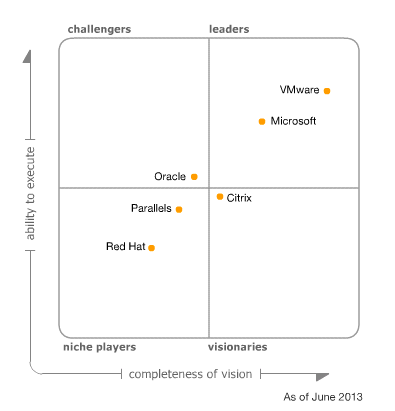

Between VMware and Citrix, definitely the former. Citrix never managed to get its hypervisor right and handed it over to open source.

Moreover, the latest Gartner Magic Quadrant for x86 Server Virtualization (2013) speaks for itself.

I use:

HP EVA P6000

HP EVA P6300

HP P2000 G3 MSA DC

We use storage for the virtualization platform.

Configuration 1: 3 x HP DL360 G7 with ESX, connected via SAS to a dual-controller MSA P2000 G3. Connections are duplicated for fault tolerance and increased throughput. There used to be a SAS switch; we dropped it because the latency was too high.

There are 12-17 VMs on each node; no degradation of disk subsystem performance has been noticed so far.

Configuration 2: 8 x HP BL660c Gen8 connected via SAS to a dual-controller NetApp 4020. There are only 8 VMs on the shelf, so utilization is minimal. However, compared to the native file system of the P2000, WAFL gives more freedom for LUN scaling, as well as access over multiple protocols (in addition to SAS, you can use FC, CIFS, and NFS, even simultaneously), plus NDMP support out of the box. And one more subjective impression: it somehow works faster, either because of optimization for ESX, or it only seems so to me because of the low load on the cluster.

In general, both have the right to exist, but FC is extremely expensive, and the performance in the end is the same as with SAS. FC only makes sense if access to the shelf is needed from a distance greater than a SAS cable allows (8 meters, I think). 10G is expensive and is needed for latency-critical systems that require high-bandwidth infrastructure.

For corporate purposes, I think 8 gigabits over LAN and 12 over SAS is enough. ESX handles NIC teaming on the LAN well, with automatic balancing (we put all the ports of each server into one group and enjoy). The shelf has 2 x 6G links, which in addition to redundancy gives up to 12 gigabits to the shelf as a bonus.
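The arithmetic behind those figures is simple enough to check. This is only a sketch: the helper names are made up for illustration, the "8 gigabits over LAN" is assumed to mean eight teamed 1 GbE ports, and encoding and protocol overhead are ignored, so real throughput will be lower.

```python
def aggregate_gbit(links: int, gbit_per_link: float) -> float:
    """Upper bound on the combined throughput of identical parallel links, in Gbit/s."""
    return links * gbit_per_link


def gbit_to_mbyte_per_s(gbit: float) -> float:
    """Convert Gbit/s to MB/s in decimal units, ignoring encoding and protocol overhead."""
    return gbit * 1000 / 8


sas_total = aggregate_gbit(2, 6)  # the two 6G SAS links to the shelf
lan_total = aggregate_gbit(8, 1)  # assumption: eight teamed 1 GbE ports

print(f"SAS: {sas_total} Gbit/s, about {gbit_to_mbyte_per_s(sas_total):.0f} MB/s at best")
print(f"LAN: {lan_total} Gbit/s, about {gbit_to_mbyte_per_s(lan_total):.0f} MB/s at best")
```

Keep in mind that teaming balances traffic across links; a single stream usually still goes over one link.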

Something like this…

I'm afraid you won't be able to put ordinary "consumer" SATA HDDs into a proper storage system.

I would advise looking at the Dell EqualLogic PS4110 or PS6110, working over iSCSI, and buying a pair of switches with 10G support. EqualLogic integrates with VMware "like a native". VMware, however, is expensive.

As 0000168 rightly points out, let's define the tasks first. What kind of applications will run, and what are the requirements for storage capacity and performance?

Well, since we are already on Habr, I recommend looking at the storage vendors already present here; in particular, I have written a lot here about the technologies NetApp uses (for example, here), and HP regularly publishes posts on this topic.

EMC, unfortunately, hasn't joined us, and neither has Dell (there is a blog, but they don't write about storage systems); otherwise the entire leading group would be assembled :)

In the absence of clear storage requirements, it is better to look at a system with tiering and inexpensive expansion. For example, the entry-level HP 3PAR models (especially since you have already bought servers from HP).

NetApp looks tempting with deduplication and tight integration with VMware, but the price of expansion shelves can make a customer faint. Still, you need to compare specific quotes and do the math.

From my own experience (we have approximately the same tasks):

1. Space runs out first; deduplication would help here. Without it, we solve this by archiving old test environments.

2. When we run into performance limits, we add disks and allocate separate arrays to spread the load.
