Answer the question

In order to leave comments, you need to log in

Parsing in python. How to fix pagination?

Hello! There is such a program that parses products by reference:

import requests

import csv

from bs4 import BeautifulSoup as bs

headers = {'accept': '*/*', 'user-agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64; rv:72.0) Gecko/20100101 Firefox/72.0'}

MAIN_URL = 'https://www.yoox.com' # для формирования полной ссылки

base_url = 'https://www.yoox.com/ru/%D0%B4%D0%BB%D1%8F%20%D0%BC%D1%83%D0%B6%D1%87%D0%B8%D0%BD/%D0%BE%D0%B4%D0%B5%D0%B6%D0%B4%D0%B0/shoponline#/dept=clothingmen&gender=U&page=1&season=X'

def yoox_parse(base_url, headers):

session = requests.Session()

request = session.get(base_url, headers=headers)

clothes = []

urls = []

urls.append(base_url)

if request.status_code == 200:

soup = bs(request.content, 'html.parser')

try:

pagination = soup.find_all('li', attrs={'class': 'text-light'})

count = int(pagination[-1].text)

for i in range(1,count):

url = f'https://www.yoox.com/ru/%D0%B4%D0%BB%D1%8F%20%D0%BC%D1%83%D0%B6%D1%87%D0%B8%D0%BD/%D0%BE%D0%B4%D0%B5%D0%B6%D0%B4%D0%B0/shoponline#/dept=clothingmen&gender=U&page={i}&season=X'

if url not in urls:

urls.append(url)

except:

pass

for url in urls:

request = session.get(url, headers=headers)

soup = bs(request.content, 'html.parser')

divs = soup.find_all('div', attrs={'class': 'col-8-24'})

for div in divs:

brand = div.find('div', attrs={'class': 'brand font-bold text-uppercase'})

group = div.find('div', attrs={'class': 'microcategory font-sans'})

old_price = div.find('span', attrs={'class': 'oldprice text-linethrough text-light'})

new_price = div.find('span', attrs={'class': 'newprice font-bold'})

price = div.find('span', attrs={'class': 'fullprice font-bold'})

sizes = div.find_all('span', attrs={'class': 'aSize'})

href = div.find('a', attrs={'class': 'itemlink'})

art = div.find('div', attrs={'class': ''})

if brand and group and new_price: # new_price выводит только товары со скидкой

clothes.append({

'art': art,

'href': MAIN_URL + href.get('href'),

'sizes': [size.get_text() for size in sizes],

'brand': brand.get_text(),

'group': group.get_text(strip=True),

'old_price': old_price.get_text().replace(' ', '').replace('руб', '') if old_price else None,

'new_price': new_price.get_text().replace(' ', '').replace('руб', '') if new_price else None,

'price': price.get_text().replace(' ', '').replace('руб', '') if price else None,

})

print(len(clothes))

else:

print('ERROR or Done')

return clothes

def files_writer(clothes):

with open('parsed_yoox_man_clothes.csv', 'w', newline='') as file:

a_pen = csv.writer(file)

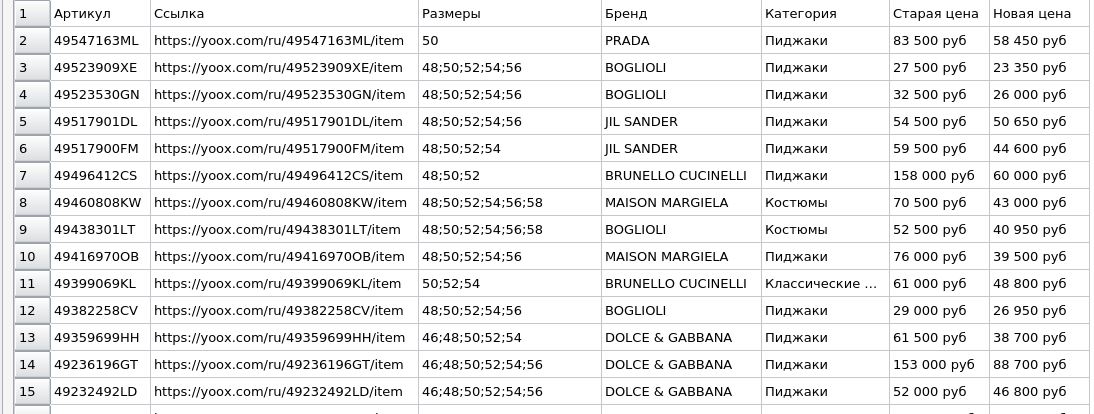

a_pen.writerow(('Артикул', 'Ссылка', 'Размер', 'Марка', 'Категория', 'Старая цена', 'Новая цена', 'Цена'))

for clothe in clothes:

a_pen.writerow((clothe['art'], clothe['href'], clothe['sizes'], clothe['brand'], clothe['group'], clothe['old_price'], clothe['new_price'], clothe['price']))

clothes = yoox_parse(base_url, headers)

files_writer(clothes)Answer the question

In order to leave comments, you need to log in

You are using the wrong link. You need this - "www.yoox.com/RU/shoponline?dept=clothingmen&gender=U&page={x}&season=X&clientabt=SmsMultiChannel_ON%2CSrRecommendations_ON%2CNewDelivery_ON%2CRecentlyViewed_ON%2CmyooxNew_ON

" . Only results with discounts are written to the file!

Here is the working code, maybe it will be useful for someone:

import requests

from bs4 import BeautifulSoup

from lxml import html

import csv

url = 'https://www.yoox.com/ru/%D0%B4%D0%BB%D1%8F%20%D0%BC%D1%83%D0%B6%D1%87%D0%B8%D0%BD/%D0%BE%D0%B4%D0%B5%D0%B6%D0%B4%D0%B0/shoponline#/dept=clothingmen&gender=U&page=1&season=X'

headers = {'user-Agent': 'Mozilla/5.0 (X11; Ubuntu; Linux x86_64; rv:72.0) Gecko/20100101 Firefox/72.0',

'Accept':'text/html,application/xhtml+xml,application/xml;q=0.9,image/webp,*/*;q=0.8'

}

def getClothes(url,page_id):

clothes = []

respones = requests.get(url,headers=headers)

soup = BeautifulSoup(respones.text,'lxml')

mainContent = soup.find('div',id=f'srpage{page_id}')

products = mainContent.find_all('div',class_='col-8-24')

for product in products:

brand = product.find('div',class_='itemContainer')['data-brand'] # Бренд

cod10 = product.find('div',class_='itemContainer')['data-current-cod10'] # Для формирования ссылки yoox.com/ru/{cod10}/item

category = product.find('div',class_='itemContainer')['data-category'] # Категория

oldPrice = product.find('span',class_='oldprice text-linethrough text-light') # Старая цена (может не быть)

newPrice = product.find('span',class_='newprice font-bold') # Новая цена (может не быть)

if oldPrice is not None:

# Данный код выполняется только, если на товар есть скидка

sizes = product.find_all('div',class_='size text-light')

str_sizes = ''

for x in sizes:

str_sizes += x.text.strip().replace('\n',';')

clothes.append({'art':cod10,

'brand':brand,

'category':category,

'url':f'https://yoox.com/ru/{cod10}/item',

'oldPrice':oldPrice.text,

'newPrice':newPrice.text,

'sizes':str_sizes

})

return clothes

def getLastPage(url):

respones = requests.get(url,headers=headers)

soup = BeautifulSoup(respones.text,'lxml')

id = soup.find_all('li', class_ = 'text-light')[2]

return int(id.a['data-total-page']) + 1

def writeCsvHeader():

with open('yoox_man_clothes.csv', 'a', newline='') as file:

a_pen = csv.writer(file)

a_pen.writerow(('Артикул', 'Ссылка', 'Размеры', 'Бренд', 'Категория', 'Старая цена', 'Новая цена'))

def files_writer(clothes):

with open('yoox_man_clothes.csv', 'a', newline='') as file:

a_pen = csv.writer(file)

for clothe in clothes:

a_pen.writerow((clothe['art'], clothe['url'], clothe['sizes'], clothe['brand'], clothe['category'], clothe['oldPrice'], clothe['newPrice']))

if __name__ == '__main__':

writeCsvHeader() # Запись заголовка в csv файл

lastPage = getLastPage(url) # Получаем последнею страницу

for x in range(1,lastPage): # Вместо 1 и lastPage можно указать диапазон страниц. Не начинайте парсить с нулевой страницы!

print(f'Скачавается: {x} из {lastPage-1}')

url = f'https://www.yoox.com/RU/shoponline?dept=clothingmen&gender=U&page={x}&season=X&clientabt=SmsMultiChannel_ON%2CSrRecommendations_ON%2CNewDelivery_ON%2CRecentlyViewed_ON%2CmyooxNew_ON'

files_writer(getClothes(url,x)) # Парсим и одновременно заносим данные в csv

Didn't find what you were looking for?

Ask your questionAsk a Question

731 491 924 answers to any question