Answer the question

In order to leave comments, you need to log in

Optimal way to sort points in 2D by table?

Good afternoon!

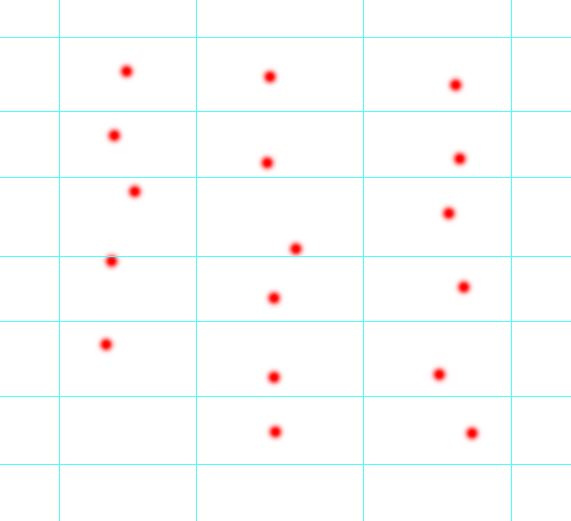

There are many coordinates of points in 2D space that vaguely resemble a table/grid.

Example: It is

necessary, roughly speaking, to distribute the points over the "table":

The number of rows and columns is not known in advance.

Now I do this: I sort by the X coordinate, run through the resulting array - as soon as the distance between neighboring ones is too large (more than the expected error), I consider that a new column begins.

Then in each "column" I sort by Y.

But in my case, the problem is that it is not known in advance how much at least approximately should be between columns, rows, their number. Therefore, sometimes, it turns out that the point is in another column.

Can you please tell me a more correct and better algorithm for this?

Answer the question

In order to leave comments, you need to log in

For the clustering problem , there are ready-made solutions .

In the form of libraries , including . Grouping

algorithms will probably even suit you .

We take all Y-ki of all points and find the minimum and maximum distance.

We take the average, and align the grid of lines in height so that the points are as close as possible to the center of the lines.

We do the same with columns.

I would suggest 2 steps.

1) build a histogram for all X,Y coordinates separately. Select local minima. These will be the table lines. But not exactly.

2) defects in cells that have 2 points each can be corrected by randomly moving the lines. But to make randomness more efficient, I would suggest a genetic algorithm.

Didn't find what you were looking for?

Ask your questionAsk a Question

731 491 924 answers to any question