
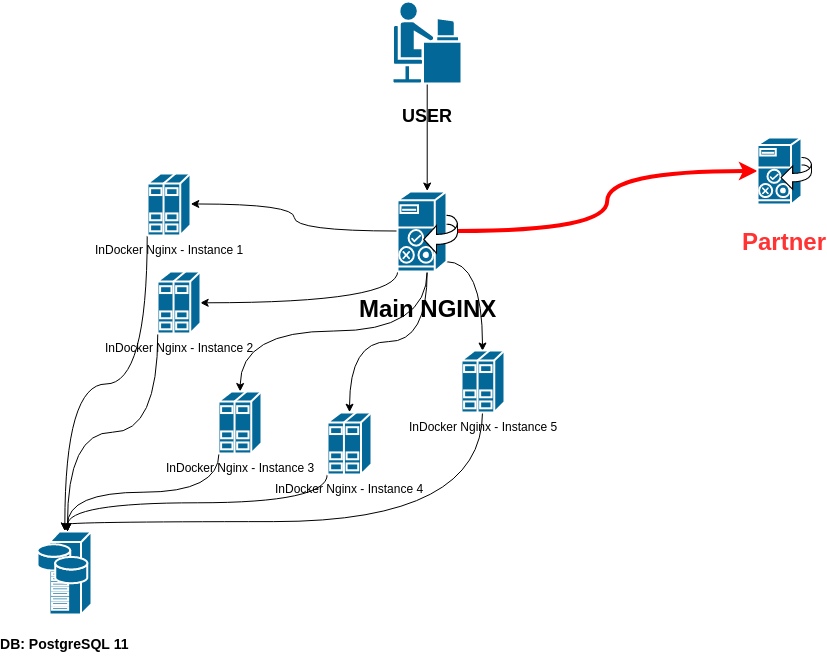

Nginx as reverse proxy: 1% of requests timed out (504). What can be done?

We have:

Problem:

```nginx
user www-data;
worker_processes 16;
worker_priority -10;
pid /run/nginx.pid;
worker_rlimit_nofile 200000;

events {
    worker_connections 2048;
    use epoll;
}

http {
    sendfile on;
    tcp_nopush off;
    tcp_nodelay on;
    keepalive_timeout 65;
    types_hash_max_size 2048;
    # server_tokens off;
    # server_names_hash_bucket_size 64;
    # server_name_in_redirect off;

    include /etc/nginx/mime.types;
    default_type application/octet-stream;

    ssl_protocols TLSv1 TLSv1.1 TLSv1.2; # Dropping SSLv3, ref: POODLE
    ssl_prefer_server_ciphers on;

    access_log /var/log/nginx/access.log;
    error_log /var/log/nginx/error.log;

    gzip on;
    gzip_disable "msie6";
    # gzip_vary on;
    # gzip_proxied any;
    # gzip_comp_level 6;
    # gzip_buffers 16 8k;
    # gzip_http_version 1.1;
    # gzip_types text/plain text/css application/json application/javascript text/xml application/xml application/xml+rss text/javascript;

    upstream backend {
        least_conn;
        ip_hash;
        server localhost:8082;
        server 37.48.123.321:8082;
        # other servers...
    }

    upstream partner_api {
        server WW.XX.YY.ZZ;
    }

    proxy_cache_path /etc/nginx/conf.d/data/cache/line levels=1:2 keys_zone=line_cache:150m max_size=1g inactive=60m;
    proxy_cache_path /etc/nginx/conf.d/data/cache/live levels=1:2 keys_zone=live_cache:150m max_size=1g inactive=60m;

    include /etc/nginx/sites-enabled/*;
}
```

```nginx
server {
    listen 443 ssl http2;
    server_name domain.com www.domain.com *.domain.com;

    ssl_certificate /etc/letsencrypt/live/domain.com/fullchain.pem;
    ssl_certificate_key /etc/letsencrypt/live/domain.com/privkey.pem;
    ssl_trusted_certificate /etc/letsencrypt/live/domain.com/chain.pem;
    ssl_stapling on;
    ssl_stapling_verify on;
    resolver 127.0.0.1 8.8.8.8;

    add_header Strict-Transport-Security "max-age=31536000";

    include /etc/nginx/conf.d/reverse_porxy.conf;

    location / {
        proxy_pass http://backend;
        proxy_http_version 1.1;
        proxy_set_header X-Real-IP $remote_addr;
        proxy_set_header Upgrade $http_upgrade;
        proxy_set_header Connection "upgrade";
        proxy_set_header Host $host;
        error_page 500 502 503 504 /50x.html;
    }
}

server {
    listen 80;
    server_name domain.com www.domain.com;

    location / {
        return 301 https://$host$request_uri;
    }
}
```

```nginx
location ~ ^/api/remote/(.+) {
    add_header 'Access-Control-Allow-Origin' '*';
    add_header 'Access-Control-Allow-Credentials' 'true';
    add_header 'Access-Control-Allow-Methods' 'GET, POST, OPTIONS';
    add_header 'Access-Control-Allow-Headers' 'DNT,X-CustomHeader,Keep-Alive,User-Agent,X-Requested-With,If-Modified-Since,Cache-Control,Content-Type';
    include /etc/nginx/conf.d/reverse_proxy_settings.conf;
    proxy_pass http://partner_api/WebApiG/WebServices/BCService.asmx/$1;
}

location ~ ^/api/bs2/remote/api/line/(.+) {
    include /etc/nginx/conf.d/reverse_proxy_settings.conf;
    proxy_cache_valid any 2m;
    proxy_pass http://partner_api/WebApiG/api/line/$1;
    proxy_cache line_cache;
    proxy_cache_use_stale error timeout http_500 http_502 http_503 http_504;
}

location ~ ^/api/bs2/remote/api/live/(.+) {
    include /etc/nginx/conf.d/reverse_proxy_settings.conf;
    proxy_pass http://partner_api/WebApiG/api/live/$1;
    proxy_cache live_cache;
    proxy_cache_use_stale error timeout http_500 http_502 http_503 http_504;
}

# etc...
```

```nginx
proxy_buffering on;
proxy_ignore_headers "Cache-Control" "Expires";
expires max;
proxy_buffers 32 4m;
proxy_busy_buffers_size 25m;
proxy_max_temp_file_size 0;
proxy_cache_methods GET;
proxy_cache_valid any 3s;
proxy_cache_revalidate off;
proxy_set_header Host "PARTNER_IP";
proxy_set_header Origin "";
proxy_set_header Referer "";
proxy_set_header Cookie '.TOKEN1=$cookie__TOKEN1; ASP.NET_SessionId=$cookie_ASP_NET_SessionId;';
proxy_hide_header 'Set-Cookie';
proxy_http_version 1.1;
```
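One more thing worth checking in the question's config: the `backend` upstream lists both `least_conn` and `ip_hash`. These are alternative load-balancing methods and an upstream group uses only one of them; when a second one is given, nginx logs a "load balancing method redefined" warning. A minimal sketch that keeps just `ip_hash`, assuming per-client stickiness is what is wanted (if it is not, keep `least_conn` instead). Only the first server from the question is repeated here; the rest would be listed as before.

```nginx
upstream backend {
    # Use ONE balancing method per upstream group.
    # ip_hash: requests from the same client IP go to the same server (sticky).
    # least_conn (alternative): new requests go to the server with the fewest
    # active connections.
    ip_hash;

    server localhost:8082;
    # other servers as in the question...
}
```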


The solution was to increase the number of nginx workers and connections:

```nginx
user www-data;
worker_processes 32;
worker_priority -10;
pid /run/nginx.pid;
worker_rlimit_nofile 200000;

events {
    worker_connections 4096;
    use epoll;
}

# ...

upstream partner_api {
    server WW.XX.YY.ZZ;
    keepalive 512;
}
```

```nginx
proxy_http_version 1.1;
```

Without this directive, keep-alive connection support will not turn on. You also need to set `proxy_set_header Connection "";`.

Increase the timeout, and then investigate calmly, without requests being dropped. Is the network subsystem on Ubuntu properly configured and tuned?
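Returning to the accepted fix (more workers and connections plus upstream keep-alive), here is a consolidated sketch. The capacity figures in the comments are plain arithmetic on the question's and the answer's values: `worker_connections` counts connections to proxied servers as well as client connections, so each proxied request uses roughly two. The `server` block is trimmed to one location copied from the question's config, with only the directives relevant to keep-alive shown; everything else is assumed to stay as before.

```nginx
# Rough connection capacity = worker_processes x worker_connections:
#   before: 16 x 2048 = 32768 connections, about 16384 concurrent proxied requests
#   after:  32 x 4096 = 131072 connections, about 65536 concurrent proxied requests
worker_processes 32;
worker_rlimit_nofile 200000;   # per-worker file descriptor limit, above worker_connections

events {
    worker_connections 4096;
    use epoll;
}

http {
    upstream partner_api {
        server WW.XX.YY.ZZ;
        # Maximum number of IDLE keep-alive connections cached by EACH worker;
        # it does not limit the total number of upstream connections.
        keepalive 512;
    }

    server {
        # listen, server_name, ssl_* and other locations as in domain.com.conf ...

        location ~ ^/api/remote/(.+) {
            proxy_pass http://partner_api/WebApiG/WebServices/BCService.asmx/$1;
            # Both are needed for connections to partner_api to be reused:
            proxy_http_version 1.1;
            proxy_set_header Connection "";
        }
    }
}
```

With `keepalive 512` and 32 workers, up to 32 × 512 = 16384 idle connections to the partner can be kept open, which is worth checking against what the partner's server accepts.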

You need to reduce buffering in reverse_porxy.conf or increase the read timeout in domain.com.conf (60 seconds by default). By default nginx receives all the data from the upstream and only then passes it on. I suspect that under load it does not manage to receive the data in time, and the read timeout fires because not a single byte arrived within it. When clients connect directly, there is no nginx and therefore no read timeout, so everything works.

https://nginx.org/ru/docs/http/ngx_http_proxy_modu...
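Both options in this answer correspond to `ngx_http_proxy_module` directives. `proxy_read_timeout` limits the time between two successive reads from the upstream (not the whole response) and defaults to 60s; `proxy_buffering off` makes nginx pass the response to the client synchronously as it is received. A minimal sketch under two assumptions: the 120s value is illustrative, and buffering is disabled only on the uncached `/api/remote/` location, since the `line`/`live` locations rely on `proxy_cache`. Because the shared settings file already contains `proxy_buffering on;`, the second location sets its directives directly instead of including that file: repeating `proxy_buffering` in one location is a configuration error.

```nginx
# Option 1: give the partner API more time between reads (default 60s).
location ~ ^/api/bs2/remote/api/line/(.+) {
    include /etc/nginx/conf.d/reverse_proxy_settings.conf;
    proxy_read_timeout 120s;   # illustrative value
    proxy_pass http://partner_api/WebApiG/api/line/$1;
    proxy_cache line_cache;
    proxy_cache_use_stale error timeout http_500 http_502 http_503 http_504;
}

# Option 2: pass uncached responses through as they arrive instead of buffering.
# The shared settings file is NOT included here, because it already sets
# proxy_buffering; the remaining header/cookie rewriting from that file would
# have to be copied in as well (only a subset is shown).
location ~ ^/api/remote/(.+) {
    proxy_buffering off;
    proxy_http_version 1.1;
    proxy_set_header Host "PARTNER_IP";
    proxy_pass http://partner_api/WebApiG/WebServices/BCService.asmx/$1;
}
```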

In the domain's config, increase the timeout, which is fairly short by default. After that you can investigate why requests take so long to process, or optimize further if necessary.

Add this to the `location /` block in /etc/nginx/sites-enabled/domain.com.conf:

```nginx
proxy_read_timeout 600; # wait up to 600 s for processing, for example
```
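Because `location /` forwards requests with `proxy_pass`, the timeouts that apply to it are the `proxy_*` ones (the `fastcgi_*` directives only affect `fastcgi_pass`). A sketch of the question's `location /` block with all three proxy timeouts set explicitly; the 5s connect timeout is an illustrative assumption, and the other values are the defaults apart from the 600s read timeout suggested in this answer.

```nginx
location / {
    proxy_pass http://backend;
    proxy_http_version 1.1;
    proxy_set_header X-Real-IP $remote_addr;
    proxy_set_header Upgrade $http_upgrade;
    proxy_set_header Connection "upgrade";
    proxy_set_header Host $host;

    proxy_connect_timeout 5s;   # establishing the connection to the backend (default 60s)
    proxy_send_timeout 60s;     # between two successive writes to the backend (default 60s)
    proxy_read_timeout 600s;    # between two successive reads from the backend (default 60s)

    error_page 500 502 503 504 /50x.html;
}
```

A long read timeout hides slow responses rather than fixing them, which is why the answer suggests investigating the slow requests afterwards.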
