
How do I recover a ZFS RAIDZ1 pool?

Setup:

- Proxmox 6.1
- RAIDZ1 + SSD cache + SSD log
- 4 × 2 TB WDC_WD2003FYYS disks (on average, each has been running for more than 5 years)
- NVMe Samsung_SSD_970_PRO_512GB

On Sunday evening the server hung.

A soft reboot did not help, so I did a hard reset.

After that, it would not boot.

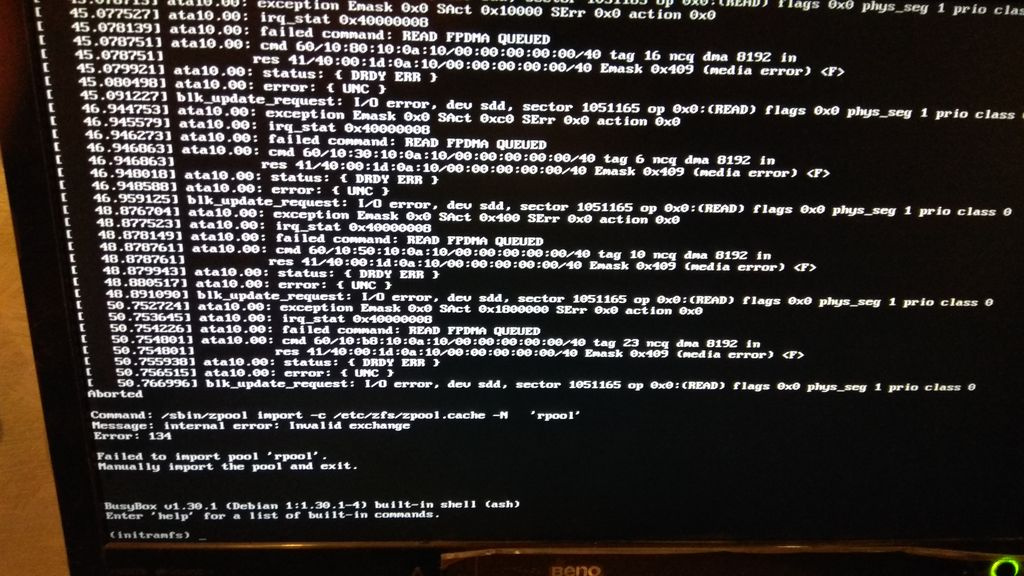

I connected a monitor and saw that a sector could not be read during boot.

I booted from a USB flash drive and ran Victoria on the problem disk.

SMART reported 1 unstable (pending) sector.

I tested it and had it reallocated (only that 1 sector).

Having not finished the full disk check, I tried to boot into Proxmox again . I'm

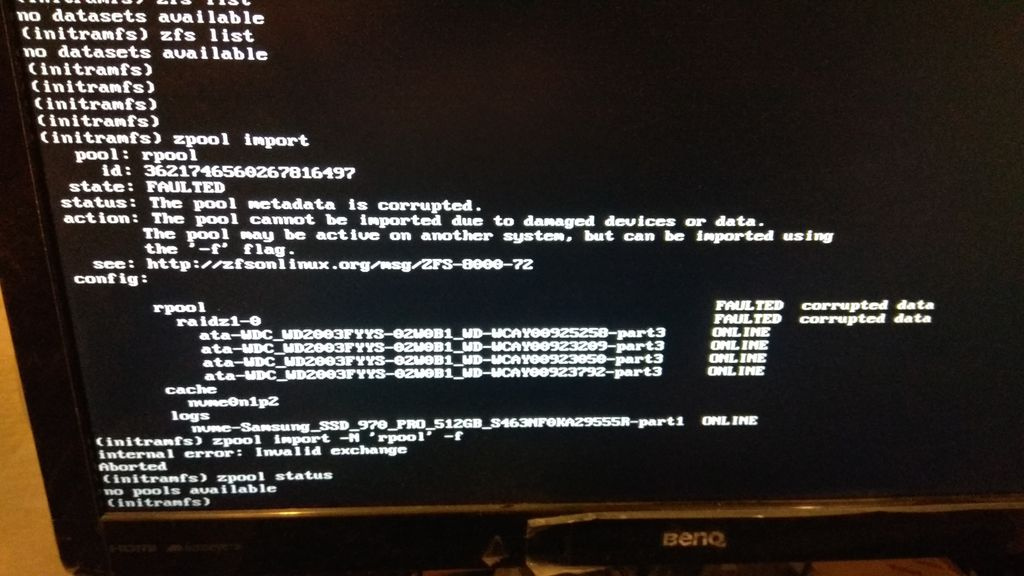

attaching screenshots below.

I tried to import the pool in several different ways; none of them worked.

A full backup of the disks as images via ddrescue is now nearly complete. Here is what it showed:

/dev/disk/by-id/ata-WDC_WD2003FYYS-02W0B1_WD-WCAY00923792-part3: only 99.9% of this disk could be copied. It has hundreds of unreadable sectors.
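For anyone imaging failing disks in the same way, the usual GNU ddrescue approach is two passes sharing one mapfile: first copy everything that reads cleanly, then go back and retry only the areas marked bad. The sketch below assumes GNU ddrescue; the function name `rescue_partition`, the `DRY_RUN` switch and the example paths are hypothetical, and the image must be written to a different disk than the one being rescued.

```sh
#!/usr/bin/env bash
# Sketch: image one failing partition with GNU ddrescue in two passes.
# rescue_partition and DRY_RUN are hypothetical names for this example.
# With DRY_RUN set, the commands are printed instead of executed.

rescue_partition() {
  local src=$1 img=$2
  # The mapfile records which areas are still unread, so an
  # interrupted run can be resumed with the same command line.
  local map="${img%.bin}.map"

  # Pass 1: copy everything that reads cleanly; -n skips scraping
  # the bad areas, -d reads the source with direct I/O.
  ${DRY_RUN:+echo} ddrescue -d -n "$src" "$img" "$map"

  # Pass 2: return to the areas marked bad and retry them 3 times.
  ${DRY_RUN:+echo} ddrescue -d -r3 "$src" "$img" "$map"
}

# Example (hypothetical device and path):
#   rescue_partition /dev/sdX3 /opt/disk-part3.bin
```

Keeping the mapfile next to the image means the slow retry pass can be stopped and restarted without re-reading the sectors that were already recovered.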

As for the transaction groups (TXG):

- 3 disks have the same TXG (one of them is the failing disk).
- 1 disk has a different TXG (that disk itself reads without errors).
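One way to compare TXGs like this is to dump the ZFS labels of each vdev with `zdb -l` and keep only the pool-level `txg:` field (the `create_txg:` entries inside the vdev tree are a different value). A minimal sketch, assuming the images are attached as loop devices the way the question does with `losetup`; `label_txgs` and `show_txgs` are hypothetical helper names.

```sh
#!/usr/bin/env bash
# Sketch: print the TXG recorded in the ZFS labels of each vdev.
# label_txgs and show_txgs are hypothetical helpers for this example.

# Read `zdb -l` output on stdin and print each distinct label TXG.
# Only the exact "txg:" key matches; "create_txg:" lines are skipped.
label_txgs() {
  awk '$1 == "txg:" { print $2 }' | sort -nu
}

# Usage: show_txgs /dev/loop0 /dev/loop1 /dev/loop2 /dev/loop5
show_txgs() {
  local dev
  for dev in "$@"; do
    printf '%s: ' "$dev"
    # Labels on one device that disagree show up as several values.
    zdb -l "$dev" | label_txgs | paste -sd ' ' -
  done
}
```

Separating the parsing (`label_txgs`) from the device loop makes it easy to check the filter against saved `zdb -l` output before pointing it at the images.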

I created disk images and attached them as loop devices:

```sh
# Attach each ddrescue image as a loop device
losetup /dev/loop1 /opt/ata-WDC_WD2003FYYS-02W0B1_WD-WCAY00923209-part3.bin
losetup /dev/loop2 /opt/ata-WDC_WD2003FYYS-02W0B1_WD-WCAY00925258-part3.bin
# loop5: the disk with hundreds of unreadable sectors
losetup /dev/loop5 /opt/ata-WDC_WD2003FYYS-02W0B1_WD-WCAY00923792-part3.bin
losetup /dev/loop0 /opt/ata-WDC_WD2003FYYS-02W0B1_WD-WCAY00923050-part3.bin
# NVMe partitions (SSD cache and log)
losetup /dev/loop3 /opt/nvme-Samsung_SSD_970_PRO_512GB_S463NF0KA29555R-part1.bin
losetup /dev/loop4 /opt/nvme-Samsung_SSD_970_PRO_512GB_S463NF0KA29555R-part2.bin

# Forced, read-only import, rewinding (-F -X) to a specific TXG (-T)
zpool import -fF -XT 922580 -o readonly=on rpool
zpool import -fF -XT 962577 -o readonly=on rpool
```

Answers:

First, you should have tried rolling back transactions following this guide: https://docs.oracle.com/cd/E19253-01/819-5461/gbct...
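On OpenZFS (as used by Proxmox), that rollback is done at import time with `zpool import -F`; adding `-n` only reports whether discarding the last few transactions would make the pool importable, without changing anything. A sketch, assuming the pool name `rpool` from the question and a read-only import so nothing is written to the damaged disks; `rewind_check`, `rewind_import` and `DRY_RUN` are hypothetical names.

```sh
#!/usr/bin/env bash
# Sketch: check, then perform, a rewind (rollback) import of a pool.
# rewind_check, rewind_import and DRY_RUN are hypothetical; with
# DRY_RUN set, the zpool commands are printed instead of executed.
# -f is used because the pool was not cleanly exported before the crash.

rewind_check() {
  # -F -n: report whether a rewind would make the pool importable;
  # nothing is imported or modified.
  ${DRY_RUN:+echo} zpool import -f -F -n "${1:-rpool}"
}

rewind_import() {
  # Perform the rewind with a read-only import, so the pool's
  # on-disk state is not modified.
  ${DRY_RUN:+echo} zpool import -f -F -o readonly=on "${1:-rpool}"
}
```

The two steps are kept as separate functions on purpose: the dry-run report should be read before deciding to run the real import.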

Also look at similar cases: https://www.ixsystems.com/community/threads/pool-m...

At worst, remove the problem disk; you would then have a DEGRADED pool (RAIDZ1 can run with one disk missing).
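With disk images, removing the problem disk does not require touching hardware: detach the loop device backed by the failing disk's image and import the rest read-only. A sketch, assuming `/dev/loop5` is the image of the disk with unreadable sectors (as in the question's `losetup` list); `import_without` and `DRY_RUN` are hypothetical. Whether the pool actually comes up DEGRADED depends on the remaining members being consistent, which the differing TXG on one disk may prevent.

```sh
#!/usr/bin/env bash
# Sketch: import a RAIDZ1 pool with one member deliberately missing.
# import_without and DRY_RUN are hypothetical names for this example.

import_without() {
  local bad_dev=$1 pool=${2:-rpool}
  # Detach the loop device that backs the failing disk's image.
  ${DRY_RUN:+echo} losetup -d "$bad_dev"
  # Read-only import; RAIDZ1 reconstructs data from parity when one
  # member is absent, if the remaining members agree with each other.
  ${DRY_RUN:+echo} zpool import -f -o readonly=on "$pool"
  # Show the pool state and any files with permanent errors.
  ${DRY_RUN:+echo} zpool status -v "$pool"
}

# Example: import_without /dev/loop5 rpool
```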

You need to understand what pool metadata is and how it is stored, what a scrub does in ZFS, and why you should use ECC memory. You also need to understand that reallocating sectors is unlikely to solve the problem, because the data in them is already corrupted.
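For reference, a scrub reads every allocated block in the pool, verifies it against its checksum and repairs it from redundancy where possible; `zpool status -v` then lists any files with unrecoverable errors. A minimal sketch for a healthy, imported pool; `scrub_pool`, `scrub_report` and `DRY_RUN` are hypothetical names.

```sh
#!/usr/bin/env bash
# Sketch: start a scrub and later report its result.
# scrub_pool, scrub_report and DRY_RUN are hypothetical names.

scrub_pool() {
  # Starts the scrub in the background and returns immediately;
  # progress is visible in `zpool status`.
  ${DRY_RUN:+echo} zpool scrub "${1:-rpool}"
}

scrub_report() {
  # -v lists files affected by permanent (unrepairable) errors.
  ${DRY_RUN:+echo} zpool status -v "${1:-rpool}"
}
```

Running this regularly is what catches silently corrupted blocks while the other disks still hold enough redundancy to repair them.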

And then take it to RLab (a data recovery lab).

And set up backups.
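One common way to set up backups on ZFS itself is a recursive snapshot followed by `zfs send -R` into a pool on separate disks. A sketch, assuming a second pool named `backup` exists (hypothetical) and `rpool` is the source; `snap_name`, `backup_pool` and `DRY_RUN` are hypothetical as well. This performs a full send every time; incremental sends (`zfs send -R -i`) are the usual next step.

```sh
#!/usr/bin/env bash
# Sketch: snapshot a pool recursively and replicate it to another pool.
# snap_name, backup_pool, DRY_RUN and the "backup" pool are hypothetical.

# Build a dated snapshot name, e.g. rpool@backup-2020-03-01.
snap_name() {
  printf '%s@backup-%s\n' "$1" "${2:-$(date +%F)}"
}

backup_pool() {
  local src=${1:-rpool} dst=${2:-backup/rpool}
  local snap
  snap=$(snap_name "$src")
  if [ -n "${DRY_RUN:-}" ]; then
    # Print the pipeline instead of running it.
    echo "zfs snapshot -r $snap"
    echo "zfs send -R $snap | zfs receive -u -F $dst"
    return 0
  fi
  zfs snapshot -r "$snap"
  # -R: replicate all descendant datasets with properties and snapshots.
  # -u: do not mount the received datasets; -F: roll back dst if needed.
  zfs send -R "$snap" | zfs receive -u -F "$dst"
}
```

The `-u` flag matters for a root pool: the replicated datasets carry mountpoints such as `/`, which should not be mounted over the running system.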

How could ZFS even get into such a state? Scrubbing is supposed to run on it regularly.

Try Klennet ZFS Recovery. It's not free, of course, but the price is quite affordable.

In trial mode, it scans the disks and shows everything it can find, including deleted files.

In my first case, a new pool had been created on top of the existing one; in the second, it was a damaged pool that would not mount.

Are there any recovery utilities?

This program "sees" my pool
