How to restart a task in grab:spider?

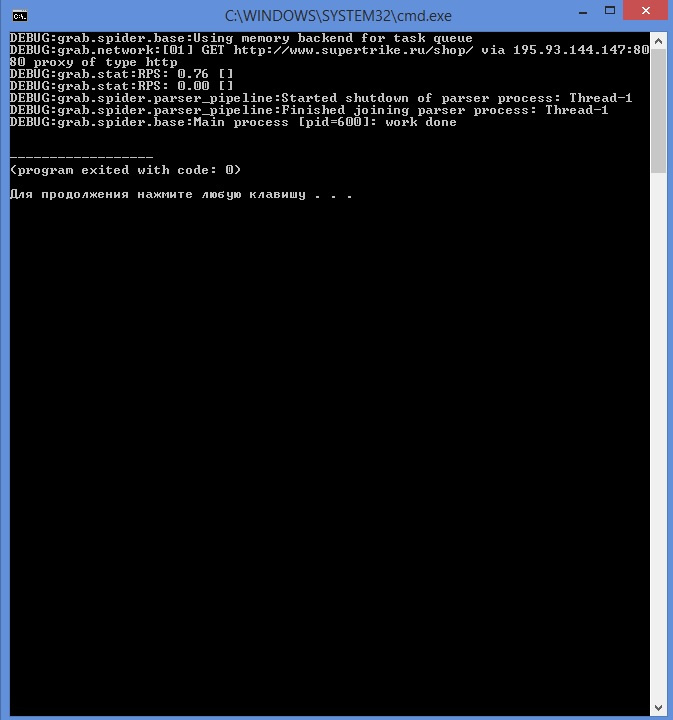

Sometimes, right after I start the spider, it apparently picks a bad proxy and receives the wrong page content. The response status is definitely 200, but the body itself is incorrect. Since I can detect this in code, I figured I could simply repeat the request. I just don't know how to do it. The code:

import csv
import re

from grab import Grab
from grab.spider import Spider, Task


class ExampleSpider(Spider):
    initial_urls = ['http://www.supertrike.ru/shop/']

    def create_grab_instance(self):
        g = Grab(
            connect_timeout=6,
            timeout=8
        )
        g.proxylist.load_url('http://127.0.0.1:7777/proxy.txt')
        return g

    # The prepare function is called once before the spider starts
    def prepare(self):
        self.result_file = csv.writer(open('result.txt', 'w'))

    def task_initial(self, grab, task):
        print('Collecting category links')
        cats = [a.text() for a in grab.doc.select('//ul[@class="menu vmLinkMenu"]//li//@href').selector_list][:-1]
        print('CATEGORIES: %s' % '\n'.join(cats))
        if not cats:
            Task('initials', url=self.initial_urls[0])  # My naive attempt to solve the problem, which for some reason did not work :(
        for cat_url in cats:
            print('-%s' % cat_url)
            yield Task('category', url='http://www.supertrike.ru%s?limitstart=0&limit=65535s' % cat_url)

    def task_category(self, grab, task):
        # print('Collecting product links')
        for prod_url in [a.text() for a in grab.doc.select('//div[@class="bp_product_details"]/a/@href').selector_list]:
            yield Task('product', url='http://www.supertrike.ru%s' % prod_url)

    def task_product(self, grab, task):
        ims = [a.text() for a in grab.doc.select("//div[@class='contentp left_top_box']//*//a/./img/../@href").selector_list]
        images = []
        # Remove duplicates (a workaround needed because the XPath is imperfect)
        for im in ims:
            if im not in images:
                images.append(im)
        # Turn relative image paths into absolute URLs
        i = 0
        while i < len(images):
            if 'supertrike.ru' not in images[i]:
                images[i] = 'http://supertrike.ru%s' % images[i]
            i += 1
        price = None
        try:
            price = grab.doc.select('//div[@class="product_price_box"]//span[@class="productPrice"]').text()
        except Exception:
            pass
        category = ''
        try:
            category = grab.doc.select('//div[@class="cbwrap"]//*//span[2]//a').text()
        except Exception:
            category = ''
        descr = grab.doc.select('//div[@class="contentp right_top_box"]/div')
        title = grab.doc.select('//h1')
        product = [
            task.url,  # Product link
            category,  # Category
            descr.text() if descr else '',  # Description
            title.text() if title else '',  # Title
            re.search('([ 0987654321]*)', price).group(0).replace(' ', '') if price else None,  # Price
            ','.join(images) if images else ''  # Images
        ]
        self.result_file.writerow(product)
        print('Saved %s' % product[1])


if __name__ == '__main__':
    bot = ExampleSpider(
        thread_number=2,
        network_try_limit=10
    )
    bot.run()
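
A likely reason the attempt in `task_initial` has no effect: the `Task` object is created but never yielded, so the spider never sees it, and its name `'initials'` does not match the `task_initial` handler (that would require `'initial'`). The sketch below illustrates the retry pattern using only the standard library, so it runs without Grab. Here `Task`, `run`, `flaky_fetch` and `MAX_TRIES` are hypothetical stand-ins for illustration, not Grab's own classes or settings. With Grab itself, the analogous step would presumably be to `yield` a copy of the current task (for example via `task.clone()`) from inside the handler, with some cap on the number of attempts.

```python
from collections import deque
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class Task:
    """Hypothetical stand-in for a spider task (not Grab's Task class)."""
    name: str
    url: str
    try_count: int = 0


MAX_TRIES = 3  # assumed cap, so a permanently broken page cannot loop forever


def task_initial(page, task):
    """Yield category tasks, or re-yield the same task if the page looks wrong."""
    cats = page.get("categories", [])
    if not cats:
        if task.try_count + 1 < MAX_TRIES:
            # The retry has to be yielded; merely constructing a Task does nothing.
            yield replace(task, try_count=task.try_count + 1)
        return
    for cat_url in cats:
        yield Task("category", url="http://www.supertrike.ru%s" % cat_url)


def run(fetch, first_task):
    """Tiny scheduler: pop a task, call its handler, queue whatever it yields."""
    queue = deque([first_task])
    collected = []
    while queue:
        task = queue.popleft()
        if task.name == "initial":
            queue.extend(task_initial(fetch(task), task))
        else:
            collected.append(task.url)
    return collected


def flaky_fetch(task):
    """Simulated fetcher: the first attempt returns a bad page, later ones a good page."""
    if task.try_count == 0:
        return {"categories": []}  # status 200, but the content is wrong
    return {"categories": ["/shop/a", "/shop/b"]}


urls = run(flaky_fetch, Task("initial", "http://www.supertrike.ru/shop/"))
print(urls)
```

The attempt counter matters: without it, a page that is wrong for reasons other than the proxy would be requested forever.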
