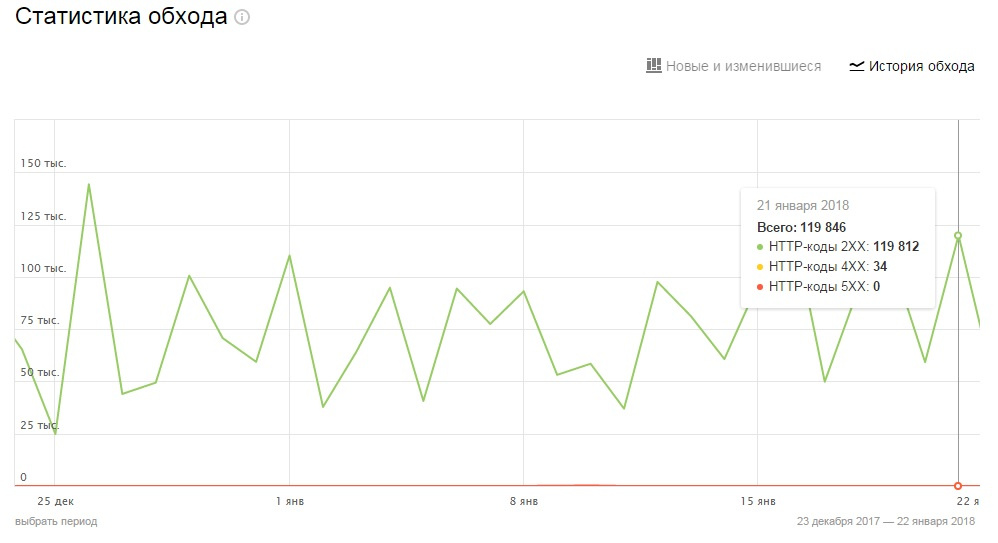

How to reduce the load on the server from search robots?

Hello everyone. I have a site hosted on elasticweb, where I pay for the resources it consumes.

YII2 NGINX

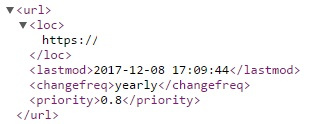

_ _ _ _ fixed, not as before date() !

It has now indexed the new sitemaps, and it is still crawling the pages heavily. Is there any way to make it crawl less?

https://yandex.com/support/search/robots/request-r...

In general, though, if a robot is causing an excessive load, you need to fix the code itself, because there are a lot of robots, and most of them ignore whatever you write in robots.txt or the sitemap.

The site should not crash or consume the entire server when crawled by bots.

155600 - what are you selling?

Or do you have a scientific library?

Or is it simply all kinds of GET queries from the filter?

If so, prohibit indexing them:

Add a Disallow rule in robots.txt and specify the canonical URL.

Close the filters off behind AJAX.

Exclude from the sitemap.
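
As a rough illustration of the Disallow advice, a robots.txt fragment might look something like the one below. The parameter names `sort`, `price_from` and `price_to` are made up for the example, since the real filter parameters of the site are not shown here. The `*` wildcard is understood by the major search engines, but as noted above, many smaller bots ignore robots.txt altogether, so this only helps with well-behaved crawlers.

```
# Hypothetical filter parameters: replace with the real GET parameters of the site.
User-agent: *
# Block any URL whose query string contains one of the filter parameters.
Disallow: /*?*sort=
Disallow: /*?*price_from=
Disallow: /*?*price_to=
```

Pages blocked this way should also be left out of the sitemap, otherwise the crawler gets contradictory signals.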

Post a link to the site.
