Answer the question

In order to leave comments, you need to log in

How to optimize these queries on Laravel?

Requests take up too much time and memory because there is a lot of data in the database. I need to speed up somehow. I have changed the code many times and still do not know how to make it even faster.

I have three graphs on the main page. There we choose from which date to which to show and I get data for every day.

First, I convert the date to the desired format:

if ($from) {

$dateFrom = Date::createFromFormat('d.m.Y G:i', $from);

}

if ($to) {

$dateTo = Date::createFromFormat('d.m.Y G:i', $to);

}$tableDate = [

[

'count_sales' => [],

],

[

'new_users' => [],

],

[

'success_payments' => [],

],

'days' => [],

];// Все дни с первого по последнего.

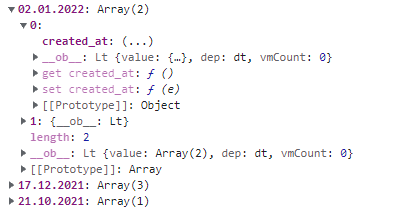

$count_days = floor(abs(strtotime($dateFrom) - strtotime($dateTo)) / 86400) + 1;$count_sales = ActiveService::orderBy('created_at')

->whereBetween('updated_at', [$dateFrom, $dateTo])

->get('created_at')

->groupBy(function($events) {

return Carbon::parse($events->created_at)->format('d.m.Y');

});

$new_users = User::orderBy('created_at')

->whereBetween('created_at', [$dateFrom, $dateTo])

->get('created_at')

->groupBy(function($events) {

return Carbon::parse($events->created_at)->format('d.m.Y')

});

$success_payments = HistoryPayment::orderBy('created_at')

->whereBetween('created_at', [$dateFrom, $dateTo])

->where('operation', 'top_up')

->where('status_transaction', 'success')

->where('payment_id', '!=', '0')

->select(['amount', 'created_at'])

->get()

->groupBy(function($events) {

return Carbon::parse($events->created_at)->format('d.m.Y');

});

for ($i=0; $i < $count_days; $i++) {

$day = date('d.m.Y', strtotime($dateFrom) + 86400 * $i);

array_push($tableDate['days'], $day);

$tableDate[0]['count_sales'][$i] = 0;

$tableDate[1]['new_users'][$i] = 0;

$tableDate[2]['success_payments'][$i] = 0;

// Кол-во успешных активаций (продаж) по дням.

if($count_sales->get($day) !== null) {

$count = $count_sales->get($day)->count();

$tableDate[0]['count_sales'][$i] = $count;

}

// Кол-во регистраций по дням

if($new_users->get($day) !== null) {

$count = $new_users->get($day)->count();

$tableDate[1]['new_users'][$i] = $count;

}

// Сумма успешных оплат по дням

if($success_payments->get($day) !== null) {

$amounts = $success_payments->get($day);

foreach ($amounts as $value) {

$tableDate[2]['success_payments'][$i] += $value->amount;

}

}

}if($count_sales->get($day) !== null)return new Collection([

'tableDate' => $tableDate,

]);Answer the question

In order to leave comments, you need to log in

As far as I remember, ->get() makes a request to the database and returns a collection. And this is a lot of data and your project will die, use groupBy as part of the query and calculate on the DB side before the get () call, and not after, when you are no longer working with a query builder, but with a huge collection.

This is a task, not a question.

Well, if the question is, then rewrite the code so that 1 request to the database is formed and data is already retrieved from it.

No problem, I've done this many times.

Well, if the task, then $200/h and in a few hours we will make the necessary request :)

Write optimized queries to the database. Grouping is mandatory on the request side. 99% that this will solve your problem, because you definitely don’t have much data. And if suddenly, then explain and indexes to help.

1. Place the correct indexes in the tables.

2. Build queries in the mysql client, debug them and make sure they run with minimal time.

3. Build queries through the framework tools.

* Select from the database only those columns that are necessary for further use.

Didn't find what you were looking for?

Ask your questionAsk a Question

731 491 924 answers to any question