
How to optimize performance in Jupyter Notebook?

Good day.

I'm taking a course on machine learning on Stepik and ran into a rather unpleasant problem.

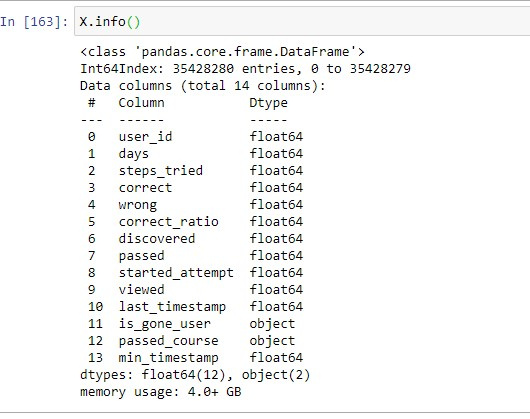

During data preparation, the final dataframe grew to an enormous size. Is there any way to optimize the use of the computer's resources? Otherwise it is impossible to work. When I run the cell, the computer simply hangs. Even displaying the message in the screenshot nearly froze it.

The computer has an old Intel Core i3 and 8 GB of DDR3 RAM. Or do I need to upgrade the hardware after all?


First of all, look at how the memory is being used:

df.memory_usage(deep=True)

And then optimize the type of each column:

df['object'] = df['object'].astype('category')

I changed the type of one of the columns from float64 to int via df.step_id.astype(int), and memory usage dropped from 4 GB+ to 3.4 GB. Admittedly, the computer was completely unusable for 15 minutes while the type conversion was running.
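The two steps from the answer (measure memory, then shrink each column's type) can be sketched roughly as follows. This is only an illustration under stated assumptions: the small dataframe, the `action` column, and the `optimize_dtypes` helper with its `max_unique_ratio` threshold are hypothetical and not from the course data. Only `step_id` appears in the thread.

```python
import numpy as np
import pandas as pd

# Hypothetical stand-in for the course data; only `step_id` is named in the thread.
rng = np.random.default_rng(0)
n = 100_000
df = pd.DataFrame({
    "step_id": rng.integers(0, 200, size=n).astype("float64"),
    "action": rng.choice(["viewed", "passed", "started_attempt"], size=n),
})


def optimize_dtypes(frame, max_unique_ratio=0.5):
    """Return a copy of `frame` with smaller column dtypes where that is lossless."""
    out = frame.copy()
    for col in out.columns:
        s = out[col]
        if pd.api.types.is_float_dtype(s):
            # Switch to integers only if there are no NaNs and every value is whole.
            if s.notna().all() and (s == s.round()).all():
                out[col] = pd.to_numeric(s.astype("int64"), downcast="integer")
        elif pd.api.types.is_integer_dtype(s):
            out[col] = pd.to_numeric(s, downcast="integer")
        elif pd.api.types.is_object_dtype(s) or pd.api.types.is_string_dtype(s):
            # 'category' pays off when a text column has few distinct values.
            if s.nunique() / max(len(s), 1) < max_unique_ratio:
                out[col] = s.astype("category")
    return out


before = df.memory_usage(deep=True).sum()
small = optimize_dtypes(df)
after = small.memory_usage(deep=True).sum()
print(f"{before / 1e6:.1f} MB -> {after / 1e6:.1f} MB")
```

Note that this helper copies the dataframe, so peak memory is briefly higher than before. On a machine that is already close to its RAM limit, it is probably safer to convert one column at a time in place, as in the reply above.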
