Answer the question

In order to leave comments, you need to log in

How to optimize file handling in Python?

Hello! Prompt who than can on a following question.

There are 16 thousand excels, the data from which I will need to merge together. However, the names of the sheets, despite the same content, differ, however, as well as their order. To begin with, I want to rename the sheets using Python and bring their names to a single form so that you can collect data from them using a single key. I sketched a code that collects unique sheet names from files and uploads them to a file for further processing:

def sheet_name_file():

from openpyxl import load_workbook, Workbook

import os

sheetname_set = set()

for dir in os.listdir('C:\\Users\Иван Данчук\Documents\Тест_shutil'):

path = f'C:\\Users\Иван Данчук\Documents\Тест_shutil\{dir}'

for file in os.listdir(path):

wb = load_workbook(os.path.join(path, file))

sheetname_set.update(wb.sheetnames[0:10])

print(file)

wb2 = Workbook()

ws = wb2.active

for row in range(1):

ws.append(list(sheetname_set))

wb2.save(f'unique_sheet_name_list_{dir}.xlsx')Answer the question

In order to leave comments, you need to log in

Or is it better to dive into VBA to solve such problems?

Function FilenamesCollection(ByVal FolderPath As String, Optional ByVal Mask As String = "", _

Optional ByVal SearchDeep As Long = 999) As Collection

' © EducatedFool excelvba.ru/code/FilenamesCollection

' Получает в качестве параметра путь к папке FolderPath,

' маску имени искомых файлов Mask (будут отобраны только файлы с такой маской/расширением)

' и глубину поиска SearchDeep в подпапках (если SearchDeep=1, то подпапки не просматриваются).

' Возвращает коллекцию, содержащую полные пути найденных файлов

' (применяется рекурсивный вызов процедуры GetAllFileNamesUsingFSO)

Set FilenamesCollection = New Collection ' создаём пустую коллекцию

Set FSO = CreateObject("Scripting.FileSystemObject") ' создаём экземпляр FileSystemObject

GetAllFileNamesUsingFSO FolderPath, Mask, FSO, FilenamesCollection, SearchDeep ' поиск

Set FSO = Nothing ' очистка строки состояния Excel

End Function

Function GetAllFileNamesUsingFSO(ByVal FolderPath As String, ByVal Mask As String, ByRef FSO, _

ByRef FileNamesColl As Collection, ByVal SearchDeep As Long)

' перебирает все файлы и подпапки в папке FolderPath, используя объект FSO

' перебор папок осуществляется в том случае, если SearchDeep > 1

' добавляет пути найденных файлов в коллекцию FileNamesColl

On Error Resume Next: Set curfold = FSO.GetFolder(FolderPath)

If Not curfold Is Nothing Then ' если удалось получить доступ к папке

' раскомментируйте эту строку для вывода пути к просматриваемой

' в текущий момент папке в строку состояния Excel

' Application.StatusBar = "Поиск в папке: " & FolderPath

For Each fil In curfold.Files ' перебираем все файлы в папке FolderPath

If fil.Name Like "*" & Mask Then FileNamesColl.Add fil.Path

Next

SearchDeep = SearchDeep - 1 ' уменьшаем глубину поиска в подпапках

If SearchDeep Then ' если надо искать глубже

For Each sfol In curfold.SubFolders ' перебираем все подпапки в папке FolderPath

GetAllFileNamesUsingFSO sfol.Path, Mask, FSO, FileNamesColl, SearchDeep

Next

End If

Set fil = Nothing: Set curfold = Nothing ' очищаем переменные

End If

End Function

Sub LoopThroughFiles(ByVal sDirName As String, ByRef lRow As Long, ByVal sMask As String)

On Error Resume Next

Dim folder$, coll As Collection

Dim EX As Excel.Application

Dim wkb As Workbook

Dim wks As Worksheet

Dim file As Variant

Dim i As Long

Dim v As Variant

folder$ = sDirName

If Dir(folder$, vbDirectory) = "" Then

MsgBox "Не найдена папка «" & folder$ & "»", vbCritical

Exit Sub ' выход, если папка не найдена

End If

Set coll = FilenamesCollection(folder$, sMask) ' получаем список файлов по маске из папки

If coll.Count = 0 Then

' MsgBox "В папке «" & Split(folder$, "\")(UBound(Split(folder$, "\")) - 1) & "» нет ни одного подходящего файла!", _

vbCritical, "Файлы для обработки не найдены"

Exit Sub ' выход, если нет файлов

End If

Set EX = New Application

EX.Visible = False

' перебираем все найденные файлы

For Each file In coll

Cells(lRow, 2) = CStr(file)

Set wkb = EX.Workbooks.Open(Filename:=file)

' Если книга не пуста

If wkb.Sheets.Count > 0 Then

i = 1

ReDim v(1 To wkb.Sheets.Count)

' Получаем названия листов

For Each wks In wkb.Sheets

v(i) = wks.Name

i = i + 1

Next wks

End If

Cells(lRow, 3) = Join(v, ",")

wkb.Close False

DoEvents

lRow = lRow + 1

DoEvents

Next file

Set wks = Nothing: Set wkb = Nothing: Set EX = Nothing

Set colShts = Nothing

End Sub

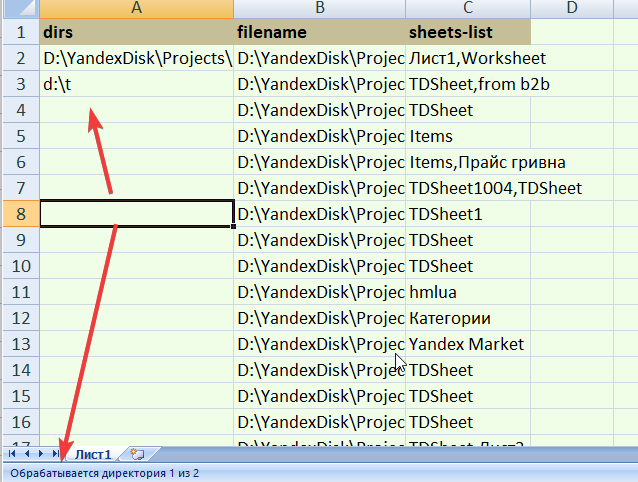

Sub LoopThroughDirs()

Dim lLastRow As Long

Dim lRow As Long

Dim i As Long

Dim v As Variant

Dim dTime As Double

lRow = 2

lLastRow = Cells(Rows.Count, 1).End(xlUp).Row

v = Range(Cells(2, 1), Cells(lLastRow, 2))

dTime = Time()

For i = LBound(v) To UBound(v)

Application.StatusBar = "Обрабатывается директория " & i & " из " & UBound(v)

Call LoopThroughFiles(v(i, 1), lRow, "*.xls")

Call LoopThroughFiles(v(i, 1), lRow, "*.xlsx")

Call LoopThroughFiles(v(i, 1), lRow, "*.xlsm")

DoEvents

Next i

MsgBox "Готово за " & CStr(CDate(Time() - dTime))

End Sub

You can write to CSV, save a lot on that. CSV files are then easily and quickly concatenated using system command line tools. You have Windows, so COPY, for example.

The most annoying thing that can happen is to wake up in the morning and see a monolithic giant file (the result of your script's night vigil) in which something went wrong somewhere after the first 30-60%.

In general, the main thing is whether this is a one-time task. If you load such a volume, say, every couple of days, then, of course, you need to use multiprocessing and set up a normal pipeline with automation and logs.

If we are talking about a one-time custom processing within the framework of R&D, then do not worry, do it as simple as possible.

Write to CSV. Break the volume with a simple slice into several pieces and stupidly start with separate scripts. This will save you time for debugging multiprocessing, no need to block queues, tasks and other things.

Questions about your code:

for row in range(1):

ws.append(list(sheetname_set))Didn't find what you were looking for?

Ask your questionAsk a Question

731 491 924 answers to any question