How to deal with broadcasting video from a Raspberry Pi?

Hello,

I found a library on GitHub for streaming video from a Raspberry Pi:

https://github.com/131/h264-live-player

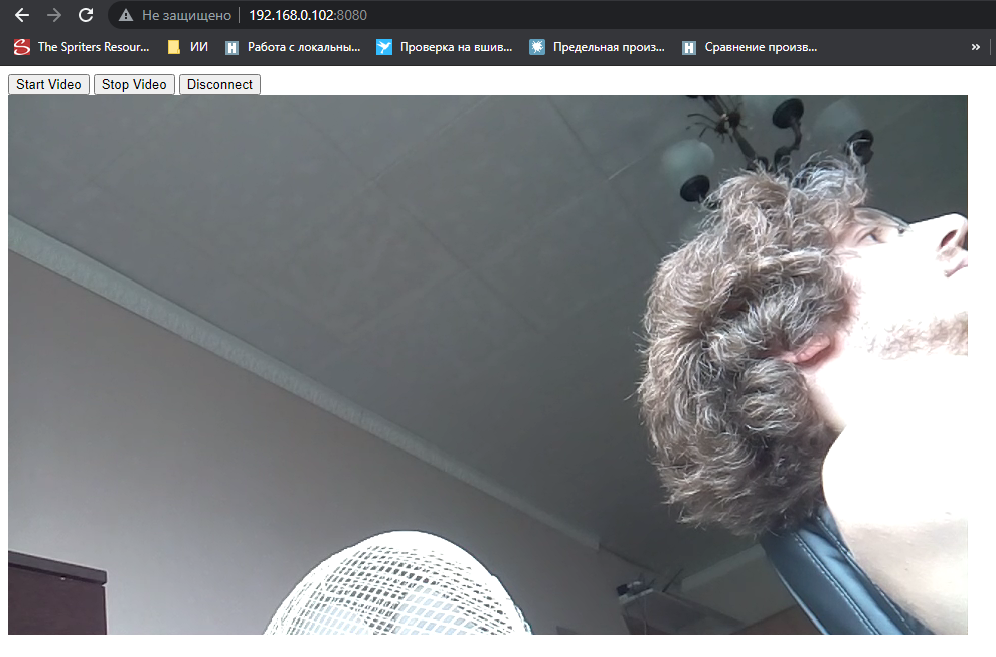

I unpacked it on the Raspberry Pi, ran node server-rpi.js without any changes, and opened 192.168.0.102:8080 in the browser on my home computer. I got a great live stream with no delay.

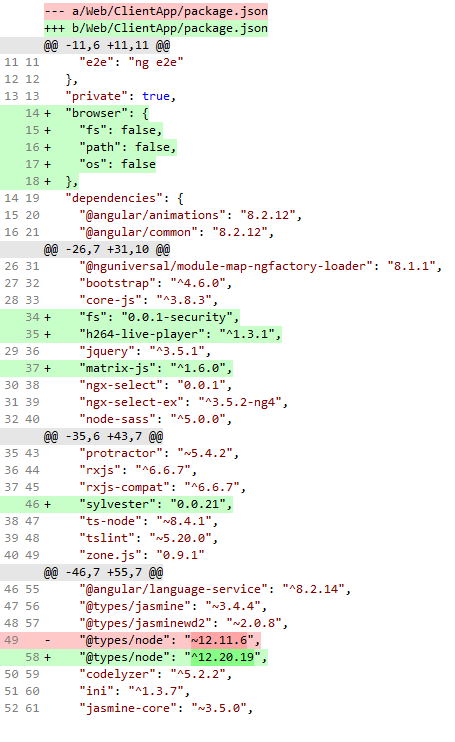

Then I needed to embed this live stream in my own website, because the same page also has to hold control buttons and information panels next to the video. I'm developing the site in Visual Studio in C#, with Angular on the front end (though Angular is not a requirement). I made an iframe that points at the page described above:

<iframe [src]="getVideoUrl" style="width: 1000px; height: 600px;"></iframe>

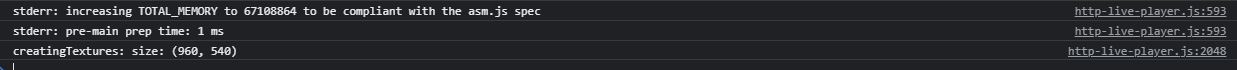

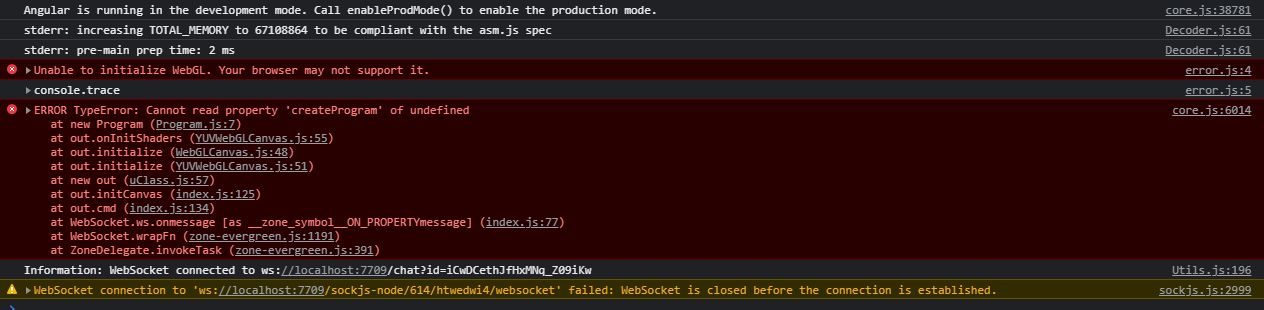

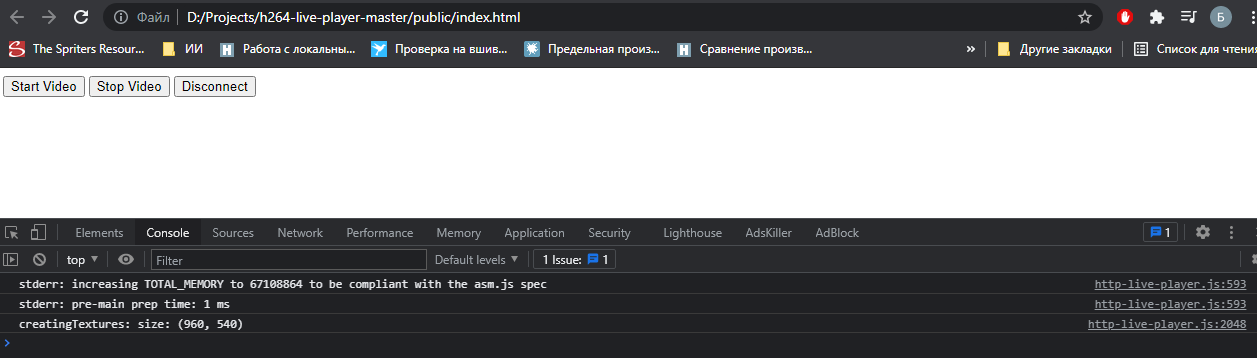

stderr: increasing TOTAL_MEMORY to 67108864 to be compliant with the asm.js spec

stderr: pre-main prep time: 1 ms

creatingTextures: size: (960, 540)

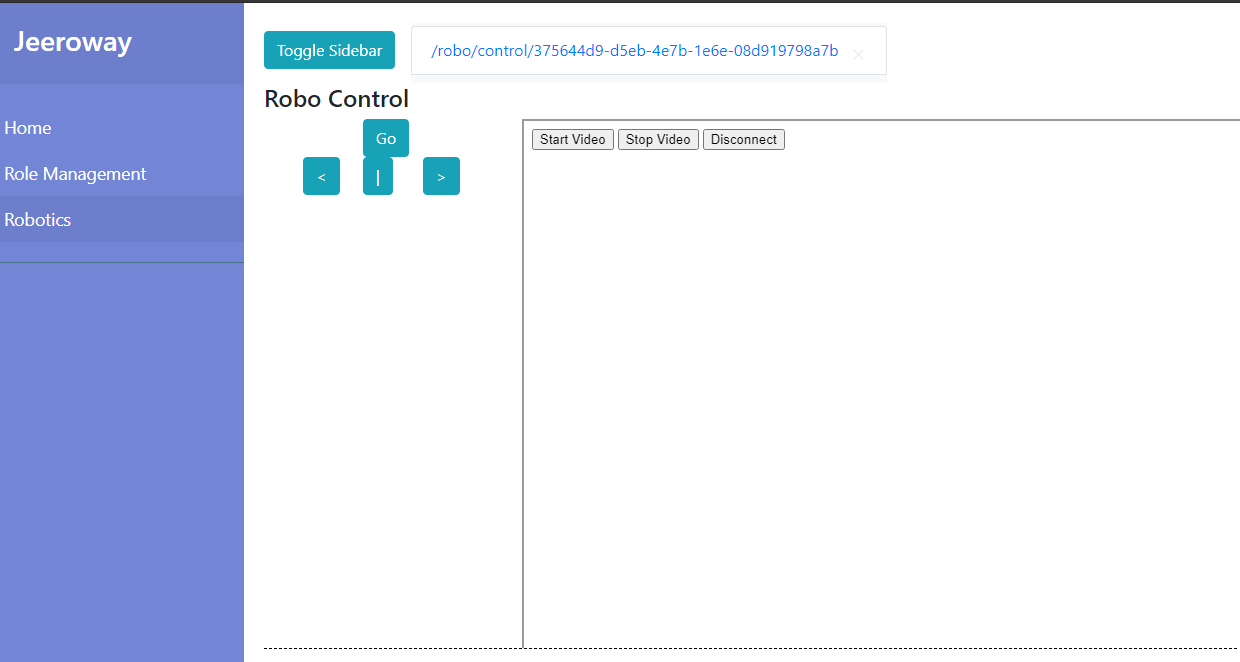

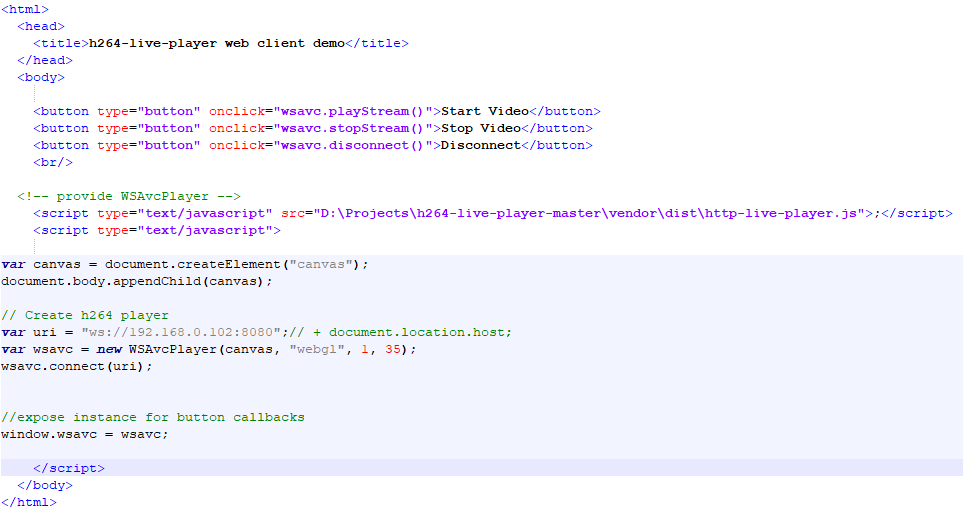

<div class="col-md-9 text-center">
  <button type="button" (click)="wsavc.playStream()">Start Video</button>
  <button type="button" (click)="wsavc.stopStream()">Stop Video</button>
  <button type="button" (click)="wsavc.disconnect()">Disconnect</button>
  <br />
  <canvas #roboVideo></canvas>
</div>

var WSAvcPlayer = require('h264-live-player/wsavc/index.js');

public wsavc: any;

ngAfterViewInit() {
  var uri = "ws://192.168.0.102:8080";
  this.wsavc = new WSAvcPlayer(this.roboVideo, "webgl", 1, 35);
  this.wsavc.connect(uri);
}

1) Read Harutyunyan's articles, all of them, from start to finish:

2) Build yourself a mental model of the codec, the media container, the transport, and how they relate to each other.

You need to understand what the first, the second, and the third are (no, this is not a canteen menu), and how each one affects the others.

For example, you need to understand that the codec must be tuned for real time, namely by raising its key frame rate as high as practical (in other words, shortening the interval between key frames). Otherwise a viewer who has just connected may have to wait half a minute for the next I-frame.
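To put a number on that wait, here is a minimal sketch. The function name and the sample values are made up for illustration; the only assumption is that a player cannot start decoding until a key frame arrives, so the worst-case start-up delay is roughly one key frame interval.

```typescript
// Worst-case time a newly connected viewer waits for the next key frame (I-frame).
// gopSizeFrames = number of frames between two consecutive key frames.
function worstCaseKeyframeWaitSeconds(gopSizeFrames: number, fps: number): number {
  if (gopSizeFrames <= 0 || fps <= 0) {
    throw new RangeError("gopSizeFrames and fps must be positive");
  }
  return gopSizeFrames / fps;
}

// Illustrative numbers only, not taken from the thread.
const sparseKeyframes = worstCaseKeyframeWaitSeconds(750, 25); // 30
const denseKeyframes = worstCaseKeyframeWaitSeconds(25, 25);   // 1
console.log(sparseKeyframes, denseKeyframes);
```

A shorter interval costs bitrate, because key frames are much larger than the frames between them, so "maximum" in practice means "as short as your bandwidth tolerates".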

You also need to understand that the platform and the browser restrict which codec, container, and transport you can use: an old iPhone understands only H.264 baseline, packed as an H.264 Annex B stream and delivered over HLS

(and that is just one possible chain).
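One way to keep these restrictions manageable is to write each chain down as data. The sketch below is only an illustration: `DeliveryChain`, `chains`, `pickChain`, and the key `"old-iphone"` are hypothetical names, and the single entry is the combination named above. Any other client would need its own entry, which is deliberately left out here.

```typescript
// One codec + container + transport combination that a given client can play.
interface DeliveryChain {
  codec: string;
  container: string;
  transport: string;
}

// Only the chain mentioned in the answer is filled in.
const chains: Record<string, DeliveryChain> = {
  "old-iphone": {
    codec: "H.264 baseline",
    container: "H.264 Annex B stream",
    transport: "HLS",
  },
};

// Returns the chain for a client, or undefined when no chain has been defined yet.
function pickChain(client: string): DeliveryChain | undefined {
  return Object.prototype.hasOwnProperty.call(chains, client)
    ? chains[client]
    : undefined;
}

console.log(pickChain("old-iphone"));
console.log(pickChain("some-other-device")); // undefined
```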

That is, for a live broadcast, whatever comes out of the codec has to be put into the right container and sent over the right transport.

ffmpeg handles the first two tasks; the broadcast server handles the third.
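As a rough sketch of the ffmpeg half, the helper below only builds an argument list for the old-iPhone chain (H.264 baseline, HLS output) and prints it; it does not start ffmpeg. `EncodeOptions`, `buildFfmpegArgs`, `pipe:0`, `stream.m3u8`, and the key frame interval of 25 are assumptions for illustration, and `-tune zerolatency` is my guess at a sensible real-time setting rather than something from the answer. The broadcast server that would serve the resulting files is not shown.

```typescript
interface EncodeOptions {
  input: string;         // e.g. "pipe:0" to read the camera stream from stdin
  output: string;        // e.g. an HLS playlist file name
  gopSizeFrames: number; // frames between key frames
}

// Builds ffmpeg arguments: codec settings first, then the output format.
function buildFfmpegArgs(opts: EncodeOptions): string[] {
  return [
    "-i", opts.input,
    "-an",                            // drop audio, the stream is video only
    "-c:v", "libx264",                // H.264 encoder
    "-profile:v", "baseline",         // profile old devices can decode
    "-tune", "zerolatency",           // assumption: favour latency over compression
    "-g", String(opts.gopSizeFrames), // key frame interval
    "-f", "hls",                      // write an HLS playlist plus segments
    opts.output,
  ];
}

const exampleArgs = buildFfmpegArgs({
  input: "pipe:0",
  output: "stream.m3u8",
  gopSizeFrames: 25,
});
console.log(["ffmpeg"].concat(exampleArgs).join(" "));
```

In a Node.js process the same array could be handed to `child_process.spawn("ffmpeg", exampleArgs)`.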

The more devices you need to support, the more broadcast configurations and transcoded streams you end up with (if you want to broadcast in several resolutions, ffmpeg has to do the heavy lifting: decode, scale, encode). If you want the stream to be watchable on a Linux box, encode in VP8/VP9.

An answer covering all the options would fill a whole book; I once spent two months setting up corporate streaming video calls so that they met all the required wishes.
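To make the decode, scale, encode step a little more concrete, here is a sketch that produces the per-rendition part of an ffmpeg command line. `Rendition` and `renditionArgs` are hypothetical names. The 960x540 size is the one the player logged in the question and 480x270 is a made-up smaller variant. `libx264`, `libvpx`, and `libvpx-vp9` are ffmpeg's encoder names for H.264, VP8, and VP9.

```typescript
interface Rendition {
  width: number;
  height: number;
  encoder: "libx264" | "libvpx" | "libvpx-vp9";
}

// Scale filter plus encoder choice for one output stream.
function renditionArgs(r: Rendition): string[] {
  return ["-vf", `scale=${r.width}:${r.height}`, "-c:v", r.encoder];
}

// Every extra device family or resolution adds one more transcode.
const ladder: Rendition[] = [
  { width: 960, height: 540, encoder: "libx264" },
  { width: 480, height: 270, encoder: "libx264" },
  { width: 960, height: 540, encoder: "libvpx" },
];

for (const r of ladder) {
  console.log(renditionArgs(r).join(" "));
}
```

Each line printed is one more encode the Raspberry Pi or a separate transcoding machine has to sustain in real time, which is why the list of supported devices should stay as short as possible.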
