How to cache requests to the service API correctly?

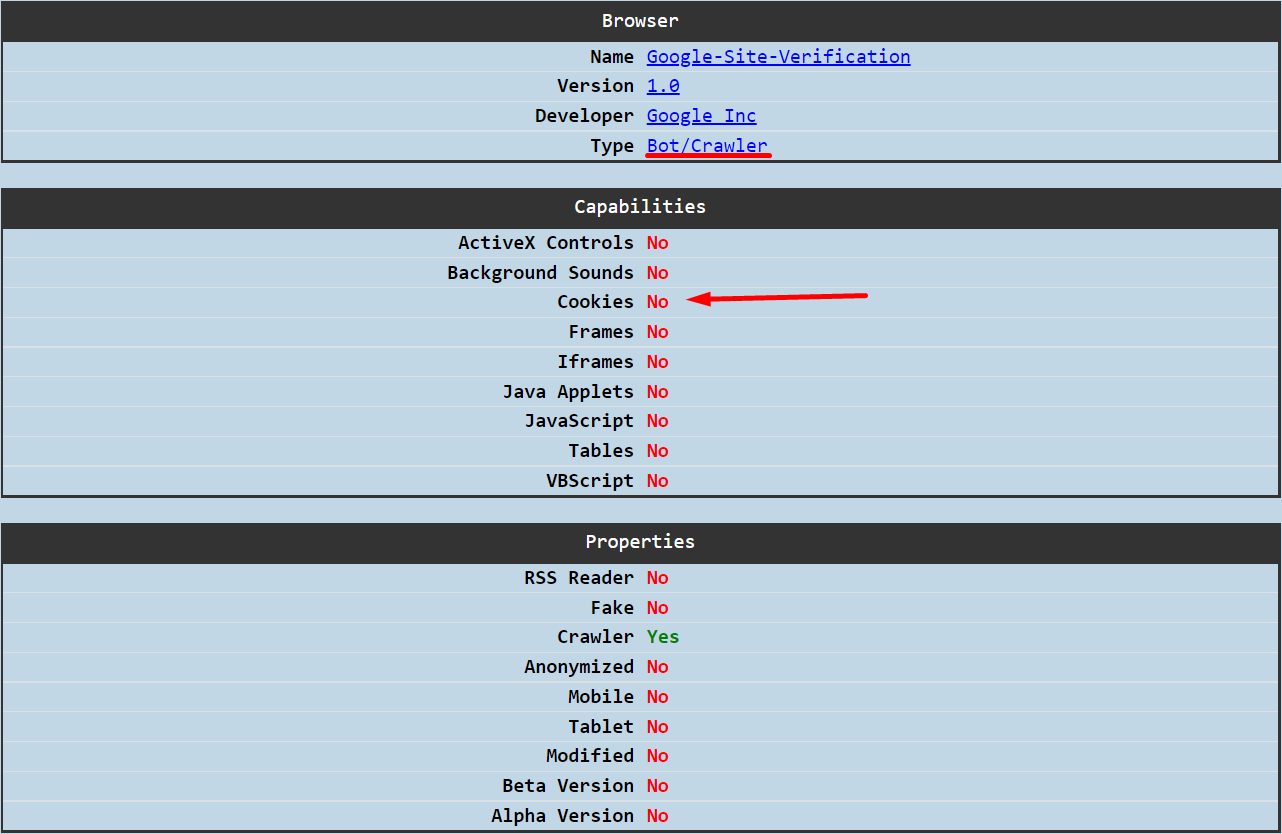

Every time a PHP page on my site is opened, it calls an external service through its API to fetch an array of data. The service limits the number of API calls per day per account. I noticed that I use up the daily quota very quickly, even though the site gets far fewer visits than the number of calls made to the service, and that is counting every visitor, including search engines and other bots. For example, about 100 people visited the site, yet about 500 requests were sent to the service.

Here is the call code:

// Store selected keys from the array in variables

$key1 = getData()['Key1'];

$key2 = getData()['Key2'];

$key3 = getData()['Key3'];

$key4 = getData()['Key4'];

$key5 = getData()['Key5'];

$key6 = getData()['Key6'];

// Send the request to the service and get back the array of data

function getData() {

    return curl('https://api.example.com/json_array');

}

// I won't paste the curl function here; it is an ordinary curl request.

// Its real name is different; I call it curl here just for the example.

// After that I work with this data somehow...

// Call the service once and reuse the returned array

$myarray = getData();

$key1= $myarray['Key1'];

$key2= $myarray['Key2'];

$key3= $myarray['Key3'];

$key4= $myarray['Key4'];

$key5= $myarray['Key5'];

$key6= $myarray['Key6'];

// Version of getData() that caches the array in a cookie

function getData() {

    if (isset($_COOKIE['arrayData']) && !empty($_COOKIE['arrayData'])) {

        return unserialize($_COOKIE['arrayData']);

    } else {

        $arrayData = curl('https://api.example.com/json_array');

        setcookie('arrayData', serialize($arrayData));

        // Return the array that was just fetched. My first version called curl() a second time here, which was a mistake.

        return $arrayData;

    }

}
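
A likely explanation for the gap between visits and requests: the first listing calls getData() once for every key, so a single page view sends six requests instead of one, and about 100 visits could produce up to about 600 requests. The sketch below only counts calls. Its getData() is a hypothetical stand-in that returns made-up values instead of contacting the service, and $apiCalls, $callsPerKey and $callsOnce are names introduced for the illustration.

```php
<?php
// Counter standing in for "requests sent to the service" (illustration only).
$apiCalls = 0;

// Hypothetical stand-in for the real getData(): no network, just counts calls.
function getData(): array
{
    global $apiCalls;
    $apiCalls++; // in the real code this is where the remote request happens
    return [
        'Key1' => 'a', 'Key2' => 'b', 'Key3' => 'c',
        'Key4' => 'd', 'Key5' => 'e', 'Key6' => 'f',
    ];
}

// Pattern from the first listing: one getData() call per key.
$key1 = getData()['Key1'];
$key2 = getData()['Key2'];
$key3 = getData()['Key3'];
$key4 = getData()['Key4'];
$key5 = getData()['Key5'];
$key6 = getData()['Key6'];
$callsPerKey = $apiCalls;

// Pattern from the second listing: one call, result reused for every key.
$apiCalls = 0;
$myarray = getData();
$key1 = $myarray['Key1'];
$key2 = $myarray['Key2'];
$key3 = $myarray['Key3'];
$key4 = $myarray['Key4'];
$key5 = $myarray['Key5'];
$key6 = $myarray['Key6'];
$callsOnce = $apiCalls;

echo "per-key: $callsPerKey, single: $callsOnce\n"; // prints "per-key: 6, single: 1"
```

Storing the result in a variable already cuts the requests per page view to one, before any caching is added.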

Sessions and cookies work differently. With cookies the data is stored on the client side. With sessions it is the other way around: the data stays on the server and only the session identifier travels over the network. So if cookies don't work for you, sessions should still work as usual.

Try storing the data in the session, though it's better not to: what will you do when the number of unique visitors exceeds your limit of 500 requests? Redo everything?
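
For reference, storing the array in the session could look roughly like the sketch below. It is a minimal illustration, not a definitive implementation: getCachedData(), the $fetch callback, the 'arrayData' key layout and the one-hour expiry are all assumptions added here, and the demo uses a plain array and a fake fetcher so it runs without a network or a real session. In a page you would call session_start() first and pass $_SESSION. Because the data stays on the server, nothing sent by the visitor has to be unserialized.

```php
<?php
/**
 * Return the API data, reusing the copy kept in the session while it is fresh.
 *
 * $fetch   callable that performs the real request (stand-in for curl())
 * $session session storage, normally $_SESSION after session_start()
 * $ttl     how long a cached copy is reused, in seconds (assumed value)
 * $now     current Unix time; injectable so the expiry logic can be tested
 */
function getCachedData(callable $fetch, array &$session, int $ttl = 3600, ?int $now = null): array
{
    $now = $now ?? time();
    $entry = $session['arrayData'] ?? null;

    // Reuse the stored copy if it exists and has not expired yet.
    if (is_array($entry) && isset($entry['data'], $entry['expires']) && $entry['expires'] > $now) {
        return $entry['data'];
    }

    // Otherwise make exactly one request and remember the result.
    $data = $fetch();
    $session['arrayData'] = ['data' => $data, 'expires' => $now + $ttl];
    return $data;
}

// Demo with a fake fetcher that counts how often the "API" is hit.
$calls = 0;
$fakeFetch = function () use (&$calls) {
    $calls++;
    return ['Key1' => 'value1'];
};

$store = []; // stands in for $_SESSION
getCachedData($fakeFetch, $store, 3600, 1000); // no copy yet: fetches
getCachedData($fakeFetch, $store, 3600, 1500); // fresh copy: no fetch
getCachedData($fakeFetch, $store, 3600, 5000); // copy expired at 4600: fetches again

echo $calls, "\n"; // prints 2
```

This still costs at least one request per visitor session, which is exactly the limit problem the answer warns about.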

I assume you're using a free API with a daily request limit and without an API auth key? If so, I would move it all to the client side: a regular ajax request to the API which, on success, also sends the received data to your PHP handler via ajax. In that handler you can use sessions, so that the client does not send requests to the API again after reloading the page.
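
The server half of that idea might be sketched as follows, assuming the browser posts the API response to the handler as a JSON body. storeClientData() and the 'arrayData' session key are hypothetical names, and the demo passes a plain array instead of $_SESSION so it runs on its own. Anything arriving from the browser is untrusted, so the sketch only accepts a body that decodes to an array.

```php
<?php
/**
 * Validate a JSON body posted by the browser and keep it in the session.
 * Returns true when the body decoded to an array and was stored.
 */
function storeClientData(string $rawBody, array &$session): bool
{
    $data = json_decode($rawBody, true);

    // json_decode() returns null for invalid JSON; scalars are rejected too.
    if (!is_array($data)) {
        return false;
    }

    $session['arrayData'] = $data;
    return true;
}

// In a real handler (assumed wiring, not run here):
//   session_start();
//   $ok = storeClientData(file_get_contents('php://input'), $_SESSION);
//   http_response_code($ok ? 204 : 400);

// Demo with a plain array standing in for $_SESSION.
$session = [];
$accepted = storeClientData('{"Key1":"value1","Key2":"value2"}', $session);
$rejected = storeClientData('not json', $session);

var_dump($accepted); // bool(true)
var_dump($rejected); // bool(false)
```

On later page loads the PHP code can read $_SESSION['arrayData'] and skip the API request when it is present.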
