How to bypass parser blocking?

I ran into the following problem: when I parse a site with requests, the site blocks the parser after the second request (that is, I cannot make more than two requests in two minutes), whereas a browser gets blocked only after 10-11 requests (refreshing the page with Ctrl+F5).

Site: https://steamcommunity.com/profiles/76561198077051...

I make the requests with a plain requests.get() and have only tried passing headers with a User-Agent.

My question is: what arguments can I pass besides the User-Agent, and what is there besides headers? I have heard that sites can detect the screen resolution and other details; is it possible to simulate all of that somehow? I would rather not use a proxy, but if there is no other option, I will.

My headers:

headers = {'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64; rv:79.0) Gecko/20100101 Firefox/79.0'}

I also tried changing the headers after each request; that does not help either.

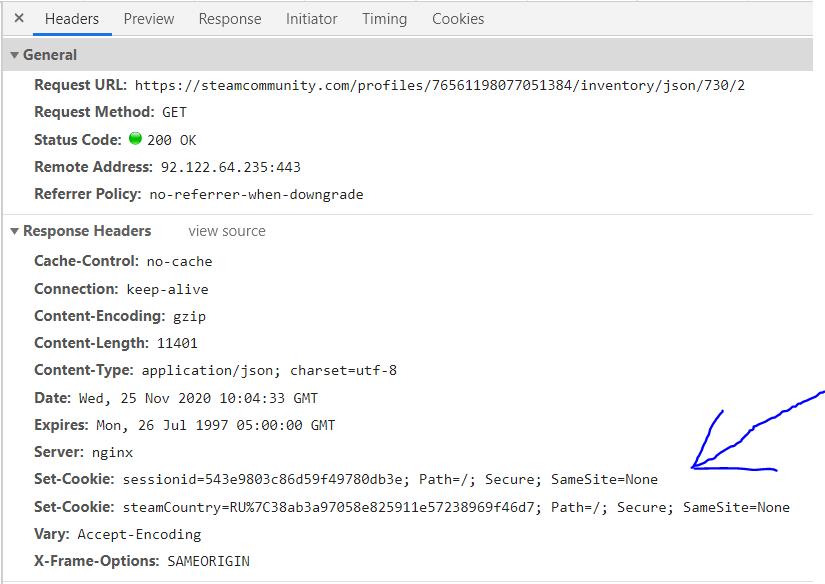

When you make your first request, the site sets cookies. If your subsequent requests do not send those cookies back, the site will, quite rightly, ban you as a bot.
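A minimal sketch of returning cookies with requests, assuming cookies really are what the site checks (this is not verified against the site in the question). A requests.Session stores whatever cookies the server sets and sends them back on later requests made through the same session. The helper names make_session and fetch_twice and the example.com URL are placeholders for illustration only.

```python
import requests

# Same User-Agent string as in the question.
HEADERS = {
    "User-Agent": "Mozilla/5.0 (Windows NT 10.0; Win64; x64; rv:79.0) "
                  "Gecko/20100101 Firefox/79.0"
}


def make_session():
    """Create a session that keeps cookies and resends them automatically."""
    session = requests.Session()
    session.headers.update(HEADERS)  # applied to every request in this session
    return session


def fetch_twice(session, url):
    """Request the same URL twice through one session.

    Cookies set by the first response are stored in session.cookies
    and sent back with the second request.
    """
    first = session.get(url, timeout=10)
    second = session.get(url, timeout=10)
    return first.status_code, second.status_code


if __name__ == "__main__":
    # Placeholder URL (hypothetical); substitute the page you are parsing.
    session = make_session()
    print(fetch_twice(session, "https://example.com/"))
    print("cookies held by the session:", session.cookies.get_dict())
```

With a bare requests.get() every call starts with an empty cookie jar, which is why the second request looks like it comes from a new, cookie-less client. If this alone does not help, the site may be checking something else.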

The ultimate option is to negotiate API access with the site's owners: you are making money from this, so share it with the creators of the resource.
