How to avoid long GC pauses when creating many small objects?

Good afternoon.

There is a server that performs pathfinding calculations. For each calculation, a local HashMap is created and filled with small Node objects (about 50 bytes per Node, plus about 50 bytes per Position, which is a field of Node).
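A minimal sketch of the data layout described above, useful for reproducing the allocation pattern. The names `Node` and `Position` come from the question; their fields (`x`, `y`, `cost`, `parent`) and the helper `PerCalculationMap.visitRow` are hypothetical, since the original code is not shown.

```java
import java.util.HashMap;
import java.util.Map;

// Grid coordinate used as the HashMap key (fields are hypothetical).
final class Position {
    final int x, y;

    Position(int x, int y) { this.x = x; this.y = y; }

    @Override public boolean equals(Object o) {
        if (!(o instanceof Position)) return false;
        Position p = (Position) o;
        return x == p.x && y == p.y;
    }

    @Override public int hashCode() { return 31 * x + y; }
}

// One search node per visited cell; its Position is a separate object,
// so every visited cell costs at least two small heap allocations.
final class Node {
    final Position position;
    final int cost;      // accumulated path cost
    final Node parent;   // back-pointer for path reconstruction

    Node(Position position, int cost, Node parent) {
        this.position = position;
        this.cost = cost;
        this.parent = parent;
    }
}

final class PerCalculationMap {
    // Hypothetical calculation: walk one row and record every cell.
    // The map is local, so all its Node/Position objects become garbage
    // as soon as the calculation returns.
    static Map<Position, Node> visitRow(int width) {
        Map<Position, Node> visited = new HashMap<>();
        Node prev = null;
        for (int x = 0; x < width; x++) {
            Position p = new Position(x, 0);
            Node n = new Node(p, x, prev);
            visited.put(p, n);
            prev = n;
        }
        return visited;
    }
}
```

Objects like these normally die young, which the young-generation collector handles cheaply; pauses grow when many of them stay reachable long enough to be copied repeatedly or promoted.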

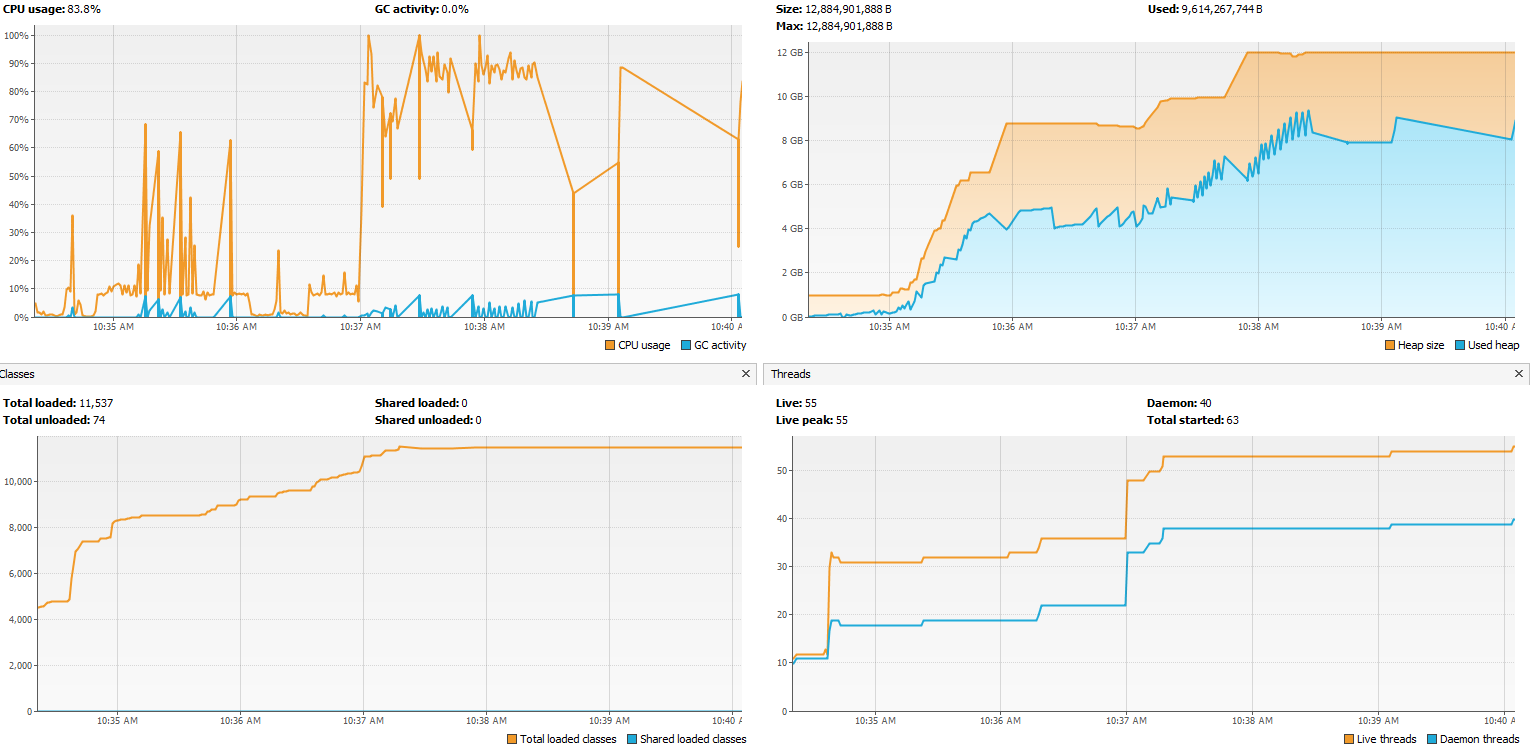

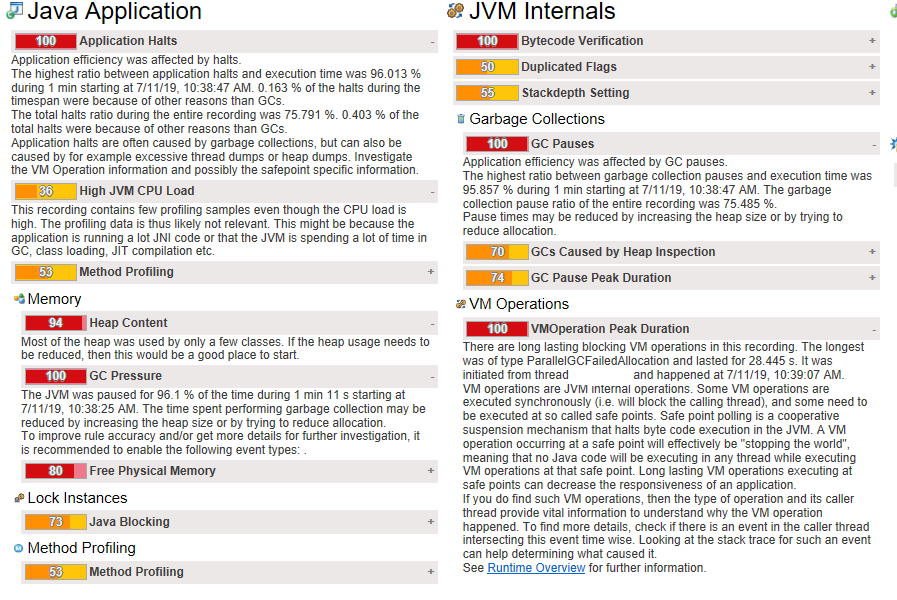

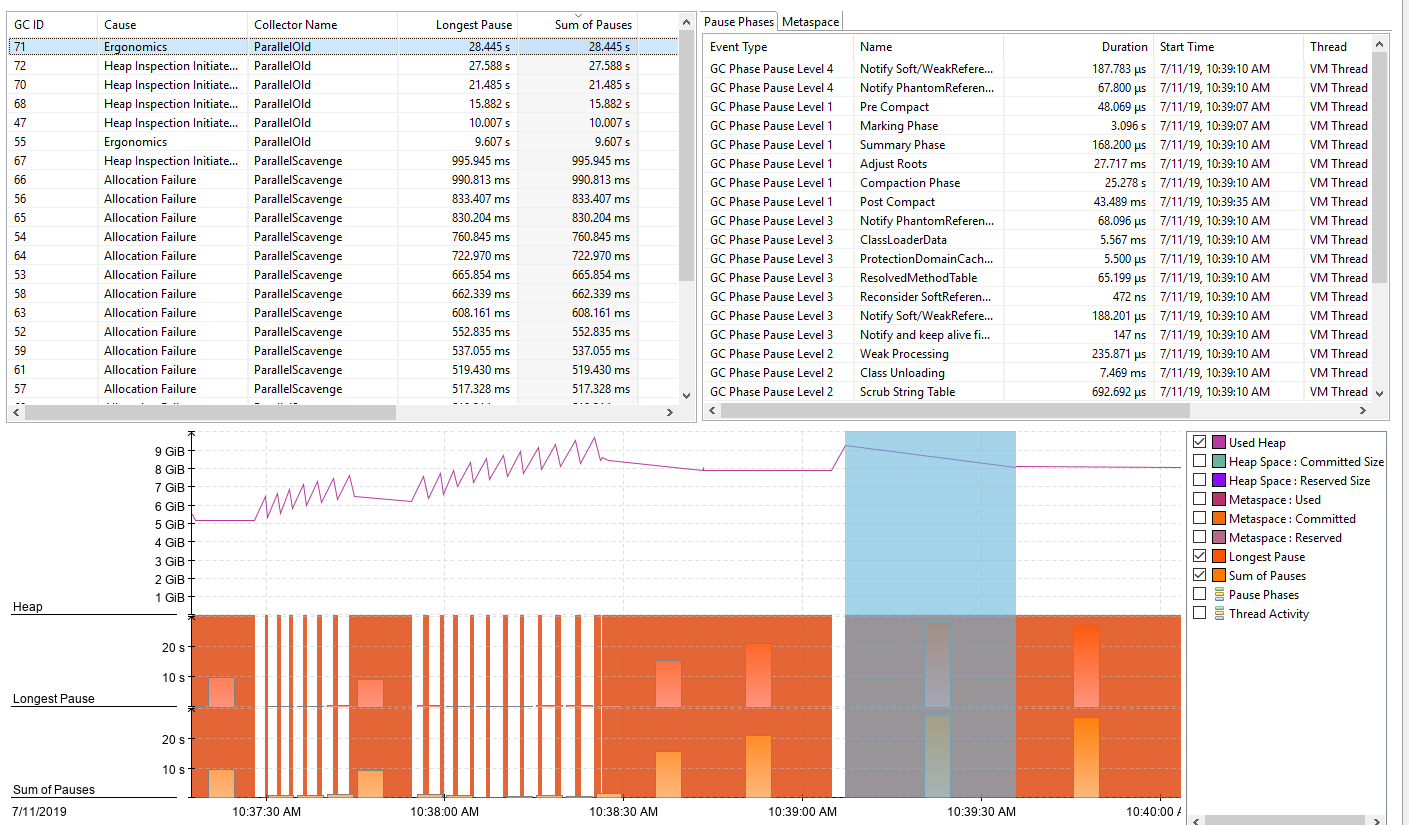

The server is started with the following parameters:

- `-Xmx12g`
- `-Xms1g`
- `-server`
- `-XX:+UseParallelGC`
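To check which collectors these flags actually install and how much time they spend, the JVM exposes them through the standard `java.lang.management` API. A minimal sketch (the class name `GcReport` is illustrative); under `-XX:+UseParallelGC` on HotSpot the collectors are typically reported as `PS Scavenge` and `PS MarkSweep`. Note that `getCollectionTime()` is accumulated time, not the length of the longest pause.

```java
import java.lang.management.GarbageCollectorMXBean;
import java.lang.management.ManagementFactory;
import java.util.ArrayList;
import java.util.List;

final class GcReport {
    // Returns the JVM flags in effect, followed by one line per registered collector.
    static List<String> describe() {
        List<String> lines = new ArrayList<>();
        lines.add("JVM args: " + ManagementFactory.getRuntimeMXBean().getInputArguments());
        for (GarbageCollectorMXBean gc : ManagementFactory.getGarbageCollectorMXBeans()) {
            // getCollectionTime(): approximate accumulated collection time in ms (-1 if undefined).
            lines.add(gc.getName() + ": collections=" + gc.getCollectionCount()
                    + ", totalTimeMs=" + gc.getCollectionTime());
        }
        return lines;
    }

    public static void main(String[] args) {
        describe().forEach(System.out::println);
    }
}
```

Sampling this periodically (or simply enabling GC logging) shows whether the time goes to frequent young collections or to a few long full collections.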

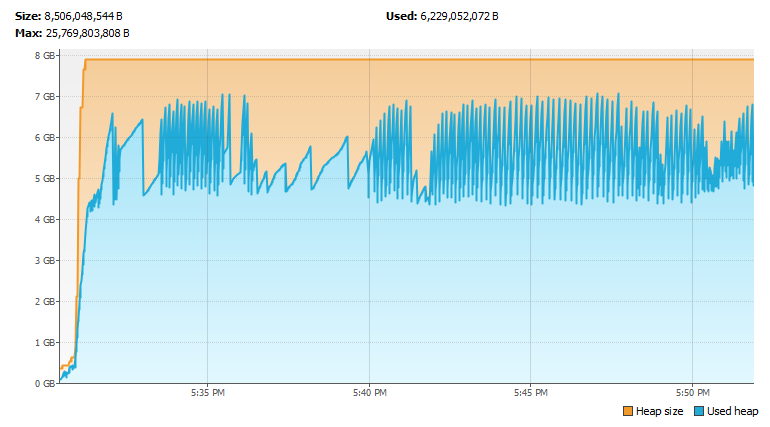

Everything is fixed now. During refactoring I introduced a bug in code that was not covered by tests: the main thread started processing too many nodes, skipping the check against the maximum allowed number. This caused memory consumption to grow sharply, while the other threads completed their work normally and the GC reclaimed the memory they had used. That is what confused me, since judging by the graph, memory was still being freed from time to time.

I looked at the number of Node objects and the queues they are stored in: there were about 10,000,000 Nodes per queue, far more than the maximum allowed. After the bug was fixed, the main thread went back to performing its task correctly, and memory growth and GC pauses stopped being a problem.
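The fix amounts to enforcing a node budget inside the search loop. A minimal sketch, assuming a breadth-first search on an open grid; `BoundedSearch`, `MAX_NODES` and its value are hypothetical, since the actual limit and search code are not given.

```java
import java.util.ArrayDeque;
import java.util.HashSet;
import java.util.Set;

final class BoundedSearch {
    // Hypothetical limit; the project's real maximum is not stated.
    static final int MAX_NODES = 10_000;

    static int shortestPath(int width, int height, int tx, int ty) {
        return shortestPath(width, height, tx, ty, MAX_NODES);
    }

    // BFS from (0,0) to (tx,ty) on an open width x height grid.
    // Returns the path length in steps, or -1 if the target is unreachable
    // or the node budget runs out first.
    static int shortestPath(int width, int height, int tx, int ty, int maxNodes) {
        int[][] dirs = {{1, 0}, {-1, 0}, {0, 1}, {0, -1}};
        ArrayDeque<int[]> queue = new ArrayDeque<>();
        Set<Long> seen = new HashSet<>();
        queue.add(new int[] {0, 0, 0});
        seen.add(key(0, 0));
        int expanded = 0;
        while (!queue.isEmpty()) {
            // The guard the buggy version skipped: stop instead of letting the queue grow unbounded.
            if (++expanded > maxNodes) return -1;
            int[] cur = queue.poll();
            if (cur[0] == tx && cur[1] == ty) return cur[2];
            for (int[] d : dirs) {
                int nx = cur[0] + d[0], ny = cur[1] + d[1];
                if (nx < 0 || ny < 0 || nx >= width || ny >= height) continue;
                if (seen.add(key(nx, ny))) queue.add(new int[] {nx, ny, cur[2] + 1});
            }
        }
        return -1;
    }

    // Packs two ints into one long so the visited set needs no Position objects.
    private static long key(int x, int y) {
        return ((long) x << 32) | (y & 0xffffffffL);
    }
}
```

Because each expansion adds at most four entries, capping expansions also caps the queue size, which is what keeps memory bounded.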

Memory graph after solving the problem:

One option is to avoid allocating and freeing memory, especially frequently and in large amounts.
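One common way to do this is an object pool: released nodes go onto a free list and are reused instead of being allocated again. A minimal single-threaded sketch; `NodePool`, `PooledNode` and its fields are hypothetical, and each worker thread would need its own pool (or synchronization).

```java
import java.util.ArrayDeque;

final class NodePool {
    // Mutable node so instances can be recycled (fields are hypothetical).
    static final class PooledNode {
        int x, y, cost;
        PooledNode parent;

        PooledNode set(int x, int y, int cost, PooledNode parent) {
            this.x = x; this.y = y; this.cost = cost; this.parent = parent;
            return this;
        }
    }

    private final ArrayDeque<PooledNode> free = new ArrayDeque<>();
    private int created; // number of nodes ever allocated by this pool

    // Reuses a released node if one is available, otherwise allocates a new one.
    PooledNode acquire(int x, int y, int cost, PooledNode parent) {
        PooledNode n = free.poll();
        if (n == null) {
            n = new PooledNode();
            created++;
        }
        return n.set(x, y, cost, parent);
    }

    // Returns a node to the free list; clearing parent avoids keeping other nodes reachable.
    void release(PooledNode n) {
        n.parent = null;
        free.push(n);
    }

    int created() { return created; }
}
```

The trade-off is manual lifetime management: a node released while something still references it will be silently overwritten on the next `acquire`.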

The nodes only change when the level itself changes (or when objects that affect map passability move), and those changes are small relative to the total number of nodes. So you can keep a global pool of nodes and have the local HashMap store references to the nodes you need. As a result, your nodes will quickly stop being young objects and the GC will not scan them (or will, but rarely; I didn't fully understand this point from the Oracle docs).
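A minimal sketch of that idea, assuming a rectangular grid: one `Cell` object per coordinate is created when the level loads, and each search only stores references to those shared cells. `SharedGrid`, `Cell` and `walkRight` are hypothetical names. Per-search state (such as `cameFrom`) stays in the local map, because shared cells cannot hold it when several threads search at once.

```java
import java.util.HashMap;
import java.util.Map;

final class SharedGrid {
    // Long-lived cells, one per coordinate. After surviving a few collections
    // they are promoted to the old generation and are no longer copied by
    // every young collection.
    static final class Cell {
        final int x, y;
        volatile boolean passable; // changes only when the level changes

        Cell(int x, int y, boolean passable) {
            this.x = x; this.y = y; this.passable = passable;
        }
    }

    private final Cell[][] cells;

    SharedGrid(int width, int height) {
        cells = new Cell[width][height];
        for (int x = 0; x < width; x++)
            for (int y = 0; y < height; y++)
                cells[x][y] = new Cell(x, y, true);
    }

    Cell at(int x, int y) { return cells[x][y]; }

    void setPassable(int x, int y, boolean passable) { cells[x][y].passable = passable; }

    // Hypothetical search step: walk right along row y, recording each cell's predecessor.
    // Cells keep identity equals/hashCode, which is correct because each coordinate
    // has exactly one Cell instance; no Position objects are allocated per search.
    Map<Cell, Cell> walkRight(int y, int fromX, int toX) {
        Map<Cell, Cell> cameFrom = new HashMap<>();
        for (int x = fromX; x < toX; x++) {
            Cell next = at(x + 1, y);
            if (!next.passable) break;
            cameFrom.put(next, at(x, y));
        }
        return cameFrom;
    }
}
```

The local HashMap still allocates one entry per `put`, so this removes the Node/Position allocations but not all per-search garbage.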

Another option is to use a different garbage collector (see the documentation).

And on Habr, people advise increasing the size of the Permanent Generation.
