
How do I keep a heavy cron script from bringing down the site?

The script performs the following steps:

1. Downloads a large gz archive.

2. Unpacks it and reads the nested XML.

3. Imports the objects from the XML into the database and downloads their photos.
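Steps 1 and 2 are where memory usage tends to explode if a whole file is read at once. Below is a minimal sketch in Python (the thread is about PHP, so this is a swapped-in illustration, not the poster's code) that decompresses a `.gz` file to disk in fixed-size chunks. The function name `gunzip_to_file` and the 1 MiB chunk size are hypothetical choices:

```python
import gzip
import shutil


def gunzip_to_file(src_path: str, dst_path: str, chunk_size: int = 1024 * 1024) -> None:
    """Decompress the .gz file at src_path into dst_path, one chunk at a time."""
    # gzip.open decompresses lazily as it is read, and copyfileobj moves at most
    # chunk_size bytes per iteration, so memory use stays flat regardless of file size.
    with gzip.open(src_path, "rb") as src, open(dst_path, "wb") as dst:
        shutil.copyfileobj(src, dst, chunk_size)
```

The decompressed file can then be parsed with a streaming reader instead of being loaded whole.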

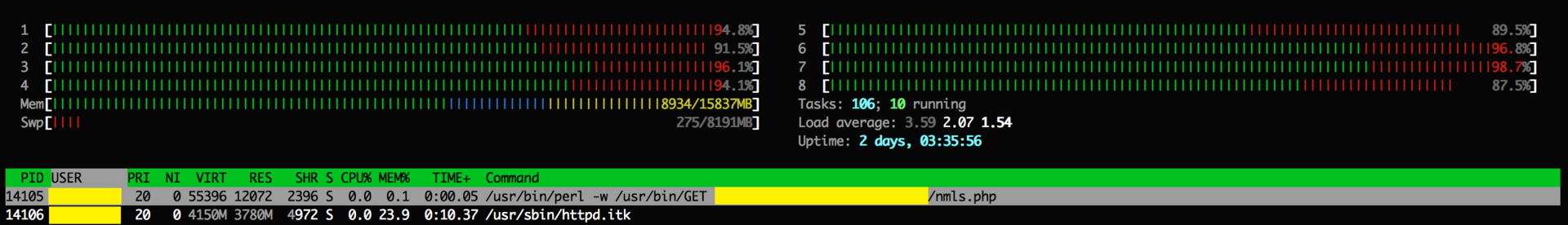

When monitoring the performance, the server shows:

With such a load, the site becomes unavailable.

Tell me what to do, is it possible to limit the number of processes used by the script.

And what should be avoided in the script itself?
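A common way to keep a cron job from starving the web server is to run it at a lower CPU priority, cap its memory, and make sure two runs never overlap. In a crontab this is often done with the `nice`, `ionice` and `flock` utilities. The Python sketch below does the equivalent from inside the script. The function names, lock path and limits are hypothetical, and it assumes a Unix system (`fcntl` and `resource` are not available on Windows):

```python
import fcntl
import os
import resource
from typing import IO, Optional


def acquire_single_instance_lock(lock_path: str) -> Optional[IO[str]]:
    """Return an open file holding an exclusive lock, or None if another run holds it."""
    lock_file = open(lock_path, "w")
    try:
        # LOCK_NB makes flock fail immediately instead of waiting for the other run.
        fcntl.flock(lock_file, fcntl.LOCK_EX | fcntl.LOCK_NB)
    except BlockingIOError:
        lock_file.close()
        return None
    # The lock lasts as long as this file stays open, so keep the returned object alive.
    return lock_file


def limit_resources(niceness: int = 10, max_memory_bytes: Optional[int] = None) -> None:
    """Lower CPU priority and optionally cap the address space of this process."""
    # A higher niceness means the scheduler favors the web server's processes.
    os.nice(niceness)
    if max_memory_bytes is not None:
        # Only the soft limit is lowered; allocations past it raise MemoryError
        # in this process instead of pushing the whole server into swap.
        _, hard = resource.getrlimit(resource.RLIMIT_AS)
        resource.setrlimit(resource.RLIMIT_AS, (max_memory_bytes, hard))
```

At the top of the job, something like `lock = acquire_single_instance_lock("/tmp/import.lock")` (hypothetical path) would be called. If it returns `None`, the previous run is still going and the new one should simply exit.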


I found the problem in the script. The `simplexml_load_file` function was loading the whole huge XML file into memory and used up all the RAM, which made the server hang. After I rewrote the script to use the `XMLReader` class, it stopped eating all the RAM. I followed this example: how to use XMLReader.

Thanks to Adamos for the tip:

I used XMLReader to parse the file.
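The fix described above, streaming the XML instead of loading it whole, looks roughly like this in Python, where `xml.etree.ElementTree.iterparse` plays the role of PHP's `XMLReader`. This is a swapped-in sketch, not the poster's code. The `<object>` tag name, the flat child layout and the name `iter_objects` are assumptions about the feed:

```python
import gzip
import xml.etree.ElementTree as ET
from typing import Dict, Iterator, List


def iter_objects(gz_path: str, tag: str = "object") -> Iterator[Dict[str, str]]:
    """Stream <tag> records out of a gzipped XML file without building the full tree."""
    with gzip.open(gz_path, "rb") as f:
        stack: List[ET.Element] = []  # elements that are currently open
        # iterparse consumes the input incrementally instead of parsing it all up front.
        for event, elem in ET.iterparse(f, events=("start", "end")):
            if event == "start":
                stack.append(elem)
                continue
            stack.pop()  # elem is now complete
            if elem.tag == tag:
                # Copy out the child values before discarding the element.
                record = {child.tag: (child.text or "").strip() for child in elem}
                if stack:
                    # Detach from the parent so finished records can be garbage-collected.
                    stack[-1].remove(elem)
                yield record
```

Each yielded record can be written to the database (and its photo queued) before the next one is parsed, so memory stays roughly constant however large the feed grows.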

Hello, OP.

Where does the script download the archive from? Network load is also worth looking into.

Please post the script; it would help in thinking through a solution.
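On the network side, one option is to cap the download rate so the import does not saturate the site's uplink (`curl --limit-rate` and `wget --limit-rate` do this on the command line). Below is a Python sketch of a throttled stream copy. `copy_throttled` and the rate value are hypothetical, and `src` could be, for example, the response returned by `urllib.request.urlopen`:

```python
import time
from typing import BinaryIO


def copy_throttled(src: BinaryIO, dst: BinaryIO, max_bytes_per_sec: float,
                   chunk_size: int = 64 * 1024) -> int:
    """Copy src to dst without exceeding max_bytes_per_sec on average; return bytes copied."""
    start = time.monotonic()
    total = 0
    while True:
        chunk = src.read(chunk_size)
        if not chunk:
            break
        dst.write(chunk)
        total += len(chunk)
        # Earliest moment at which `total` bytes are allowed at the target rate;
        # if we are ahead of schedule, sleep until then.
        delay = start + total / max_bytes_per_sec - time.monotonic()
        if delay > 0:
            time.sleep(delay)
    return total
```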

At which step does the load spike? If it happens during unpacking, see: How to extract certain files from a tar archive
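If the archive is a `.tar.gz` (an assumption; a plain `.gz` holds a single file), you can extract only the members you need while the archive is read sequentially. Below is a Python sketch using the standard `tarfile` module. `extract_matching` and the `.xml` filter are hypothetical:

```python
import os
import shutil
import tarfile
from typing import List


def extract_matching(archive_path: str, dest_dir: str, suffix: str = ".xml") -> List[str]:
    """Extract only regular files whose names end with suffix; return their archive names."""
    os.makedirs(dest_dir, exist_ok=True)
    extracted = []
    # "r:gz" opens a gzip-compressed tar; iterating reads members one after another.
    with tarfile.open(archive_path, "r:gz") as tar:
        for member in tar:
            if not (member.isfile() and member.name.endswith(suffix)):
                continue
            # Write under the bare file name: this flattens subdirectories and
            # prevents a crafted member name like "../x" from escaping dest_dir.
            target = os.path.join(dest_dir, os.path.basename(member.name))
            src = tar.extractfile(member)
            with src, open(target, "wb") as dst:
                shutil.copyfileobj(src, dst)
            extracted.append(member.name)
    return extracted
```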

A load average of 3.5 on 8 cores does not look like enough to make the site unavailable.
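The reasoning is simple arithmetic: divide the load average by the number of cores. 3.5 / 8 ≈ 0.44, so on average only about 3.5 of the 8 cores have work to do. Below is a small Python helper. The function names are hypothetical, and `os.getloadavg` is Unix-only. On Linux the load average also counts processes blocked on disk I/O, so a low value does not rule out an I/O bottleneck:

```python
import os


def load_per_core(load_avg: float, cores: int) -> float:
    """Average number of runnable (or I/O-blocked) tasks per core."""
    return load_avg / cores


def current_load_per_core() -> float:
    """1-minute load average divided by the number of CPUs (Unix only)."""
    one_minute, _, _ = os.getloadavg()
    return load_per_core(one_minute, os.cpu_count() or 1)


# The figure from the thread: 3.5 spread over 8 cores.
print(load_per_core(3.5, 8))  # 0.4375
```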

Maybe the problem is a table lock while the database is being updated?
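If the import writes everything in one long transaction (or the table engine locks the whole table, as MyISAM does in MySQL), the site's queries against that table wait until the import finishes. Committing in small batches keeps each lock short. Below is a sketch that uses Python's built-in `sqlite3` as a stand-in for the real database. The `objects(id, name)` table, the function names and the batch size are hypothetical:

```python
import sqlite3
from typing import Iterable, List, Tuple

Row = Tuple[int, str]


def _write_batch(conn: sqlite3.Connection, batch: List[Row]) -> int:
    # "with conn" commits when the block succeeds and rolls back on error,
    # so the write lock is held only for this one batch.
    with conn:
        conn.executemany("INSERT OR REPLACE INTO objects (id, name) VALUES (?, ?)", batch)
    return len(batch)


def upsert_in_batches(conn: sqlite3.Connection, rows: Iterable[Row], batch_size: int = 500) -> int:
    """Write rows in separate short transactions of at most batch_size rows."""
    total = 0
    batch: List[Row] = []
    for row in rows:
        batch.append(row)
        if len(batch) >= batch_size:
            total += _write_batch(conn, batch)
            batch = []
    if batch:
        total += _write_batch(conn, batch)
    return total
```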
