
ffmpeg: how to programmatically record a stream in real time?

I'm trying to record a stream of frames. Eventually the frames will come from a capture device; for now, as a test, I capture frames from the screen.

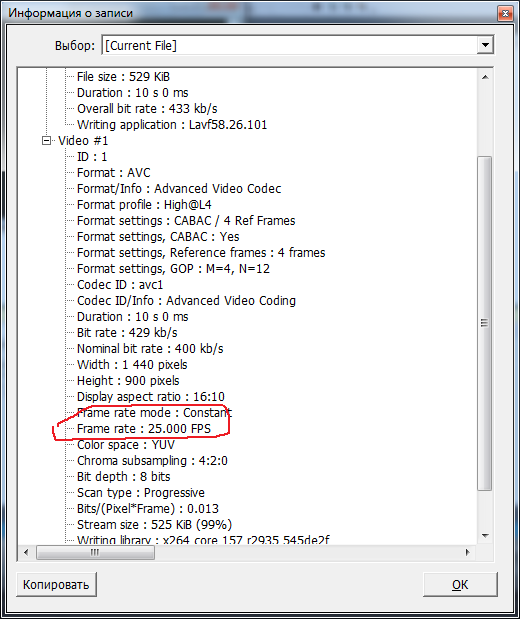

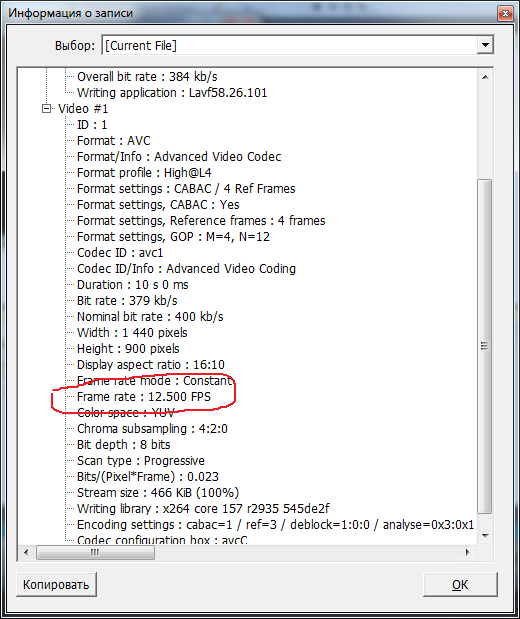

Here is the problem. If the frames are numbered sequentially, ffmpeg faithfully writes 25 frames per second to the output file. But my capture will run in real time, which means some frames will be dropped. I simulated this by incrementing the frame counter by 2 at each step instead of by 1.

The output file then comes out at 12.5 fps, but it should be 25 fps. What parameter should I set, and what am I doing wrong?

```cpp
#include <iostream>
#include <string>
#include <conio.h>
#include <Windows.h>

extern "C"
{
#include <libavcodec/avcodec.h>
#include <libavutil/avassert.h>
#include <libavutil/channel_layout.h>
#include <libavutil/opt.h>
#include <libavutil/mathematics.h>
#include <libavutil/timestamp.h>
#include <libavformat/avformat.h>
#include <libswscale/swscale.h>
#include <libswresample/swresample.h>
#include <libavutil/imgutils.h>
}

using namespace std;

// C++ replacement for av_err2str
#ifdef __cplusplus
static const std::string av_make_error_string(int errnum)
{
    char errbuf[AV_ERROR_MAX_STRING_SIZE];
    av_strerror(errnum, errbuf, AV_ERROR_MAX_STRING_SIZE);
    return (std::string)errbuf;
}
#undef av_err2str
#define av_err2str(errnum) av_make_error_string(errnum).c_str()
#endif // __cplusplus

#define STREAM_DURATION 10.0
#define STREAM_FRAME_RATE 25 /* 25 images/s */
#define STREAM_PIX_FMT AV_PIX_FMT_BGRA // AV_PIX_FMT_BGRA AV_PIX_FMT_RGBA AV_PIX_FMT_ARGB
#define CODEC_PIX_FMT AV_PIX_FMT_YUV420P //AV_PIX_FMT_YUV420P AV_PIX_FMT_NV12 AV_PIX_FMT_YUVJ420P AV_PIX_FMT_BGR24
#define FRAME_WIDTH 1440
#define FRAME_HEIGHT 900
#define SCALE_FLAGS SWS_BICUBIC

AVCodecContext *cc = NULL;
AVFormatContext *oc = NULL;
const AVOutputFormat *fmt = NULL;  // const: FFmpeg 5.0+ returns const pointers here
AVDictionary *opt = NULL;
const AVCodec *video_codec = NULL; // const: FFmpeg 5.0+ returns const pointers here
AVStream *video_stream = NULL;
AVFrame *video_frame = NULL;
int64_t next_pts = 0;
SwsContext *sws_ctx = 0;

static AVFrame *alloc_picture(enum AVPixelFormat pix_fmt, int width, int height)
{
    AVFrame *picture;
    int ret;

    picture = av_frame_alloc();
    if (!picture)
        return NULL;

    picture->format = pix_fmt;
    picture->width = width;
    picture->height = height;

    ret = av_frame_get_buffer(picture, 32);
    if (ret < 0) {
        fprintf(stderr, "Could not allocate frame data.\n");
        exit(1);
    }

    return picture;
}

bool bInit = false;
int nSimpleWidth = FRAME_WIDTH;
int nSimpleHeight = FRAME_HEIGHT;
int nSimpleStride = 0;
int nSimpleAlign = 0;
int bSimpleUseAlign = 0;
HWND hDesktopWnd;
HDC hDesktopDC;
HDC hCaptureDC;
int cadrSize = 0;
BITMAPINFO m_bmiSimple;
int m_bSimpleBottomUpImg = 0;
BYTE *m_bSimpleData = 0;
HBITMAP hCaptureBitmap = 0;
BOOL res = 0;
LARGE_INTEGER StartingTime, EndingTime, ElapsedMicroseconds;
LARGE_INTEGER Frequency;
int startFrame = 0;
int frameCounter = 0;
int ofps = 40;
int globalStat = 0;

static AVFrame *get_video_frame()
{
    // Stop once the stream time reaches STREAM_DURATION seconds.
    if (av_compare_ts(next_pts, cc->time_base, STREAM_DURATION, AVRational{ 1, 1 }) >= 0)
    {
        return NULL;
    }

    if (av_frame_make_writable(video_frame) < 0)
        exit(1);

    if (!bInit)
    {
        /**
         * Frame capture setup.
         **/
    }

    if (bInit)
    {
        BOOL res = BitBlt(hCaptureDC, 0, 0, nSimpleWidth, nSimpleHeight, hDesktopDC, 0, 0, SRCCOPY | CAPTUREBLT);

        if (cc->pix_fmt != STREAM_PIX_FMT)
        {
            sws_scale(sws_ctx, &m_bSimpleData, &nSimpleStride, 0, cc->height, video_frame->data, video_frame->linesize);
        }
        else
        {
            memcpy_s(video_frame->data[0], cadrSize, m_bSimpleData, cadrSize);
        }
    }

    if (!startFrame)
    {
        startFrame = 1;
        frameCounter = 0;
        next_pts = 0;
    }
    else
    {
        // Time calculation.
        //++frameCounter;
        frameCounter += 2; // Emulate a skipped frame.
    }

    // Set the frame time.
    //ost->frame->pts = ost->next_pts++;
    next_pts = frameCounter;
    video_frame->pts = next_pts;

    cout << "pts: " << video_frame->pts << endl;
    ++globalStat;

    return video_frame;
}

static int write_frame(AVFormatContext *fmt_ctx, const AVRational *time_base, AVStream *st, AVPacket *pkt)
{
    av_packet_rescale_ts(pkt, *time_base, st->time_base);
    pkt->stream_index = st->index;
    return av_interleaved_write_frame(fmt_ctx, pkt);
}

static int write_video_frame()
{
    int ret;
    int ret1;
    int flush = 0;
    AVFrame *frame;
    int got_packet = 0;
    AVPacket pkt = { 0 };

    frame = get_video_frame();
    av_init_packet(&pkt);

    ret = avcodec_send_frame(cc, frame);
    if (ret < 0) {
        fprintf(stderr, "Error sending a frame for video encoding\n");
        if (ret == AVERROR(EAGAIN))
        {
            fprintf(stderr, "Error eagain\n");
        }
        else if (ret == AVERROR_EOF)
        {
            flush = 1;
            fprintf(stderr, "Error EOF\n");
        }
        else if (ret == AVERROR(EINVAL))
        {
            fprintf(stderr, "Error EINVAL\n");
        }
        else if (ret == AVERROR(ENOMEM))
        {
            fprintf(stderr, "Error ENOMEM\n");
        }

        if (ret != AVERROR_EOF)
        {
            exit(1);
        }
    }

    while (flush || ret >= 0) {
        got_packet = 1;
        ret = avcodec_receive_packet(cc, &pkt);
        if (ret == AVERROR(EAGAIN) || ret == AVERROR_EOF)
        {
            got_packet = 0;
            flush = 0;
            break;
        }
        else if (ret < 0)
        {
            fprintf(stderr, "Error during encoding\n");
            exit(1);
        }

        ret1 = write_frame(oc, &cc->time_base, video_stream, &pkt);
        if (ret1 < 0) {
            fprintf(stderr, "Error while writing video frame: %s\n",
                    av_err2str(ret1));
            exit(1);
        }
    }

    return (frame || got_packet) ? 0 : 1;
}

// Processing.
int process()
{
    int ret = 0;
    const char *filename = "D:\\capture_test.mp4";

    avformat_alloc_output_context2(&oc, NULL, NULL, filename);
    if (!oc)
    {
        cout << "Could not deduce output format from file name." << endl;
        avformat_alloc_output_context2(&oc, NULL, "mpeg", filename);
    }
    if (!oc)
    {
        cout << "Could not open file in MPEG format." << endl;
        return 0;
    }

    fmt = oc->oformat;
    if (fmt->video_codec == AV_CODEC_ID_NONE)
    {
        cout << "Video codec is not supported." << endl;
    }

    video_codec = avcodec_find_encoder(fmt->video_codec);
    if (!video_codec)
    {
        cout << "Could not find encoder for " << avcodec_get_name(fmt->video_codec) << endl;
        return 0;
    }

    video_stream = avformat_new_stream(oc, NULL);
    if (!video_stream)
    {
        cout << "Could not allocate stream" << endl;
        return 0;
    }
    video_stream->id = oc->nb_streams - 1;
    // Frame rate is frames per second: {25, 1}, not {1, 25}.
    video_stream->avg_frame_rate = AVRational{ STREAM_FRAME_RATE, 1 };

    cc = avcodec_alloc_context3(video_codec);
    if (!cc)
    {
        cout << "Could not alloc an encoding context" << endl;
        return 0;
    }

    cc->codec_id = video_codec->id;
    cc->bit_rate = 400000;
    cc->width = FRAME_WIDTH;
    cc->height = FRAME_HEIGHT;
    cc->gop_size = 12;
    cc->pix_fmt = CODEC_PIX_FMT;

    if (cc->codec_id == AV_CODEC_ID_MPEG2VIDEO)
    {
        cc->max_b_frames = 2;
    }
    if (cc->codec_id == AV_CODEC_ID_MPEG1VIDEO)
    {
        cc->mb_decision = 2;
    }

    video_stream->time_base = AVRational{ 1, STREAM_FRAME_RATE };
    cc->time_base = video_stream->time_base;

    if (fmt->flags & AVFMT_GLOBALHEADER) cc->flags |= AV_CODEC_FLAG_GLOBAL_HEADER;

    video_frame = alloc_picture(cc->pix_fmt, cc->width, cc->height);
    if (!video_frame)
    {
        cout << "Could not allocate video frame" << endl;
        return 0;
    }

    // Open the codec.
    ret = avcodec_open2(cc, video_codec, NULL);
    if (ret < 0)
    {
        cout << "Could not open video codec: " << av_err2str(ret) << endl;
        return 0;
    }

    /* copy the stream parameters to the muxer */
    ret = avcodec_parameters_from_context(video_stream->codecpar, cc);
    if (ret < 0)
    {
        cout << "Could not copy the stream parameters" << endl;
        return 0;
    }

    av_dump_format(oc, 0, filename, 1);

    /* open the output file, if needed */
    if (!(fmt->flags & AVFMT_NOFILE))
    {
        ret = avio_open(&oc->pb, filename, AVIO_FLAG_WRITE);
        if (ret < 0)
        {
            cout << "Could not open " << filename << " : " << av_err2str(ret) << endl;
            return 0;
        }
    }

    ret = avformat_write_header(oc, &opt);
    if (ret < 0)
    {
        cout << "Error occurred when opening output file: " << av_err2str(ret) << endl;
        return 0;
    }

    // Write data.
    int encode_video = 1;
    while (encode_video)
    {
        cout << "Thread video 1" << endl;
        encode_video = !write_video_frame();
    }

    // Close.
    av_write_trailer(oc);

    /* Close each codec. */
    avcodec_free_context(&cc);

    if (!(fmt->flags & AVFMT_NOFILE))
    {
        avio_closep(&oc->pb);
    }

    /* free the stream */
    avformat_free_context(oc);

    return 0;
}

int main()
{
    setlocale(LC_ALL, "Russian");
    process();
    _getch();
    return 0;
}
```


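With `time_base = 1/25`, a pts that advances by 2 per frame means each frame lasts 2/25 s, so the file really does play at 12.5 frames per second; ffmpeg is simply honoring the timestamps it was given. In real time, a frame counter carries no timing information, so you need a numbering that does.

Use the current timestamp (the time elapsed since capture started) as the frame's pts instead of a frame number.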
