15 Docker instances: what is eating the memory?

Hello everyone, I'm running puppeteer on Node.js inside a Docker container.

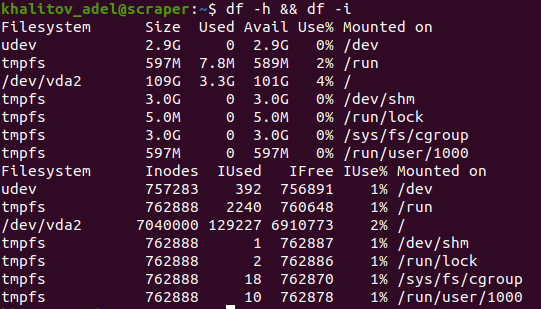

I rented a virtual server from Yandex: 6 GB RAM, 6 cores, 70-110 GB SSD.

I brought up 15 instances of this Docker container. Each instance launches a browser, opens the site, collects the data, and closes the browser, so it only ever works with one browser and one tab at a time. This cycle repeats indefinitely. After 2-3 hours the memory runs out, and I can't figure out what is consuming it.
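The per-container cycle described above (launch, visit, extract, close, repeat) can be sketched as follows. This is a minimal sketch assuming puppeteer's `launch()`, `newPage()`, `goto()`, `close()` and `process()` calls; `scrapeOnce`, `scrapeLoop`, `extract` and `onResult` are hypothetical names, not the poster's code, and the launcher is passed in so the loop can be exercised without Chrome. What it illustrates is that `close()` runs in a `finally`, so a failed navigation does not leave a browser running.

```javascript
// Hypothetical sketch of one container's scrape loop (puppeteer-style API assumed).
// `launch` must resolve to a browser exposing newPage(), close() and process().

async function scrapeOnce(launch, url, extract) {
  const browser = await launch();
  try {
    const page = await browser.newPage();
    await page.goto(url);
    return await extract(page);
  } finally {
    // Always close the browser, even when goto() or extract() throws;
    // otherwise each failed iteration can leave a Chrome process behind.
    try {
      await browser.close();
    } catch (closeErr) {
      // If close() itself fails, kill the main browser process as a last resort.
      const proc = browser.process();
      if (proc) proc.kill('SIGKILL');
    }
  }
}

// Runs `iterations` cycles (forever by default). Results are handed to
// `onResult` instead of being collected, so memory does not grow with each run.
async function scrapeLoop(launch, url, extract, onResult, iterations = Infinity) {
  let succeeded = 0;
  for (let i = 0; i < iterations; i++) {
    try {
      onResult(await scrapeOnce(launch, url, extract));
      succeeded++;
    } catch (err) {
      // Log and keep going; one bad page should not stop the worker.
      console.error(`iteration ${i} failed: ${err.message}`);
    }
  }
  return succeeded;
}
```

With puppeteer installed, a call might look like `scrapeLoop(() => puppeteer.launch(), 'https://example.com', (page) => page.title(), console.log)`. Passing results to a callback rather than accumulating them keeps an endless loop from holding on to every past result.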

Jun 14 18:41:21 scraper sshd[6681]: fatal: fork of unprivileged child failed

Jun 14 18:42:31 scraper sshd[6681]: message repeated 3 times: [ fatal: fork of unprivileged child failed]

Jun 14 19:17:01 scraper CRON[6681]: pam_unix(cron:session): session opened for user root by (uid=0)

Jun 14 19:22:32 scraper sshd[819]: error: fork: No space left on device

Jun 14 19:22:32 scraper sshd[6681]: fatal: fork of unprivileged child failed

Jun 14 20:05:19 scraper sshd[819]: error: fork: No space left on device

Jun 14 20:07:21 scraper sshd[819]: error: fork: No space left on device

Jun 14 20:17:01 scraper CRON[30284]: pam_unix(cron:session): session opened for user root by (uid=0)

Jun 14 20:47:09 scraper sshd[819]: error: fork: No space left on device

Jun 14 20:51:12 scraper sshd[819]: message repeated 2 times: [ error: fork: No space left on device]

Jun 14 21:07:00 scraper sshd[30284]: fatal: fork of unprivileged child failed

Jun 14 21:09:24 scraper sshd[30284]: fatal: fork of unprivileged child failed

Jun 14 21:12:36 scraper sshd[819]: error: fork: No space left on device

Jun 14 21:16:18 scraper sshd[819]: message repeated 2 times: [ error: fork: No space left on device]

Running `docker ps -a` crashes as well:

runtime/cgo: pthread_create failed: No space left on device

runtime/cgo: pthread_create failed: No space left on device

SIGABRT: abort

PC=0x7f011dd42e97 m=7 sigcode=18446744073709551610

goroutine 0 [idle]:

runtime: unknown pc 0x7f011dd42e97

stack: frame={sp:0x7f011894a840, fp:0x0} stack=[0x7f011814b288,0x7f011894ae88)

00007f011894a740: 0000000000000000 0000000000000000

00007f011894a750: 0000000000000000 0000000000000000

00007f011894a760: 0000000000000000 0000000000000000

00007f011894a770: 0000000000000000 0000000000000000

00007f011894a780: 0000000000000000 0000000000000000

00007f011894a790: 0000000000000000 0000000000000000

00007f011894a7a0: 0000000000000000 0000000000000000

00007f011894a7b0: 0000000000000000 0000000000000000

00007f011894a7c0: 0000000000000000 0000000000000000

00007f011894a7d0: 0000000000000000 0000000000000000

00007f011894a7e0: 0000000000000000 0000000000000000

00007f011894a7f0: 0000000000000000 0000000000000000

00007f011894a800: 0000000000000000 0000000000000000

00007f011894a810: 0000000000000000 0000000000000000

00007f011894a820: 0000000000000000 0000000000000000

00007f011894a830: 00007f011894a860 000055c5324ba9fe <runtime.(*mTreap).end+78>

00007f011894a840: <0000000000000000 0000000000000001

00007f011894a850: 0000000000000000 0000000100000000

00007f011894a860: 00007f011894a8f0 000055c5324c43af <runtime.(*mheap).scavengeLocked+559>

00007f011894a870: 00007f011894a8a0 000055c5324ba9fe <runtime.(*mTreap).end+78>

00007f011894a880: 0000000000000000 000055c500000001

00007f011894a890: 0000000000000000 0000000100000038

00007f011894a8a0: 00007f011894a930 000055c5324c43af <runtime.(*mheap).scavengeLocked+559>

00007f011894a8b0: 000055c535626108 00007f011e700003

00007f011894a8c0: fffffffe7fffffff ffffffffffffffff

00007f011894a8d0: ffffffffffffffff ffffffffffffffff

00007f011894a8e0: ffffffffffffffff ffffffffffffffff

00007f011894a8f0: ffffffffffffffff ffffffffffffffff

00007f011894a900: ffffffffffffffff ffffffffffffffff

00007f011894a910: ffffffffffffffff ffffffffffffffff

00007f011894a920: ffffffffffffffff ffffffffffffffff

00007f011894a930: ffffffffffffffff ffffffffffffffff

goroutine 1 [runnable, locked to thread]:

reflect.resolveTypeOff(0x55c533ee18c0, 0xc0006dab20, 0x55c53423cb9b)

/usr/local/go/src/runtime/runtime1.go:483 +0x4e

github.com/docker/cli/vendor/github.com/modern-go/reflect2.loadGo17Types()

/go/src/github.com/docker/cli/vendor/github.com/modern-go/reflect2/type_map.go:70 +0x10d

github.com/docker/cli/vendor/github.com/modern-go/reflect2.init.0()

/go/src/github.com/docker/cli/vendor/github.com/modern-go/reflect2/type_map.go:28 +0x96

goroutine 21 [syscall]:

os/signal.signal_recv(0x55c5324f96b6)

/usr/local/go/src/runtime/sigqueue.go:147 +0x9e

os/signal.loop()

/usr/local/go/src/os/signal/signal_unix.go:23 +0x24

created by os/signal.init.0

/usr/local/go/src/os/signal/signal_unix.go:29 +0x43

goroutine 23 [chan receive]:

github.com/docker/cli/vendor/k8s.io/klog.(*loggingT).flushDaemon(0x55c53561f400)

/go/src/github.com/docker/cli/vendor/k8s.io/klog/klog.go:1010 +0x8d

created by github.com/docker/cli/vendor/k8s.io/klog.init.0

/go/src/github.com/docker/cli/vendor/k8s.io/klog/klog.go:411 +0xd8

rax 0x0

rbx 0x7f011e0f0840

rcx 0x7f011dd42e97

rdx 0x0

rdi 0x2

rsi 0x7f011894a840

rbp 0x55c533cde54a

rsp 0x7f011894a840

r8 0x0

r9 0x7f011894a840

r10 0x8

r11 0x246

r12 0x55c536102110

r13 0x0

r14 0x55c533c842b4

r15 0x0

rip 0x7f011dd42e97

rflags 0x246

cs 0x33

fs 0x0

gs 0x0

Thanks, everyone, each of you helped a little. The system itself is fine: physical memory is not being exhausted, and neither are the frames.

The cause and the fixes are described clearly in a post on Habr.

Applying them even reduced CPU usage.
