
Are there ready-made solutions or best practices for organizing backups with version control?

I would like to learn how to set up backups with versioning, so that older copies are kept.

Are there ready-made tools, or detailed instructions on how to set this up?

Googling turns up rsync for copying the files, but what should I use for versioning?

The essence of the problem:

When new files appear on the working machine, copy them to the backup machine right away (rsync is the likely candidate here).

Once a day, for example, take a snapshot of the backup and store it economically, i.e. keep only new or changed files, so that there is a history of changes covering the whole month (maybe Btrfs could be used for this somehow).

In general, are there any ideas on how best to implement this? Should I reinvent the wheel, or is there something ready-made?
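The first step (copy new files to the backup machine as soon as they appear) could be sketched with `inotifywait` from inotify-tools driving rsync. This is a minimal sketch, assuming inotify-tools and rsync are installed on the working machine; the script name `mirror.sh` and any source/destination paths are hypothetical placeholders.

```sh
#!/bin/sh
# Sketch: mirror a directory to a backup location whenever it changes.
# Assumes inotify-tools (inotifywait) and rsync are installed.
# Script name and paths are hypothetical placeholders.

# One rsync pass: copy new/changed files, remove files deleted at the source.
sync_once() {
    rsync -a --delete "$1/" "$2"
}

# Wait for filesystem events and re-run rsync after each one.
watch_and_sync() {
    src=$1 dest=$2
    sync_once "$src" "$dest"   # initial full pass
    # -m: keep monitoring, -r: recursive, -q: quiet; one output line per event.
    inotifywait -m -r -q -e close_write,create,delete,move --format '%w%f' "$src" |
    while read -r _changed; do
        sync_once "$src" "$dest"
    done
}

# Start watching only when called as: ./mirror.sh watch SRC DEST
if [ "${1:-}" = watch ]; then
    watch_and_sync "$2" "$3"
fi
```

The destination can also be remote, e.g. `./mirror.sh watch /home/user/work backup-host:/srv/backup/work/`. A burst of events triggers one rsync per event, so a real setup would probably want some debouncing.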


For more than a year now, my tool has been reliably creating Btrfs snapshots, limiting how many are kept depending on their age, and it can also back them up to an external Btrfs disk: https://github.com/nazar-pc/just-backup-btrfs
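The core idea can be sketched with the stock `btrfs` CLI: a read-only snapshot per day, then `btrfs send`/`btrfs receive` to copy it to an external Btrfs disk, sending only the difference from the previous snapshot when there is one. This is a minimal sketch, not the code of just-backup-btrfs; the subvolume, snapshot and mount paths are hypothetical, and it has to run as root on Btrfs.

```sh
#!/bin/sh
# Sketch: daily read-only Btrfs snapshots, replicated incrementally.
# Hypothetical paths; requires btrfs-progs and root.
SUBVOL=${SUBVOL:-/home}
SNAP_DIR=${SNAP_DIR:-/snapshots}
EXT_DIR=${EXT_DIR:-/mnt/external/snapshots}

# Path of the snapshot for a given date (YYYY-MM-DD) under directory $1.
snapshot_path() {
    printf '%s/home-%s\n' "$1" "$2"
}

backup_today() {
    new=$(snapshot_path "$SNAP_DIR" "$(date +%Y-%m-%d)")
    # Newest existing snapshot becomes the parent for an incremental send.
    prev=$(ls -1d "$SNAP_DIR"/home-* 2>/dev/null | tail -n 1)
    # btrfs send only accepts read-only snapshots, hence -r.
    btrfs subvolume snapshot -r "$SUBVOL" "$new"
    if [ -n "$prev" ]; then
        # Only the changes since $prev are written to the external disk.
        btrfs send -p "$prev" "$new" | btrfs receive "$EXT_DIR"
    else
        btrfs send "$new" | btrfs receive "$EXT_DIR"
    fi
}

if [ "${1:-}" = run ]; then
    backup_today
fi
```

An incremental send only works if the parent snapshot already exists on the external disk, so the first run sends everything. Age-based pruning of old snapshots (which the tool above handles) is left out here.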

Here is how I would do it with homegrown tools.

rsync sends data from your machine to a backup server. If the backup server runs ZFS, snapshots make everything simple: a cron job on the backup machine creates ZFS snapshots as often as you like, and another cron job keeps their number in check by deleting the extra ones. Without ZFS, LVM snapshots can be used instead.
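The two cron jobs could be combined into one script like the minimal sketch below, assuming ZFS on the backup server and root access. The dataset name `tank/backup`, the retention count of 30 and the `daily-YYYY-MM-DD` naming scheme are hypothetical choices for this example.

```sh
#!/bin/sh
# Sketch: run daily from cron on the backup server. Keeps the newest
# $KEEP snapshots named "<dataset>@daily-YYYY-MM-DD" and destroys older ones.
# DATASET and KEEP defaults are hypothetical; requires ZFS and root.
DATASET=${DATASET:-tank/backup}
KEEP=${KEEP:-30}

# Print every line of stdin except the last $1 (input sorted oldest first).
select_expired() {
    awk -v keep="$1" '{ line[NR] = $0 }
        END { for (i = 1; i <= NR - keep; i++) print line[i] }'
}

take_snapshot() {
    zfs snapshot "$DATASET@daily-$(date +%Y-%m-%d)"
}

prune_snapshots() {
    # -H: no header, -s creation: oldest first, -d 1: only this dataset.
    zfs list -H -t snapshot -o name -s creation -d 1 "$DATASET" |
        grep "^$DATASET@daily-" |
        select_expired "$KEEP" |
        while read -r snap; do
            zfs destroy "$snap"
        done
}

if [ "${1:-}" = run ]; then
    take_snapshot && prune_snapshots
fi
```

A crontab entry such as `30 3 * * * /usr/local/sbin/zfs-daily.sh run` (path hypothetical) would then take one snapshot a night and keep roughly a month of history.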

For a local network, BackupPC.

It runs like clockwork for both backup and restore. It keeps track of clients (for example, laptops that are only occasionally connected to the network) and backs them up when needed. There is a web interface, but you really need to read up on the settings first. Free, and no client software is required.

Or Bacula: not free, but powerful. It requires installing software on the clients.

We use both packages for different segments of the network. For Bacula we run the last free version, from when it was still open source; it has no web front end, so everything is done from the command line.

If you need real-time synchronization, https://syncthing.net/ or a similar tool is a better fit.

For backups I recommend rsnapshot (rsnapshot.org). It keeps multiple copies, and identical files are not duplicated thanks to hard links.
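The hard-link trick can be illustrated directly with `rsync --link-dest`: each snapshot looks like a full copy, but files unchanged since the previous snapshot are hard links to the same data. This is a minimal sketch of the idea, not rsnapshot's own code; it assumes rsync is installed and uses a hypothetical `<root>/<YYYY-MM-DD>/` layout.

```sh
#!/bin/sh
# Sketch: hard-linked snapshots with rsync --link-dest.
# Assumptions: rsync installed; snapshots live in <root>/<YYYY-MM-DD>/
# (a hypothetical layout chosen for this example).

# Print the newest snapshot directory under $1, or nothing if there is none.
latest_snapshot() {
    # ISO dates sort lexically, so the last entry is the newest.
    ls -1d "$1"/[0-9][0-9][0-9][0-9]-[0-9][0-9]-[0-9][0-9] 2>/dev/null | tail -n 1
}

# Copy $1 into $2/$3; unchanged files become hard links into the previous snapshot.
make_snapshot() {
    src=$1 name=$3
    mkdir -p "$2"
    # Absolute path, so --link-dest is not resolved relative to the destination.
    root=$(cd "$2" && pwd)
    prev=$(latest_snapshot "$root")
    if [ -n "$prev" ]; then
        rsync -a --delete --link-dest="$prev" "$src/" "$root/$name/"
    else
        rsync -a --delete "$src/" "$root/$name/"
    fi
}
```

Usage: `make_snapshot /home /backup/home "$(date +%Y-%m-%d)"` once a day. Removing an old snapshot with `rm -rf` frees only the data that no newer snapshot still links to.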

Try ZBackup + rsync (+ s3fs for Amazon S3).

```sh
# one-time setup: zbackup init --non-encrypted /my/backup/repo
tar -cf - /home | zbackup backup /my/backup/repo/backups/home-`date '+%Y-%m-%d'`.tar
# restore (change the date to get an older backup)
zbackup restore /my/backup/repo/backups/home-`date '+%Y-%m-%d'`.tar | tar -xf -
```

Some filesystems (most notably ZFS and Btrfs) provide deduplication features. They do so only at block level, though, without a sliding window, so they cannot cope with arbitrary byte insertions or deletions in the middle of the data.

Have a look at this:

it.werther-web.de/2011/10/23/migrate-rsnapshot-bas...

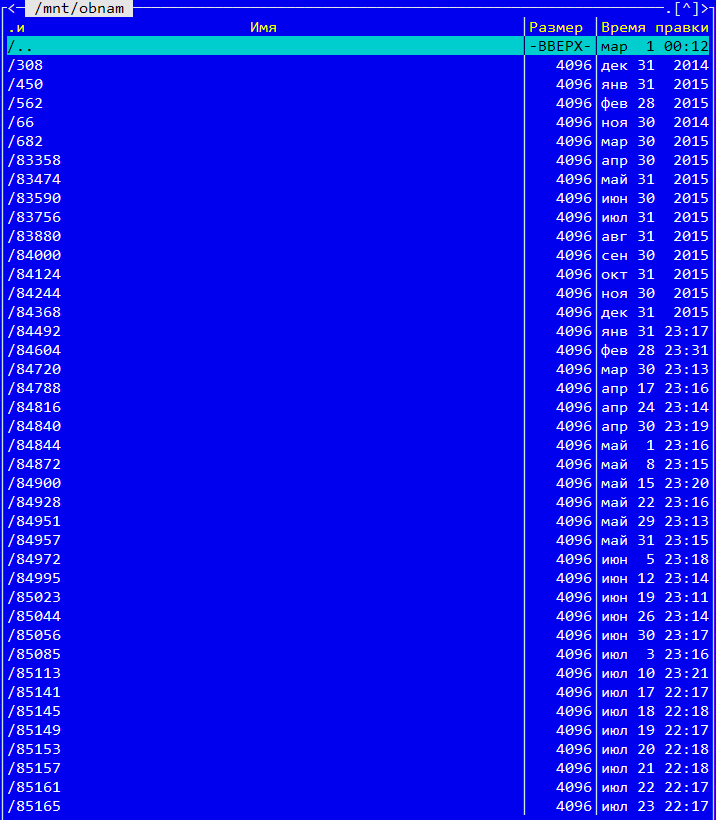

We currently use obnam. There are about 10 repositories with a total size of about 300 GB, and the history goes back to when we started using it, in December 2014. I have had to restore data several times already; everything works fine and fairly quickly.

To restore data, you mount the repository on a directory and get access to all versions (see screenshot).

Bontmia (a bash wrapper around rsync) fits the described task very well. It works much like Apple's Time Machine, creating a directory structure in which unchanged data is shared through hard links.

If you want to go further, you can install ZFS on the working machine (if it runs Linux) and send only snapshots to the backup server (ZFS supports this). Or, if you are willing to go to the trouble, archive with tar and use xdiff3 instead of a regular archiver.
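Sending only snapshots works with `zfs send -i`, which streams just the changes between two snapshots, and `zfs receive` on the backup server. A minimal sketch, assuming ZFS on both machines, SSH access as a user allowed to run `zfs receive`, and hypothetical dataset and host names (`rpool/home`, `backup-host`, `tank/home`):

```sh
#!/bin/sh
# Sketch: snapshot a local dataset and ship it incrementally over SSH.
# SRC_DS, BACKUP_HOST and DST_DS are hypothetical placeholders.
SRC_DS=${SRC_DS:-rpool/home}
BACKUP_HOST=${BACKUP_HOST:-backup-host}
DST_DS=${DST_DS:-tank/home}

# Print the line of stdin directly preceding $1 (empty if $1 is first or absent).
previous_of() {
    awk -v target="$1" '$0 == target { print prev; exit } { prev = $0 }'
}

replicate() {
    new="$SRC_DS@$(date +%Y-%m-%d)"
    zfs snapshot "$new"
    prev=$(zfs list -H -t snapshot -o name -s creation -d 1 "$SRC_DS" | previous_of "$new")
    if [ -n "$prev" ]; then
        # -i: send only the changes between $prev and $new.
        zfs send -i "$prev" "$new"
    else
        # First run: full stream.
        zfs send "$new"
    fi | ssh "$BACKUP_HOST" zfs receive -F "$DST_DS"
}

if [ "${1:-}" = run ]; then
    replicate
fi
```

The incremental stream applies only if the backup server already has `$prev`; `-F` rolls the target back to its latest snapshot before receiving.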
